A classmate of mine who got his Ph.D. in natural-language processing and now works at Google told me the following. It might be out of date and I might be remembering it wrong. But I just did a little, er, googling, and this seems to be passably well corroborated by other sources.

How it works

Google Translate is completely statistical. It has no model of grammar, syntax, or meaning. It works by correlating sequences of up to five consecutive words found in texts from both languages.

Here's the conceit. Ignore all the complexity, structure, and meaning of language and pretend that people speak just by randomly choosing one word after another. The only question now is how to calculate the probabilities. A simple way is to say that the probability of each word is determined by the previous word spoken. For example, if the last word you said was "two", there is a certain probability that your next word will be "or". If you just said "or", there is a certain probability that your next word will be "butane". You could calculate these word-to-next-word probabilities from their frequencies in real text. If you generate new text according to these probabilities, you'll get random but just slightly coherent gibberish: TWO OR BUTANE GAS AND OF THE SAME. That's called a Markov model. If you use a window of more words, say five, the resulting gibberish will look more likely to have been written by a schizophrenic than by an aphasic. A variation called a hidden Markov model introduces "states", where each state has its own set of probabilities for "emitting" words as well as a set of "transition" probabilities for what will be the next state. This can simulate a little more of the influence of context on each word choice.
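To make the one-word-window version concrete, here's a minimal sketch in Python. The corpus is a made-up sentence of mine and the function names are my own; this only illustrates the "previous word determines the next word" idea, not anything Google actually runs.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follow-counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}

def generate(counts, start, length, rng):
    """Emit words one at a time, each chosen only from what followed the last one."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # dead end: this word was never followed by anything
        words, weights = zip(*followers.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

# Tiny made-up corpus, purely for illustration.
corpus = "two or three cans of butane gas and two or more of the same gas"
counts = train_bigrams(corpus)

print(next_word_probs(counts, "or"))   # {'three': 0.5, 'more': 0.5}
print(generate(counts, "two", 8, random.Random(0)))  # random but locally plausible gibberish
```

Widening the window just means keying `counts` on the last several words instead of the last one. The catch is that the longer the key, the less often any particular key shows up in the training text.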

Google Translate's algorithm is proprietary, and I think it's a little more sophisticated than hidden Markov models, but the principle is the same. They let computers run on lots of text in each language, assigning probabilities to word sequences according to the principle "Assuming this text was generated by a random gibberish-generator, what probabilities maximize the chance that this exact text would have been generated?" Manually translated texts provide data to line up word sequences in one language with word sequences in another. Translation, then, is finding the highest-probability sequence from one language's gibberish-generator that corresponds to whatever makes the other language's gibberish-generator produce the input text.
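Here's a toy sketch of that "find the highest-probability sequence" step. It follows the textbook recipe for statistical translation (score each candidate by a translation probability times a target-language sequence probability), which I'm assuming is close in spirit to what's described above; I can't confirm it matches Google's proprietary system. All the numbers and table names are invented, and it translates word by word in the same order, which real systems do not.

```python
import itertools
import math

# Made-up translation scores: TRANSLATION[source_word][target_word].
# Spanish "banco" can mean either "bank" or "bench"; the table has no preference.
TRANSLATION = {
    "el": {"the": 1.0},
    "banco": {"bank": 0.5, "bench": 0.5},
    "presta": {"lends": 1.0},
    "dinero": {"money": 1.0},
}

# Made-up English bigram probabilities, as if counted from English text.
BIGRAM_LM = {
    ("<s>", "the"): 0.5,
    ("the", "bank"): 0.1,
    ("the", "bench"): 0.1,
    ("bank", "lends"): 0.2,
    ("lends", "money"): 0.3,
}
UNSEEN = 1e-6  # tiny probability for word pairs never seen in the English text

def log_score(source_words, target_words):
    """Log of (translation probability) x (target-language sequence probability)."""
    tm = sum(math.log(TRANSLATION[s][t]) for s, t in zip(source_words, target_words))
    padded = ["<s>"] + list(target_words)
    lm = sum(math.log(BIGRAM_LM.get(pair, UNSEEN)) for pair in zip(padded, padded[1:]))
    return tm + lm

def translate(source_sentence):
    """Try every combination of word choices and keep the highest-scoring one."""
    source_words = source_sentence.split()
    options = [TRANSLATION[s].keys() for s in source_words]
    best = max(itertools.product(*options), key=lambda cand: log_score(source_words, cand))
    return " ".join(best)

print(translate("el banco presta dinero"))  # the bank lends money
```

Nothing here knows that banks lend and benches don't. "bank" wins only because "bank lends" has been seen in the English counts and "bench lends" hasn't.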

What it's reliable for

Consequently, you won't learn much about what Google Translate is reliable for by trying out different grammatical structures. If you're lucky, all you'll get from that is an ELIZA effect. What Google Translate is most reliable for is translating documents produced by the United Nations between the languages in use there. This is because UN documents have provided a disproportionately large share of the manually translated texts from which Google Translate draws its five-word sequences.

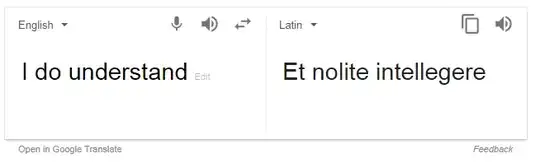

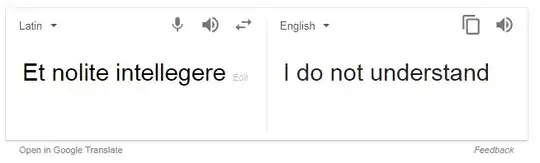

Witness what happens when I type this in:

À l'exception de ce qui peut être convenu dans les accords particuliers de tutelle conclus conformément aux Articles 77, 79 et 81 et plaçant chaque territoire sous le régime de tutelle, et jusqu'à ce que ces accords aient été conclus, aucune disposition du présent Chapitre ne sera interprétée comme modifiant directement ou indirectement en aucune manière les droits quelconques d'aucun État ou d'aucun peuple ou les dispositions d'actes internationaux en vigueur auxquels des Membres de l'Organisation peuvent être parties.

It gives me:

Except as may be agreed upon in the special guardianship agreements concluded in accordance with Articles 77, 79 and 81 and placing each territory under the trusteeship system, and until such agreements have been concluded, This Chapter shall not be construed as directly or indirectly modifying in any way the rights of any State or any people or the provisions of international instruments in force to which Members of the Organization may be parties.

Perfect! (Almost.)

This is why its Latin translations tend to be so poor: it has a very thin corpus of human-made translations of Latin on which to base its probability estimates—and, of course, it's using an approach that's based on probabilities of word sequences, disregarding grammar and meaning.

So, until the United Nations starts doing its business in Latin, Google Translate is not going to do a very good job. And even then, don't expect much unless you're translating text pasted from UN documents.

The five-word window

Here's an illustration of the five-word window. I enter:

Pants, as you expected, were worn.

Pants were worn.

Pants, as you expected, are worn.

The Latin translations (with my manual translations back to English):

Anhelat quemadmodum speravimus confecta. (He is panting just as we hoped accomplished.)

Braccas sunt attriti. (The trousers have been worn away [like "attrition"].)

Anhelat, ut spe teris. (He is panting, just as, by hope, you are wearing [something] out.)

Notice that the first and third sentences border on ungrammatical nonsense. The second sentence makes sense but it's ungrammatical; it should be Braccae sunt attritae. There aren't any five-word sequences in Google Translate's English database that line up well with "pants as you expected were/are," so it's flailing. Notice that in the third sentence, by the time it got to "worn", it had forgotten which sense of "pants" it chose at the start of the sentence. Or rather, it didn't forget, because it never tracked it. It only tracked five-word sequences.

So whether the sentence makes sense does affect the translation, but only indirectly, and the real situation is worse than that: what matters is exact, word-for-word matching with texts in the database.

Entering Latin into Google Translate (with words changed from the first sentence shown in bold):

Abraham vero aliam duxit uxorem nomine Cetthuram.

**Quintilianus** vero aliam duxit uxorem nomine Cetthuram.

Abraham vero aliam duxit uxorem nomine **Iuliam**.

Abraham vero **canem** duxit uxorem nomine **Fido**.

English output:

And Abraham took another wife, and her name was Keturah.

Quintilian, now the wife of another wife, and her name was Keturah.

And Abraham took another wife, and the name of his wife, a daughter named Julia.

And Abraham took a wife, and brought him to a dog by the name of Fido.

The Vulgate and the ASV translation (or similar) would appear to be among Google Translate's source texts. Notice what happens when the input is off by as little as one word.
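To see why one changed word does so much damage, here's a rough sketch that counts how many of a sentence's five-word windows still match a "database" exactly. The one-sentence database and the `coverage` function are hypothetical stand-ins of mine; I'm not claiming this is how Google Translate does its matching, only illustrating how fragile exact five-word matches are.

```python
import re

def ngrams(sentence, n=5):
    """All runs of n consecutive words, lowercased, punctuation dropped."""
    words = re.findall(r"\w+", sentence.lower())
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

def coverage(sentence, known, n=5):
    """Fraction of the sentence's n-word windows that appear in `known`."""
    grams = ngrams(sentence, n)
    if not grams:
        return 0.0
    return sum(g in known for g in grams) / len(grams)

# Hypothetical one-sentence stand-in for the translated texts in the database.
database = ["Abraham vero aliam duxit uxorem nomine Cetthuram."]
known = {g for line in database for g in ngrams(line)}

tests = [
    "Abraham vero aliam duxit uxorem nomine Cetthuram.",       # all 3 windows match
    "Quintilianus vero aliam duxit uxorem nomine Cetthuram.",  # 2 of 3 windows match
    "Abraham vero aliam duxit uxorem nomine Iuliam.",          # 2 of 3 windows match
    "Abraham vero canem duxit uxorem nomine Fido.",            # no window matches
]
for sentence in tests:
    print(f"{coverage(sentence, known):.2f}  {sentence}")
```

A seven-word sentence has only three five-word windows, so a single substitution at either end knocks out a third of them, and one in the middle knocks out all of them.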

The above explains just enough so that a layperson can understand what Google Translate is good at, what it's bad at, and why—and so they won't be misled by the results of experimenting with different grammatical structures. If you're interested in more rigorous and thorough information about the full complexities of this approach, google for "statistical machine translation". Some further info is here, including Google's rollout, now in progress, of an entirely new translation algorithm (which hasn't reached Latin yet).
