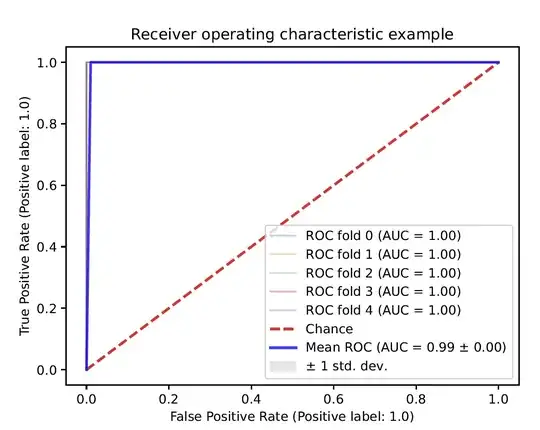

How can it be explained that the ROC curve suggests a nearly perfect classifier while the other metrics tell a different story?

The evaluation was done with 5-fold cross-validation.

| Fold | F1 | Precision | Recall | False positive rate | True positive rate |
|---|---|---|---|---|---|
| 1 | 0.724770642201835 | 0.797979797979798 | 0.6638655462184874 | 0.0007034451224873819 | 0.6638655462184874 |
| 2 | 0.8571428571428572 | 0.7878787878787878 | 0.9397590361445783 | 8.793064031092274e-05 | 0.9397590361445783 |
| 3 | 0.711111111111111 | 0.6530612244897959 | 0.7804878048780488 | 0.00031655030511932187 | 0.7804878048780488 |
| 4 | 0.8409090909090908 | 0.7551020408163265 | 0.9487179487179487 | 7.03445122487382e-05 | 0.9487179487179487 |
| 5 | 0.7380952380952381 | 0.6326530612244898 | 0.8857142857142857 | 0.0001406890244974764 | 0.8857142857142857 |
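For reference, here is a minimal sketch of how these metrics relate to one confusion matrix. The counts are hypothetical (100 positives against 50,000 negatives) and are not taken from my data; the helper names `confusion_metrics` and `precision_from_rates` are made up for illustration. As far as I understand, the point is that the false positive rate is divided by the number of negatives, while precision is divided by the number of predicted positives, so with heavy class imbalance a tiny false positive rate can coexist with a moderate precision.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Compute the metrics listed above from raw confusion-matrix counts."""
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # identical to the true positive rate
    fpr = fp / (fp + tn)         # share of negatives flagged as positive
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "fpr": fpr, "f1": f1}


def precision_from_rates(tpr, fpr, prevalence):
    """Recover precision from TPR, FPR and the fraction of positives."""
    true_pos = tpr * prevalence
    false_pos = fpr * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical, heavily imbalanced fold: 100 positives, 50,000 negatives.
metrics = confusion_metrics(tp=80, fp=20, fn=20, tn=49_980)
print(metrics)

# The same precision follows from the two ROC coordinates plus the prevalence.
prevalence = 100 / 50_100
print(precision_from_rates(metrics["recall"], metrics["fpr"], prevalence))
```

In this made-up example only 20 of 50,000 negatives are misclassified, which puts the operating point almost on the left edge of the ROC plot, yet those 20 false positives are still a fifth of all positive predictions.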