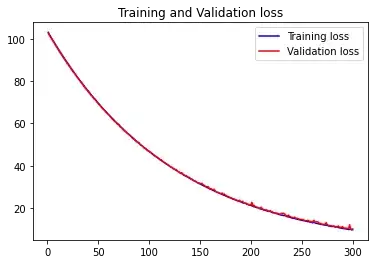

I know the basics of machine learning from Andrew Ng's course, but I'm quite new to putting it into practice with TensorFlow. I'm building a model with 12288 nodes in the input layer, 100 in the hidden layer, and 2 in the output layer. It receives low-resolution images and classifies whether or not they show a cat, but when I plot the graphs, they look like this:

I tried to find causes for this on sites that offer plot analysis like this one, but I wasn't successful. I also know that I can tweak the learning rate, the regularization, the number of nodes in the hidden layer, etc., but I'd like to understand the causes of this problem. So what could cause an accuracy graph that oscillates a LOT alongside a loss graph that decreases consistently?

More info:

The 12288 input nodes come from a low-resolution RGB image (64 * 64 * 3 = 12288), one for each pixel of each color channel. Their values originally range from 0 to 255, but I normalized them to the range 0 to 1 by dividing by 255.
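A minimal NumPy sketch of that preprocessing for a single image, assuming the pixels arrive as a `(64, 64, 3)` array of 8-bit values; `to_input_vector` is a hypothetical helper and the image is random stand-in data. Inside the model, `layers.Flatten()` performs the same reshape for each example while keeping the batch dimension.

```python
import numpy as np

def to_input_vector(image: np.ndarray) -> np.ndarray:
    """Scale 8-bit pixel values to [0, 1] and flatten to a single 1-D vector."""
    scaled = image.astype(np.float32) / 255.0  # 0..255 -> 0.0..1.0
    return scaled.reshape(-1)                   # one entry per pixel per channel

# Hypothetical stand-in for one 64x64 RGB image (random pixels, not real data).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)

vector = to_input_vector(image)
print(vector.shape)  # (12288,)
```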

My 3 layers are as follows:

```python
import tensorflow as tf
from tensorflow.keras import layers

model = tf.keras.Sequential()
model.add(layers.Flatten())  # 64x64x3 image -> 12288-element vector
model.add(layers.Dense(100, kernel_regularizer=tf.keras.regularizers.l2(0.1), kernel_initializer="random_uniform", bias_initializer="random_uniform", activation="relu"))
model.add(layers.Dense(2, kernel_initializer="random_uniform", bias_initializer="random_uniform", activation="softmax"))
```

My `model.compile` call is as follows:

```python
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.005), loss="categorical_crossentropy", metrics=["accuracy"])
```

My `model.fit` call is as follows:

```python
results = model.fit(Xtrainset, ytrainset, validation_data=(Xtestset, ytestset), batch_size=64, epochs=300, verbose=1)
```
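The numbers behind this configuration can be worked out by hand. Below is a small NumPy sketch (not Keras itself; the helpers `dense_params`, `categorical_crossentropy` and `l2_penalty` are hypothetical names) that counts the trainable parameters, which `model.summary()` should also report, and evaluates the two terms that make up the training loss: the categorical cross-entropy and the `l2(0.1)` penalty on the hidden layer's kernel, which Keras adds to the loss it reports during training. The initialization estimate assumes the `"random_uniform"` initializer's default range of [-0.05, 0.05].

```python
import numpy as np

def dense_params(n_in: int, n_out: int) -> int:
    """Trainable parameters of a Dense layer: an n_in x n_out kernel plus n_out biases."""
    return n_in * n_out + n_out

def categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray, eps: float = 1e-7) -> float:
    """Batch mean of -sum(y_true * log(y_pred)) for one-hot labels and softmax outputs."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

def l2_penalty(kernel: np.ndarray, factor: float = 0.1) -> float:
    """Term added by regularizers.l2(factor): factor * sum of squared kernel weights."""
    return float(factor * np.sum(np.square(kernel)))

# Parameter counts for the 12288 -> 100 -> 2 network (Flatten has none).
hidden = dense_params(64 * 64 * 3, 100)
output = dense_params(100, 2)
print(hidden, output, hidden + output)  # 1228900 202 1229102

# Cross-entropy of an uninformative 50/50 prediction on a two-example batch.
y_true = np.array([[1.0, 0.0], [0.0, 1.0]])
y_pred = np.array([[0.5, 0.5], [0.5, 0.5]])
print(round(categorical_crossentropy(y_true, y_pred), 4))  # 0.6931

# Expected L2 term at initialization for the 12288x100 kernel, assuming weights
# drawn uniformly from [-0.05, 0.05], for which E[w^2] = 0.05**2 / 3.
expected_penalty = 0.1 * (12288 * 100) * (0.05 ** 2 / 3)
print(round(expected_penalty, 1))  # 102.4
```

Under that assumption the penalty starts out roughly 150 times larger than the cross-entropy of a coin-flip prediction, so it may be worth checking how much of the steadily falling loss curve reflects the weights shrinking rather than the classification improving.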

Both curves for the training and validation sets are very unstable, meaning that small changes in your network's weights are causing large changes in the predictions.

You mention that your network consists of 12,000 inputs. Does this mean you are inputting the images simply as a vector of pixel values? Also, what loss function and what optimization method are you using?

– KirkD_CO Jul 22 '22 at 18:19
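The instability point can be made concrete: accuracy only records which side of 0.5 each prediction lands on, while cross-entropy measures how much probability goes to the true class. The sketch below uses made-up true-class probabilities for a hypothetical 10-example validation set (not the asker's data) and hypothetical helpers `crossentropy` and `accuracy`.

```python
import numpy as np

def crossentropy(p_true: np.ndarray) -> float:
    """Mean categorical cross-entropy, given each example's predicted probability for its true class."""
    return float(np.mean(-np.log(p_true)))

def accuracy(p_true: np.ndarray) -> float:
    """With two softmax outputs, an example counts as correct when its true-class probability is above 0.5."""
    return float(np.mean(p_true > 0.5))

# Made-up predictions (not real data).
# Before: 5 examples barely right (0.52), 5 confidently wrong (0.05).
before = np.array([0.52] * 5 + [0.05] * 5)
# After a small weight update: the barely-right ones slip to 0.48,
# while the badly wrong ones improve to 0.30.
after = np.array([0.48] * 5 + [0.30] * 5)

print(accuracy(before), accuracy(after))                              # 0.5 0.0
print(round(crossentropy(before), 2), round(crossentropy(after), 2))  # 1.82 0.97
```

Here accuracy drops from 50% to 0% while the loss almost halves: cross-entropy rewards the confidently wrong predictions becoming less wrong, whereas accuracy only registers the five predictions that crossed the 0.5 line.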