I am working on a binary classification problem in which 99.99% of the data is redundant. When I looked at the distributions of the two classes, they appear to be the same. Class imbalance is also part of the problem. The following is my analysis of the dataset:

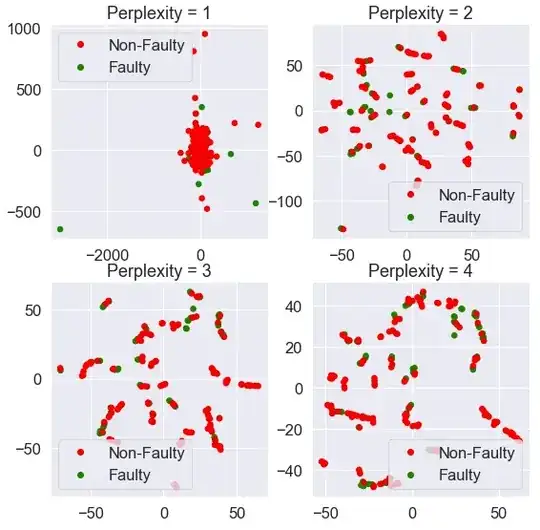

- t-SNE Plots

Generating t-SNE plots for different values (>4) produces the same kind of graph, with the classes on top of each other.

- PCA Analysis

The top PCA components have very low variances, spread across all of the features (total features = 42).
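Alongside the plots above, the redundancy itself can be quantified directly. The following is only a minimal sketch, assuming the rows are available as plain tuples of feature values and the labels as a parallel list; the function name `duplicate_summary` and the toy data are hypothetical and not taken from my actual dataset. It reports what fraction of rows are exact duplicates and what fraction sit in duplicate groups that contain both classes.

```python
from collections import Counter, defaultdict


def duplicate_summary(rows, labels):
    """Summarise exact-duplicate feature vectors and their label conflicts.

    rows   : iterable of feature vectors (anything convertible to a tuple)
    labels : iterable of class labels, same length as rows
    """
    # Map each distinct feature vector to a count of the labels seen with it.
    label_counts = defaultdict(Counter)
    for row, label in zip(rows, labels):
        label_counts[tuple(row)][label] += 1

    n_rows = sum(sum(c.values()) for c in label_counts.values())
    n_unique = len(label_counts)

    # Rows whose feature vector appears with more than one class label.
    n_conflicting = sum(
        sum(c.values()) for c in label_counts.values() if len(c) > 1
    )

    return {
        "redundancy": 1 - n_unique / n_rows,
        "conflicting_fraction": n_conflicting / n_rows,
    }


# Hypothetical toy data: three identical rows with mixed labels, one unique row.
toy_rows = [(1, 2), (1, 2), (1, 2), (3, 4)]
toy_labels = [0, 1, 0, 1]
print(duplicate_summary(toy_rows, toy_labels))
```

A high `conflicting_fraction` would suggest that the overlap seen in the plots is in the raw data rather than something introduced by the projections.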

I then trained an XGBoost classifier with SMOTE upsampling on the top 5 PCA components (together they explain 80% of the variance). I did k-fold cross-validation with hyperparameter tuning, and I analyzed the decision boundaries for different values of each XGBoost hyperparameter. My F1 score is 0.11, which is not a good score.
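With this much redundancy, one thing that may be worth checking in the cross-validation setup is whether identical rows end up in both the training and the validation part of a fold. The sketch below is an illustration only, not the procedure I actually ran: it assumes rows are tuples of feature values, and the helper name `grouped_folds` is hypothetical. It assigns fold indices so that all copies of a feature vector share one fold; any upsampling such as SMOTE would then be applied to the training folds only.

```python
def grouped_folds(rows, k=5):
    """Return one fold index per row so that identical rows share a fold.

    Distinct feature vectors are assigned to folds round-robin, in order of
    first appearance, so the assignment is deterministic.
    """
    fold_of_group = {}
    folds = []
    for row in rows:
        key = tuple(row)
        if key not in fold_of_group:
            # First time this feature vector is seen: give it the next fold.
            fold_of_group[key] = len(fold_of_group) % k
        folds.append(fold_of_group[key])
    return folds


# Hypothetical toy data: (1,) and (2,) each appear twice.
toy_rows = [(1,), (2,), (1,), (3,), (2,)]
folds = grouped_folds(toy_rows, k=2)

for fold in range(2):
    train_idx = [i for i, f in enumerate(folds) if f != fold]
    valid_idx = [i for i, f in enumerate(folds) if f == fold]
    # Oversampling (e.g. SMOTE) would be fitted on train_idx rows only here.
    print(fold, train_idx, valid_idx)
```

Round-robin assignment ignores group sizes and class balance, so the folds can be uneven; it is meant only to show the idea of keeping duplicates together.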

Can anyone share thoughts on this problem, given all the information I have provided? My main concern is that the underlying distribution might not be good from a learning point of view. Even if I deduplicate and train the model, I might get good results, but when it is tested on real-world data there will be too many points from both classes that have exactly the same values (distribution).
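To make this concern concrete: a model that is a deterministic function of the features has to give every copy of a feature vector the same prediction, so rows with identical values but different classes put a ceiling on the achievable F1. The following is a rough sketch of how that ceiling could be computed on a sample, assuming rows are tuples of feature values and the positive class is labelled `1`; the function name `f1_ceiling` and the toy data are hypothetical. It sorts the distinct feature vectors by their positive rate and evaluates F1 for every "predict the top groups as positive" cutoff, which is sufficient because the F1-optimal assignment is a threshold on the positive rate.

```python
from collections import defaultdict


def f1_ceiling(rows, labels, positive=1):
    """Best F1 on this sample for any classifier that must give identical
    rows identical predictions."""
    # counts[vector] = [number of positives, number of negatives]
    counts = defaultdict(lambda: [0, 0])
    for row, label in zip(rows, labels):
        counts[tuple(row)][0 if label == positive else 1] += 1

    total_pos = sum(p for p, _ in counts.values())

    # Groups with the highest share of positives are predicted positive first.
    groups = sorted(
        counts.values(), key=lambda c: c[0] / (c[0] + c[1]), reverse=True
    )

    best = 0.0
    tp = fp = 0
    for p, n in groups:
        tp += p
        fp += n
        fn = total_pos - tp
        best = max(best, 2 * tp / (2 * tp + fp + fn))
    return best


# Hypothetical toy data: vector "A" has 1 positive and 9 negatives,
# vector "B" has 10 negatives.
toy_rows = [("A",)] * 10 + [("B",)] * 10
toy_labels = [1] + [0] * 9 + [0] * 10
print(f1_ceiling(toy_rows, toy_labels))
```

This is an in-sample figure, so it says nothing about generalisation; but if it is already close to the F1 I am seeing, further tuning of the model is unlikely to help much.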