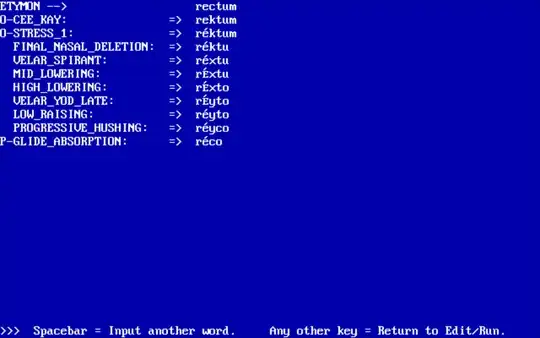

So far, I have found PHONO33 for DOS, which is quite an old yet impressive program, though it is overly strict and simplistic: it applies rules in a strict order, which results in weird outcomes ×D
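To make the ordering problem concrete, here is a minimal Python sketch. It assumes sound changes are written as regular-expression rewrites, which is a made-up notation and not PHONO33's actual model format. It shows the same two hypothetical rules giving different results depending on which one applies first.

```python
import re

# Hypothetical rules as (name, regex pattern, replacement) triples.
# They only illustrate ordering effects; they are not PHONO33 rules.
VOWELS = "aeiou"
RULES = [
    ("intervocalic voicing", rf"(?<=[{VOWELS}])t(?=[{VOWELS}])", "d"),
    ("final vowel loss", rf"[{VOWELS}]$", ""),
]

def apply_in_order(word, rules):
    """Apply each rule once to the whole word, strictly in list order."""
    for _name, pattern, replacement in rules:
        word = re.sub(pattern, replacement, word)
    return word

print(apply_in_order("pata", RULES))                  # pad
print(apply_in_order("pata", list(reversed(RULES))))  # pat
```

With voicing first, *pata* becomes *pad*. With vowel loss first, the *t* is no longer between vowels, so voicing never applies and the result is *pat* (vowel loss bleeds voicing). A tool with one fixed order can only ever return one of these.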

It includes models for Pig Latin and Spanish, but I'm not sure how much traction this software actually gained or what other models exist for it. The model format definitely looks ad hoc and incompatible with anything else.

Another such tool is sca², a rather simple playground that requires manual fiddling with uncoordinated lexicons and rule sets.

And finally, there is the DiaDM project, which I found here on Linguistics SE. It's probably the closest to what I'm looking for: a large database with flexible tooling oriented toward collaboration by many contributors, attempting to collect data on many language families. Unfortunately, the project seems to have stalled despite existing for more than a decade, the datasets are far from comprehensive, and the UI is quite rough and still uses deprecated Flash in some places. It also seems that editor access is restricted and all the data are kept in a closed database with no export tools, which means they're endangered and may be lost if something happens to the website.

Am I missing any other decent projects?

As mentioned, I have a vision of an ideal system for that purpose, which would be characterized by:

- Open source and open data formats, so the data can be reused in other projects or imported from them. Do any de facto standard formats actually exist in this area? I'm not even sure there is an agreed-upon format for expressing phonological rules in a machine-readable way.

- High flexibility, including the ability to describe uncertain rule order, uncertain conditions under which rules apply, uncertain word origins and borrowings, etc. Whenever possible, multiple paths and variants should be provided. The system should be able to take actually attested word changes, check whether they fit the formal rules, expose flaws in current theories and help come up with more accurate ones.

- Metadata on everything: authors, presumed date ranges, source works, assumed certainty, hypothetical relations, etc., etc.

- Being suitable both for expressing and systematizing existing knowledge of historical linguistics and for new research. Most of the simpler tools I've seen focus on the latter, while existing knowledge of sound shifts is mostly written down in a form pleasant to humanities scholars, dating from before prominent scientists like Zaliznyak and Chomsky turned linguistics into an exact science. I'd also like to instantly generate visual charts from existing data to impress mere mortals with how deeply related our seemingly different languages are.
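A rough Python sketch of what the first three points might look like in practice. The `SoundChange` class and its fields are hypothetical, not an existing standard. Each rule carries its metadata and can be serialized to plain JSON. When the relative order of rules is uncertain, every ordering is tried and the outputs are grouped, so they can be compared against an attested form.

```python
import json
import re
from dataclasses import asdict, dataclass
from itertools import permutations
from typing import Optional, Tuple

@dataclass(frozen=True)
class SoundChange:
    """Hypothetical machine-readable sound change with attached metadata."""
    name: str
    pattern: str             # regular expression matched against the word
    replacement: str
    source: str = "unknown"  # bibliographic source of the claim
    certainty: float = 1.0   # subjective confidence in 0..1 (stored, not used below)
    date_range: Tuple[Optional[int], Optional[int]] = (None, None)  # presumed dating

    def apply(self, word: str) -> str:
        return re.sub(self.pattern, self.replacement, word)

def to_json(rule: SoundChange) -> str:
    """Serialize a rule, metadata included, to plain JSON for reuse elsewhere."""
    return json.dumps(asdict(rule), ensure_ascii=False)

def derive(word: str, ordering) -> str:
    """Apply the rules in the given order."""
    for rule in ordering:
        word = rule.apply(word)
    return word

def variants(word: str, unordered_rules) -> dict:
    """Try every order of rules whose relative chronology is unknown and map
    each distinct output to the orderings (by rule name) that produce it."""
    result = {}
    for ordering in permutations(unordered_rules):
        output = derive(word, ordering)
        result.setdefault(output, []).append(tuple(r.name for r in ordering))
    return result

# Hypothetical example rules; the metadata values are placeholders.
RULES = [
    SoundChange("intervocalic voicing", r"(?<=[aeiou])t(?=[aeiou])", "d",
                source="hypothetical example", certainty=0.9),
    SoundChange("final vowel loss", r"[aeiou]$", "", certainty=0.7),
]

print(to_json(RULES[1]))
# Which orderings reproduce a (hypothetical) attested form "pad"?
print(variants("pata", RULES).get("pad", []))
# [('intervocalic voicing', 'final vowel loss')]
```

Brute-forcing permutations grows factorially, so this only works for a handful of rules with unknown relative order. A real system would presumably need explicit partial-order constraints between rules instead.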

I believe such a system would revolutionize computational historical linguistics much as tools like Wolfram Mathematica/Alpha and MATLAB revolutionized computational science. How close is existing tooling to that?