I have a website with a lot of web pages and images. I created sitemaps (grouped into a single sitemap index file) and submitted them to Google, but indexing is disappointingly slow:

The sitemaps were submitted on Feb. 4th, and processing started on Feb. 8th. Indexed image counts since then:

| Date | Images indexed |
|------------|-------------|
| 02/09/2014 | 187 |
| 02/10/2014 | 956 |
| 02/11/2014 | 1180 |
| 02/12/2014 | 1196 |
| 02/13/2014 | 1198 |
| 02/14/2014 | 1192 (!!!) |
| 02/15/2014 | 561 (!!!) |
| 02/16/2014 | 1144 |
| 02/17/2014 | 1144 |

So image indexing behaves strangely: the count climbed quickly to about 1,200, dropped to 561 on Feb. 15th, and then recovered to 1,144.

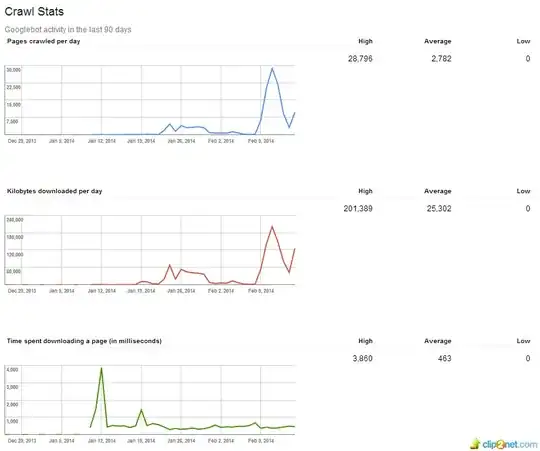

However, the crawling statistics look much more promising.

Do I just need to wait longer, or should I take some action to help Google index my website correctly?

… /robots.txt file at all, which means that there is no sitemap reference there either. The picture of the world significantly changed in my mind... – Roman Matveev Feb 17 '14 at 18:07

"Google doesn't guarantee that we'll index all of your images or use all of the information in your Sitemap." – dan Feb 17 '14 at 18:11
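The first comment notes that the site has no robots.txt, so nothing on the site itself advertises the sitemap index. A sitemap submitted directly to Google is still processed, so this is not necessarily the cause of the slow image indexing, and as the second comment points out, Google does not promise to index everything listed in a sitemap. Declaring the index with a `Sitemap:` line in robots.txt (part of the sitemaps.org protocol) is still cheap to do. Below is a minimal Python sketch using only the standard library. The domain `https://example.com` and the file names `sitemap-pages.xml`, `sitemap-images.xml` and `sitemap_index.xml` are hypothetical placeholders, not the asker's actual files, and `RobotFileParser.site_maps()` requires Python 3.8+.

```python
"""Sketch: build a sitemap index and a robots.txt that advertises it.

All URLs and file names below are hypothetical placeholders.
"""
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITE = "https://example.com"  # hypothetical domain
SITEMAPS = ["sitemap-pages.xml", "sitemap-images.xml"]  # hypothetical sitemap files
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"  # sitemaps.org namespace

# Serialize the sitemaps.org namespace as the default xmlns (no "ns0:" prefix).
ET.register_namespace("", NS)


def build_sitemap_index(site, sitemap_files):
    """Return the XML text of a <sitemapindex> with one <loc> per sitemap file."""
    root = ET.Element(f"{{{NS}}}sitemapindex")
    for name in sitemap_files:
        entry = ET.SubElement(root, f"{{{NS}}}sitemap")
        ET.SubElement(entry, f"{{{NS}}}loc").text = f"{site}/{name}"
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_robots_txt(site, index_name="sitemap_index.xml"):
    """Return robots.txt text that allows all crawling and points to the index."""
    return (
        "User-agent: *\n"
        "Disallow:\n"  # an empty Disallow means nothing is blocked
        "\n"
        f"Sitemap: {site}/{index_name}\n"
    )


if __name__ == "__main__":
    print(build_sitemap_index(SITE, SITEMAPS))
    robots = build_robots_txt(SITE)
    print(robots)

    # Parse the generated robots.txt the way a crawler library would and
    # confirm that the Sitemap directive is picked up.
    parser = RobotFileParser()
    parser.parse(robots.splitlines())
    print(parser.site_maps())
```

The index file would be uploaded to the web root next to the individual sitemaps, and the robots.txt text saved as `/robots.txt`. This only makes the sitemap discoverable; it does not change how quickly or how completely Google decides to index the images.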