The crawl report shows this: "HTTP (1) In Sitemaps (5) Not found (993) Restricted by robots.txt (487,544)"

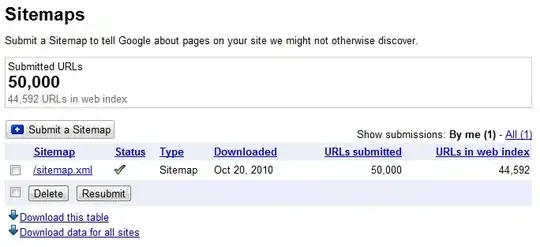

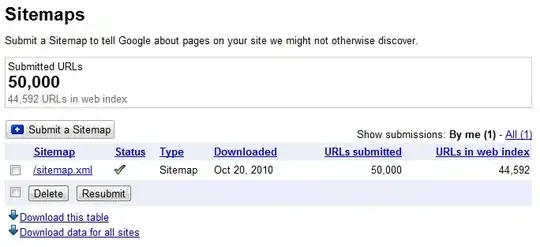

So the discrepancy between 50,000 and 44,592 isn't coming from that. Are there any obvious reasons?

I think it is simply that Google does not necessarily index every URL you submit. A submitted sitemap is advisory; it is then up to the search engines to decide whether those URLs should be (or are worthy of being) indexed.

Or maybe Google just hasn't gotten round to checking/indexing those other pages yet? By the look of your screenshot, you only submitted the sitemap today?!

The difference lies in Google's priorities. You may have pages in your sitemap, but for whatever reason (lack of incoming links to deep content, lack of in-site links, delays in reporting index data, et cetera) Google either hasn't indexed the content or isn't showing you what's actually indexed.

We're aware that we sometimes return erroneous estimates for the number of results that return for a query, and we're working to improve these estimates.

Features: Incorrect search results count

Given that Google doesn't make it a priority to show you exactly how many pages are in its index for a given search, I'd say it's just a matter of Google failing to show you an accurate picture of its index.

Restricted by robots.txt (487,544): can't the 5,408 missing URLs be in there? – Chouchenos Oct 21 '10 at 07:52
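
One way to check the robots.txt question raised in this comment is to test the sitemap URLs against the robots.txt rules locally. The following is only a rough sketch using Python's standard `urllib.robotparser`. The robots.txt content, the example.com URLs, and the `blocked_urls` helper are all made up for illustration, and this parser is not guaranteed to match Googlebot's own interpretation of the rules.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes the robots.txt content as a list of lines.
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical robots.txt and sitemap URLs, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

sitemap_urls = [
    "http://www.example.com/index.html",
    "http://www.example.com/private/page.html",
]

blocked = blocked_urls(robots_txt, sitemap_urls)
print(blocked)

# The gap discussed in the question: submitted minus indexed.
print(50_000 - 44_592)
```

If the list of blocked sitemap URLs comes back empty for the real sitemap, robots.txt can be ruled out as the cause of the gap, which leaves the explanations given in the answers (indexing is not guaranteed, or the reported counts lag or are estimates).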