Unfortunately, our hosting provider experienced 100% data loss, so I've lost all content for two hosted blog websites:

(Yes, yes, I absolutely should have done complete offsite backups. Unfortunately, all my backups were on the server itself. So save the lecture; you're 100% absolutely right, but that doesn't help me at the moment. Let's stay focused on the question here!)

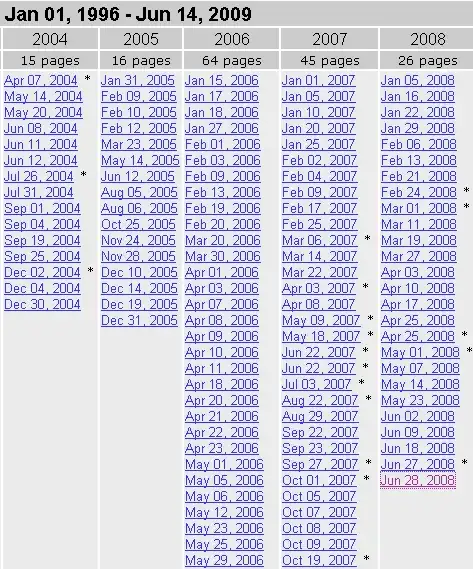

I am beginning the slow, painful process of recovering both sites from web crawler caches.

There are a few automated tools, like Warrick, for recovering a website from web crawler caches (Yahoo, Bing, Google, etc.), but I had some bad results using Warrick:

- My IP address was quickly banned from Google for using it

- I got lots of 500 and 503 errors and "waiting 5 minutes…" messages

- Ultimately, I can recover the text content faster by hand
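
The ban and the 500/503 errors suggest the tool was requesting pages faster than the caches would tolerate. Below is a minimal sketch of a slower fetch loop. It assumes a pluggable `fetch` function (hypothetical, not part of Warrick) that returns a status code and a body. The 10-second starting delay and the doubling backoff are arbitrary assumptions, not published rate limits.

```python
import time
from typing import Callable, Optional, Tuple

# Treat these as "server busy, try again later"; anything else is final.
RETRYABLE = {500, 503}


def polite_fetch(
    url: str,
    fetch: Callable[[str], Tuple[int, bytes]],
    delay: float = 10.0,
    max_retries: int = 4,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[bytes]:
    """Fetch one cached page slowly, doubling the pause after each 500/503.

    `fetch` is a hypothetical stand-in for your HTTP client: it takes a URL
    and returns (status_code, body). Returns None if the page can't be had.
    """
    wait = delay
    for _ in range(max_retries + 1):
        sleep(wait)  # pause before every request, not only after errors
        status, body = fetch(url)
        if status == 200:
            return body
        if status not in RETRYABLE:
            return None  # e.g. 404: this cache never had the page
        wait *= 2  # back off harder each time the server pushes back
    return None  # still failing after max_retries retries
```

Feeding it one URL at a time keeps the request rate low. I can't promise that any particular delay avoids a ban, though.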

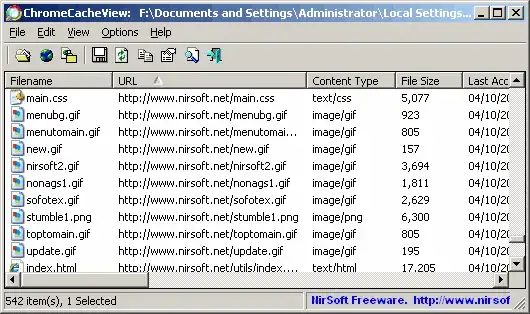

I've had much better luck working from a list of all the blog posts: I click through to the Google cache for each one and save the page as HTML. There are a lot of blog posts, but not that many, and I figure I deserve some self-flagellation for not having a better backup strategy. The important thing is that this works. I can definitely get the text of the pages out of the Internet caches, and based on what I've done so far, I'm confident I can recover all the lost blog post text and comments.
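
Even when an image itself is gone, the saved HTML still records which image URLs each post used. That gives a checklist of what to hunt for. Below is a minimal sketch using Python's standard `html.parser`. It assumes the saved pages sit in one folder as `.html` files. It also assumes a hypothetical `base_url`, the post's original address, so that relative `src` paths resolve to the URLs the caches would have stored. A cached copy may have rewritten some `src` values, so check the output by eye.

```python
from __future__ import annotations

from html.parser import HTMLParser
from pathlib import Path
from urllib.parse import urljoin


class ImgCollector(HTMLParser):
    """Collects the src of every <img> tag, resolved against a base URL."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.srcs: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing <img ... /> tags.
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(urljoin(self.base_url, src))


def image_urls(html: str, base_url: str) -> list[str]:
    parser = ImgCollector(base_url)
    parser.feed(html)
    parser.close()
    # dict.fromkeys drops duplicates while keeping first-seen order.
    return list(dict.fromkeys(parser.srcs))


def scan_saved_pages(folder: str, base_url: str) -> dict[str, list[str]]:
    """Map each saved .html file name to the image URLs it references."""
    return {
        p.name: image_urls(p.read_text(encoding="utf-8", errors="replace"), base_url)
        for p in sorted(Path(folder).glob("*.html"))
    }
```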

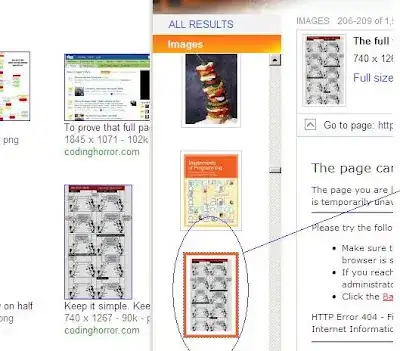

However, the images that go with each blog post are proving…more difficult.

Any general tips for recovering website pages from Internet caches? In particular, where can I find archived copies of the images from those pages?

(And, again, please, no backup lectures. You're totally, completely, utterly right! But being right isn't solving my immediate problem… Unless you have a time machine…)

http://www.waybackmachine.org/

– Dec 17 '09 at 15:38
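
The Wayback Machine linked above can be queried one URL at a time for the images themselves. This sketch assumes the public availability API at `https://archive.org/wayback/available`. As I understand it, that API takes `url` and an optional `timestamp` and returns JSON whose `archived_snapshots.closest` entry holds the nearest capture. As far as I know, adding `id_` after a snapshot timestamp requests the original file without the Wayback toolbar or rewritten links, which is what you want when saving images. The helper names are hypothetical, and `lookup` needs network access.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

AVAILABILITY_API = "https://archive.org/wayback/available"


def availability_query(url: str, timestamp: str = "") -> str:
    """Build a query URL for the Wayback Machine availability API."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp  # YYYYMMDDhhmmss, or a prefix of it
    return AVAILABILITY_API + "?" + urlencode(params)


def closest_snapshot(response_json: str) -> Optional[str]:
    """Return the closest archived snapshot URL, or None if none is reported."""
    data = json.loads(response_json)
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


def raw_snapshot_url(timestamp: str, original_url: str) -> str:
    """Snapshot URL with the id_ flag, asking for the original bytes."""
    return f"https://web.archive.org/web/{timestamp}id_/{original_url}"


def lookup(url: str, timestamp: str = "") -> Optional[str]:
    """Network call: ask the availability API about one image URL."""
    with urlopen(availability_query(url, timestamp), timeout=30) as resp:
        return closest_snapshot(resp.read().decode("utf-8"))
```

You could run `lookup` over the image list from each saved post, pausing between calls, to see which images survive in the archive. A `None` result means the archive reports no capture for that URL.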