My sitemap contains 50K URLs (7.8 MB) and uses the following URL syntax:

<?xml version="1.0" encoding="UTF-8"?>

<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.sitemaps.org/schemas/sitemap/0.9 http://www.sitemaps.org/schemas/sitemap/0.9/sitemap.xsd">

<url>

<loc> https://www.ninjogos.com.br/resultados?pesquisa=vestido, maquiagem, </loc> <lastmod> 2019-10-03T17:12:01-03:00 </lastmod>

<priority> 1.00 </priority>

</url>

</urlset>
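For reference, the URL count and file size can be checked against the Sitemap protocol limits (50,000 URLs and 50 MB uncompressed per file). This is only a minimal sketch: the function names are made up for illustration, and it assumes the sitemap is available as a local file.

```python
import os
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000                 # protocol limit per sitemap file
MAX_BYTES = 50 * 1024 * 1024      # 50 MB uncompressed (52,428,800 bytes)


def sitemap_stats(path):
    """Return (url_count, size_in_bytes) for a local sitemap file.

    The file is streamed with iterparse so a large sitemap does not
    have to be held in memory as a full tree of populated elements.
    """
    count = 0
    for _event, elem in ET.iterparse(path, events=("end",)):
        if elem.tag == f"{{{SITEMAP_NS}}}url":
            count += 1
            elem.clear()  # drop the children of each finished <url> entry
    return count, os.path.getsize(path)


def within_limits(path):
    """True if the file respects both protocol limits (hypothetical helper)."""
    count, size = sitemap_stats(path)
    return count <= MAX_URLS and size <= MAX_BYTES
```

A file with exactly 50,000 URLs at 7.8 MB would pass both checks, so the limits alone would not explain the error.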

The problems are:

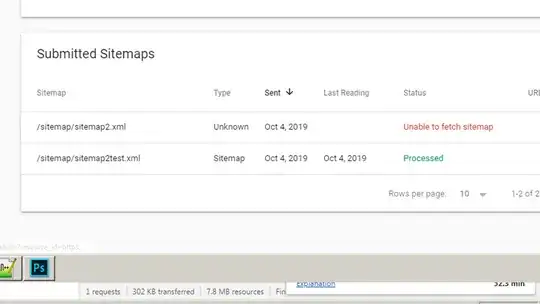

• Search Console says "Sitemap could not be read";

• The Sitemap takes 1 hour to load and Chrome stops working;

• In Firefox, the Sitemap downloaded in 1483 ms and fully loaded after 5 minutes;

Things I've tried without success:

• Disabled GZip compression;

• Deleted my .htaccess file;

• Created a test Sitemap with 1K URLs and the same syntax and submitted it to Search Console; it worked, but the 50K URL Sitemap still shows "unable to fetch Sitemap";

• Tried to inspect the URL directly, but it returned an error and asked me to try again later, while the 1K URL Sitemap worked;

• Validated the Sitemap with five different validators (Yandex, etc.), and all of them passed without any errors or warnings.
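The 1K URL test Sitemap mentioned above can be reproduced roughly like this. It is a minimal sketch, not the exact script used: the function name and file paths are hypothetical, and the output drops the `xsi:schemaLocation` attribute from the root element.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def write_test_sitemap(src_path, dst_path, limit=1000):
    """Copy the first `limit` <url> entries of src_path into a new sitemap.

    Returns the number of entries written.
    """
    # Keep the sitemap namespace as the default (unprefixed) namespace on output.
    ET.register_namespace("", SITEMAP_NS)

    root = ET.parse(src_path).getroot()
    urls = root.findall(f"{{{SITEMAP_NS}}}url")[:limit]

    # Build a fresh <urlset> holding only the selected entries.
    subset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    subset.extend(urls)

    ET.ElementTree(subset).write(dst_path, encoding="UTF-8", xml_declaration=True)
    return len(urls)
```

Calling `write_test_sitemap("sitemap.xml", "sitemap-test.xml", limit=1000)` (both file names are placeholders) produces a smaller file with the same entry syntax, which makes it easier to compare how Search Console treats the two sizes.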

Any ideas?
