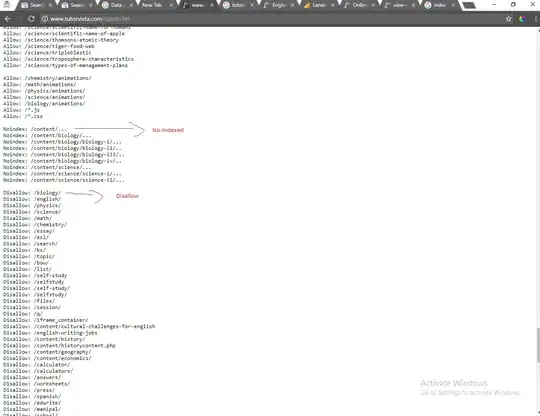

Note that Noindex is not part of the original robots.txt specification. Google supported it as experimental feature (see: How does “Noindex:” in robots.txt work?), but it’s not clear if that is still the case (as they didn’t document it to begin with). But let’s assume it is.

Your robots.txt has two problems.

Empty lines

A record must not contain empty lines. Empty lines are used to separate records.

A conforming bot (which doesn’t identify as Googlebot-Image/Adsbot-Google/Mediapartners-Google) uses this record:

User-agent: *

Allow: /

So none of the following Disallow/Allow/Noindex lines apply.

Of course a bot may try to "fix" this and interpret the following lines to be part of this record (i.e., ignoring the blank lines), but the robots.txt spec doesn’t define this, so I wouldn’t count on it.

... in Noindex values

If Noindex works like Disallow (which we don’t know for sure, as Noindex is not specified/documented, but I guess it wouldn’t make sense to specify it differently), the ... you appended to the values mean that ... must appear in the URLs you want to noindex.

The line

Noindex: /content/biology/...

would apply to a URL like /content/biology/.../foobar, but not to a URL like /content/biology/foobar nor /content/biology/.

So if you want every URL whose paths starts with /content/biology/ to be noindexed, you would have to specify:

Noindex: /content/biology/

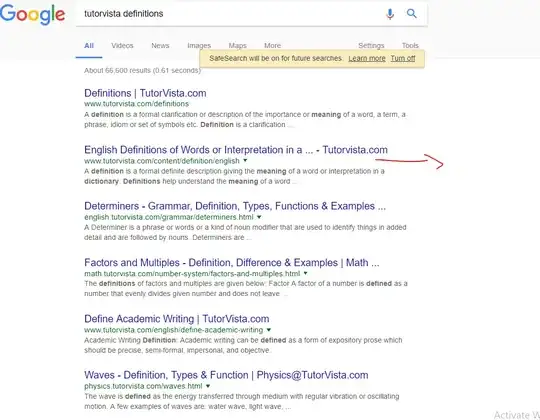

Allow: /biology/is the justification for/biology/abdominal-cavity-organsbeing allowed rather than the root, and because these rules come before the exact disallow rule. – Zhaph - Ben Duguid Sep 12 '17 at 07:14Allow: /biology/animations/above the disallow block, so that will take precedence. – Zhaph - Ben Duguid Sep 12 '17 at 07:17