I'm reading a text file into LaTeX that contains two sets of data separated by an empty line. I'm trying to typeset both sets in one table, with the second set separated from the first by `\hline`. Is this possible?

Here is my data (sorry, I don't know how to define it inside the document to make a true MWE):

```
10,100
5,100
2,99
0.85,98
0.425,92
0.25,60
0.15,31
0.075,9

0.0274,9.4
0.0176,9.1
0.0107,8.0
0.007,6.9
0.0059,5.6
0.0031,3.7
0.0013,2.5
```
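One way to make the example self-contained is the `filecontents*` environment, which has been part of the LaTeX kernel since the 2019-10-01 release and writes the data to a file at compile time (the starred form writes no comment header into the file). A minimal sketch, assuming the file is simply named `data.csv` in the working directory as a stand-in for `../01data/data.csv`; the body only confirms that `readarray` can read the file:

```latex
% Write the data file at compile time; "data.csv" is a stand-in name
% for ../01data/data.csv. [overwrite] replaces any existing copy.
\begin{filecontents*}[overwrite]{data.csv}
10,100
5,100
2,99
0.85,98
0.425,92
0.25,60
0.15,31
0.075,9

0.0274,9.4
0.0176,9.1
0.0107,8.0
0.007,6.9
0.0059,5.6
0.0031,3.7
0.0013,2.5
\end{filecontents*}
\documentclass{standalone}
\usepackage{readarray}
\begin{document}
\readarraysepchar{,}
% \readdef reads the file and defines \nrows and \ncols
\readdef{data.csv}\data
Columns per record: \ncols
\end{document}
```

The rest of the MWE can then use `\readdef{data.csv}\data` unchanged.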

Here is my MWE:

```latex
\documentclass[letterpaper,11pt]{standalone}
\usepackage{readarray}
\begin{document}
\readarraysepchar{,}
\renewcommand\typesetplanesepchar{\\\hline}
\renewcommand\typesetrowsepchar{\\}
\renewcommand\typesetcolsepchar{&}
\readdef{../01data/data.csv}\data
\readarray\data\array[-,\nrows,\ncols]
\centering
\begin{tabular}{c|c}
Sieve & Passing \\
size  &         \\
(mm)  & (\%)    \\
\hline
\typesetarray\array[1,\nrows,\ncols]\\
\hline
\typesetarray\array[2,\nrows,\ncols]\\
\end{tabular}
\end{document}
```

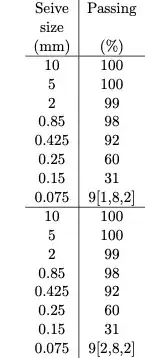

Here is the output

The output shows only the first set of data, not the second. It also displays some unwanted data at the end. Can someone please help me understand what is going on?

`readarray` is not the ideal tool for automating this task, because your two datasets contain differing numbers of rows (8 in the first, 7 in the second). A 3-D array in `readarray` is required to have equal numbers of rows and columns in each plane. – Steven B. Segletes May 25 '23 at 17:35
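Given that constraint, one possible workaround is to drop the 3-D array and keep each dataset in its own file, reading each into a separate 2-D array so the two sets may have different numbers of rows. A minimal sketch, assuming hypothetical file names `set1.csv` and `set2.csv` (written with `filecontents*` so the example is self-contained) and hypothetical array names `\setA` and `\setB`, chosen to avoid any clash with LaTeX's own `\array` command used by the `array` environment:

```latex
% Hypothetical files set1.csv / set2.csv: one dataset per file, so each
% can be read as its own 2-D array with its own row count.
\begin{filecontents*}[overwrite]{set1.csv}
10,100
5,100
2,99
0.85,98
0.425,92
0.25,60
0.15,31
0.075,9
\end{filecontents*}
\begin{filecontents*}[overwrite]{set2.csv}
0.0274,9.4
0.0176,9.1
0.0107,8.0
0.007,6.9
0.0059,5.6
0.0031,3.7
0.0013,2.5
\end{filecontents*}
\documentclass{standalone}
\usepackage{readarray}
\readarraysepchar{,}
% Inside tabular, rows end with \\ and columns are separated by &
\renewcommand\typesetrowsepchar{\\}
\renewcommand\typesetcolsepchar{&}
\begin{document}
% \readdef redefines \ncols for each file just read, so each
% 2-D \readarray ([-,columns]) picks up the right column count.
\readdef{set1.csv}\dataA
\readarray\dataA\setA[-,\ncols]
\readdef{set2.csv}\dataB
\readarray\dataB\setB[-,\ncols]
\begin{tabular}{c|c}
Sieve & Passing \\
size  &         \\
(mm)  & (\%)    \\
\hline
\typesetarray\setA \\
\hline
\typesetarray\setB
\end{tabular}
\end{document}
```

The `\hline` between the sets is written by hand here rather than through `\typesetplanesepchar`, since the two sets are no longer planes of a single array.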