I bought a 24-core processor (AMD Ryzen Threadripper 2970WX) for CPU-intensive workloads like converting large media files and rendering video effects. However, total CPU usage generally sits at only 35-55%, even during large multi-core jobs that run for many hours, such as:

- Rendering video effects with Premiere Pro (with or without graphics acceleration enabled)

- Exporting a huge file with Adobe Media Encoder (with or without graphics acceleration enabled)

- Converting a huge video file with Handbrake

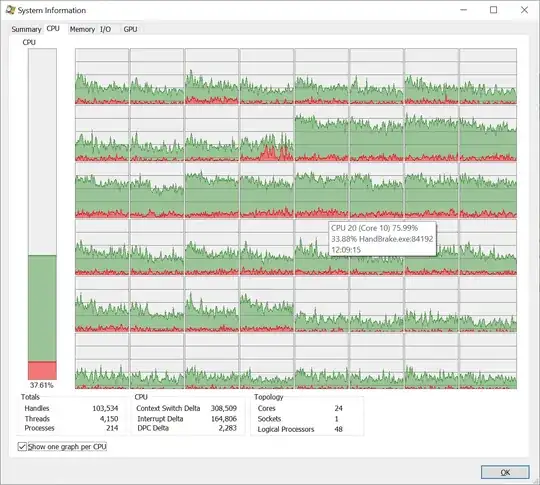

Below are screenshots taken from Process Explorer and Core Temp while converting a huge video file with Handbrake.exe. By hovering over the individual core histograms, I can see that Handbrake.exe is the main consumer of every core, but it seems to be limited to about 33-34% usage per core. It's not completely consistent, though: a few minutes ago, Handbrake's per-core usage rose to about 40% for a while.
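To put those percentages in perspective, here is a rough arithmetic sketch of how many fully busy processors a given usage figure would correspond to. The function name `busy_equivalent` and the constant `PROCESSORS` are made up for illustration. The count of 24 is the core count mentioned above; it is an assumption that the histograms show one graph per core, and the value would be 48 if they count SMT threads instead.

```python
def busy_equivalent(usage_percent: float, processors: int) -> float:
    """Convert a usage percentage into the equivalent number of fully busy processors."""
    return usage_percent / 100.0 * processors


# Assumption: one histogram per physical core (24). Use 48 if the tool
# is counting SMT threads rather than cores.
PROCESSORS = 24

# Usage figures quoted in this post: ~33% and ~40% per core for Handbrake,
# 35-55% overall, and 100% under Cinebench.
for pct in (33, 35, 40, 55, 100):
    equivalent = busy_equivalent(pct, PROCESSORS)
    print(f"{pct:>3}% usage is roughly {equivalent:.1f} of {PROCESSORS} processors fully busy")
```

This only rescales the numbers already reported; it says nothing about why the encoder stops at that level.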

The same is true when using Adobe Media Encoder or Premiere Pro to do a large render job. Process Explorer looks about the same.

Is my CPU being under-utilised, and if so, what can I do to un-throttle it? Or is it just something to do with how Process Explorer presents the information, and in reality I'm using the full capacity? I don't know much about CPUs; I just want to make sure I'm getting my money's worth!

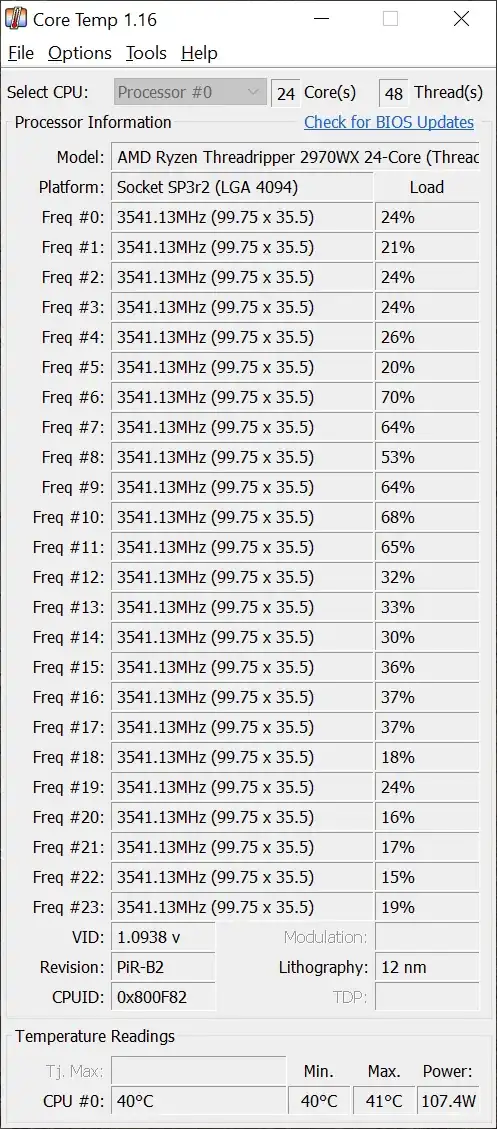

I considered whether it could be thermal throttling, but Core Temp (2nd screenshot) shows the temperature hovering around 40°C, which doesn't seem high to me.

UPDATE: I just discovered Cinebench and ran it, and it immediately maxed out all 24 cores at 100% usage (the CPU temperature reached 64°C). I guess that rules out thermal throttling. So why are Handbrake and Adobe Media Encoder (the main apps I need to be fast) apparently throttled?