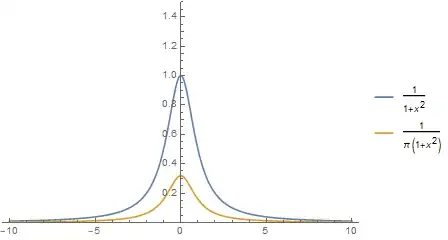

As shown at *How does entropy depend on location and scale?*, the integral is easily reduced (via an appropriate change of variable) to the case $\gamma=1$, for which

$$H = \int_{-\infty}^{\infty} \frac{\log(1+x^2)}{1+x^2}\,dx.$$

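Letting $x=\tan(\theta)$ implies $dx = \sec^2(\theta)d\theta$ whence, since $1+\tan^2(\theta) = 1/\cos^2(\theta)$, the factor $dx/(1+x^2)$ reduces to $d\theta$ and $\log(1+x^2) = -2\log(\cos(\theta))$. Because the integrand is even in $\theta$,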

$$H = -2\int_{-\pi/2}^{\pi/2} \log(\cos(\theta))d\theta = -4\int_{0}^{\pi/2} \log(\cos(\theta))d\theta .$$

There is an elementary way to compute this integral. Write $I= \int_{0}^{\pi/2} \log(\cos(\theta))d\theta$. Because $\cos(\theta) = \sin(\pi/2-\theta)$, the graph of $\cos$ on the interval $[0, \pi/2]$ is just the reflection of the graph of $\sin$ about the midpoint $\pi/4$, so it is also the case that $I= \int_{0}^{\pi/2} \log(\sin(\theta))d\theta.$ Add the integrands:

$$\log\cos(\theta) + \log\sin(\theta) = \log(\cos(\theta)\sin(\theta)) = \log(\sin(2\theta)/2) = \log\sin(2\theta) - \log(2).$$

Therefore

$$2I = \int_0^{\pi/2} \left(\log\sin(2\theta) - \log(2)\right)d\theta =-\frac{\pi}{2} \log(2) + \int_0^{\pi/2} \log\sin(2\theta) d\theta.$$

Changing variables to $t=2\theta$ in the integral shows that

$$\begin{aligned}\int_0^{\pi/2} \log\sin(2\theta)\, d\theta &= \frac{1}{2}\int_0^{\pi} \log\sin(t)\, dt = \frac{1}{2}\left(\int_0^{\pi/2} + \int_{\pi/2}^\pi\right)\log\sin(t)\,dt \\ &= \frac{1}{2}(I+I) = I\end{aligned}$$

because $\sin$ on the interval $[\pi/2,\pi]$ merely retraces the values it attained on the interval $[0,\pi/2]$. Consequently $2I = -\frac{\pi}{2} \log(2) + I,$ giving the solution $I = -\frac{\pi}{2} \log(2)$. We conclude that

$$H = -4I = 2\pi\log(2).$$
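As a sanity check on the elementary derivation, the values of $I$ and $H$ can be approximated numerically. The following is a minimal sketch using only the Python standard library; the helper `midpoint` and the choice of `n` are illustrative assumptions, not part of the original argument. The midpoint rule is used because it never evaluates the integrand at the endpoints, where $\log\cos$ and $\log\sin$ diverge.

```python
import math

def midpoint(f, a, b, n=200_000):
    """Composite midpoint rule on [a, b]; never evaluates f at a or b."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))

half_pi = math.pi / 2

# I computed both ways: with log(cos) and with log(sin).
I_cos = midpoint(lambda t: math.log(math.cos(t)), 0.0, half_pi)
I_sin = midpoint(lambda t: math.log(math.sin(t)), 0.0, half_pi)

# Closed forms derived in the text.
I_exact = -half_pi * math.log(2.0)
H_exact = 2.0 * math.pi * math.log(2.0)

H_numeric = -4.0 * I_cos

print("I (cos):", I_cos, " I (sin):", I_sin, " closed form:", I_exact)
print("H numeric:", H_numeric, " closed form:", H_exact)
```

The two numerical estimates of $I$ should agree with each other and with $-\frac{\pi}{2}\log(2)$ to several decimal places; the residual error comes from the integrable logarithmic singularity at one endpoint and shrinks as `n` grows.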

An alternative approach factors $1+x^2 = (1 + ix)(1-ix)$ to re-express the integrand as

$$\frac{\log(1+x^2)}{1+x^2} = \frac{1}{2}\left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1+ix) + \frac{1}{2}\left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1-ix).$$

The integral of the first term on the right can be expressed as the limiting value as $R\to\infty$ of a contour integral from $-R$ to $+R$ followed by tracing the lower semi-circle of radius $R$ back to $-R.$ For $R\gt 1$ the region bounded by this path contains exactly one pole of the integrand, at $x=-i$ (the pole of $1/(1+ix)$ and the branch point of $\log(1+ix)$ both lie at $x=i$ in the upper half-plane). Since $1/(1-ix) = i/(x+i),$ the residue there is

$$\operatorname{Res}_{x=-i}\left(\left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1+ix)\right) = i\left.\log(1 + ix)\right|_{x=-i} = i\log(2),$$

whence (because this is a negatively oriented path) the Residue Theorem says

$$\oint \left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1+ix) \mathrm{d}x = -2\pi i (i\log(2)) = 2\pi\log(2).$$

Because the integrand on the semicircle is $O(\log(R)/R^2)$ while the arc has length $\pi R,$ the contribution of the arc is $O(\log(R)/R),$ which grows vanishingly small as $R\to\infty.$ In the limit we obtain

$$\int_{-\infty}^\infty \frac{1}{2}\left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1+ix) \mathrm{d}x = \pi\log(2).$$

The second term of the integrand is equal to the first (use the substitution $x\to -x$), whence $H=2(\pi\log(2)) = 2\pi\log(2),$ just as before.
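The contour-integral result can also be checked numerically. The sketch below is an illustration under stated assumptions rather than part of the original argument: it reuses the substitution $x=\tan(\theta)$, under which the first term $\frac{1}{2}\left(\frac{1}{1+ix} + \frac{1}{1-ix}\right)\log(1+ix)\,dx$ becomes $\log(1+i\tan(\theta))\,d\theta$ on $(-\pi/2, \pi/2)$, and it takes the principal branch of the logarithm via `cmath.log`. The function name `first_term_integral` and the step count `n` are hypothetical choices.

```python
import cmath
import math

def first_term_integral(n=200_000):
    """Midpoint-rule estimate of the integral of log(1 + i*tan(theta))
    over (-pi/2, pi/2), i.e. the first term after substituting x = tan(theta)."""
    h = math.pi / n
    total = 0j
    for k in range(n):
        theta = -math.pi / 2 + (k + 0.5) * h
        # Principal branch: 1 + i*tan(theta) always has real part 1 > 0.
        total += cmath.log(1 + 1j * math.tan(theta))
    return h * total

first_term = first_term_integral()
target = math.pi * math.log(2.0)  # value obtained from the Residue Theorem

print("first term:", first_term, " pi*log(2):", target)
print("H estimate:", 2 * first_term.real, " 2*pi*log(2):", 2 * target)
```

The real part should be close to $\pi\log(2)$ and the imaginary part close to zero, since the imaginary part of the integrand is odd in $\theta$; doubling the real part recovers the estimate of $H$.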