In logistic regression we find the maximum likelihood estimator, $\max_W \prod_{i} p(y_i \mid x_i)$, which in practice means maximizing the sum of the log-likelihoods. This makes sense; I understand MLE.

But... what is wrong with simply maximizing the sum of the likelihoods? In other words, if our model is a simple logistic regression on a dataset $\{(x_i, y_i)\}$ with $x_i\in \mathbb{R}^d$ and $y_i \in \{-1,1\}$, I am asking about the difference between the following two optimization problems (with and without the log):

$$\max_{W} \sum_{i : y_i = 1} \log \left(\frac{1}{1+\exp(-W^Tx_i)}\right) + \sum_{i : y_i = -1} \log \left(1 - \frac{1}{1+\exp(-W^Tx_i)}\right)$$

$$\max_{W} \sum_{i : y_i = 1} \left(\frac{1}{1+\exp(-W^Tx_i)}\right) + \sum_{i : y_i = -1} \left(1 - \frac{1}{1+\exp(-W^Tx_i)}\right)$$
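A standard derivation makes the two objectives easier to compare. Write $\sigma(z) = 1/(1+e^{-z})$ and use $1-\sigma(z) = \sigma(-z)$. Both objectives then depend only on the margins $m_i = y_i W^T x_i$:

$$L_{\log}(W) = \sum_i \log \sigma(y_i W^T x_i), \qquad L_{\text{sum}}(W) = \sum_i \sigma(y_i W^T x_i)$$

Since $\frac{d}{dm}\log\sigma(m) = 1-\sigma(m)$ and $\frac{d}{dm}\sigma(m) = \sigma(m)\bigl(1-\sigma(m)\bigr)$, the gradients are

$$\nabla_W L_{\log} = \sum_i \bigl(1-\sigma(m_i)\bigr)\, y_i x_i, \qquad \nabla_W L_{\text{sum}} = \sum_i \sigma(m_i)\bigl(1-\sigma(m_i)\bigr)\, y_i x_i$$

Consider a confidently misclassified point ($m_i \to -\infty$). Its log-loss weight $1-\sigma(m_i) \to 1$, but its no-log weight $\sigma(m_i)(1-\sigma(m_i)) \to 0$, so such points contribute almost nothing to the second gradient. The curvature also differs. Because $\frac{d^2}{dm^2}\log\sigma(m) = -\sigma(m)(1-\sigma(m)) < 0$ and $m_i$ is linear in $W$, $L_{\log}$ is concave in $W$. In contrast, $\frac{d^2}{dm^2}\sigma(m) = \sigma(m)(1-\sigma(m))(1-2\sigma(m))$ changes sign at $m = 0$, so $L_{\text{sum}}$ is in general not concave.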

I wrote some simple code to train a two-parameter model (slope and intercept) with gradient descent, and both methods work... except for two things:

- The first has a nicer loss curve, seems less sensitive to initialization, trains faster, etc.

- I know that nobody actually uses the second in practice.
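Here is a sketch of the training setup described above. It is a reconstruction under stated assumptions, not the original code:

- The data are hypothetical, symmetric, separable 1-D points.
- Both objectives are maximized by plain gradient ascent on the slope `a` and intercept `b`, with a fixed step size.
- The start `a=-5, b=0` is chosen on purpose so that every point is confidently misclassified.

```python
import numpy as np

def sigmoid(z):
    # Logistic function; fine for the moderate |z| values used here.
    return 1.0 / (1.0 + np.exp(-z))

def gradient(a, b, x, y, use_log):
    """Gradient of the objective to be MAXIMIZED w.r.t. slope a and intercept b.

    p = sigmoid(y * (a*x + b)) is the model's probability of the observed label,
    using 1 - sigmoid(z) == sigmoid(-z) for labels in {-1, +1}.
    """
    p = sigmoid(y * (a * x + b))
    # d/dm log(sigmoid(m)) = 1 - p ;  d/dm sigmoid(m) = p * (1 - p)
    weight = (1.0 - p) if use_log else p * (1.0 - p)
    return np.sum(weight * y * x), np.sum(weight * y)

def train(x, y, use_log, a=-5.0, b=0.0, lr=0.1, steps=50):
    # Plain gradient ascent from a deliberately bad start (every point misclassified).
    for _ in range(steps):
        ga, gb = gradient(a, b, x, y, use_log)
        a, b = a + lr * ga, b + lr * gb
    return a, b

# Hypothetical, linearly separable 1-D data (not the data from the plot).
x = np.array([-3.0, -2.0, -1.0, 1.0, 2.0, 3.0])
y = np.array([-1.0, -1.0, -1.0, 1.0, 1.0, 1.0])

a_log, b_log = train(x, y, use_log=True)
a_sum, b_sum = train(x, y, use_log=False)
print("with log:    slope=%.3f intercept=%.3f" % (a_log, b_log))
print("without log: slope=%.3f intercept=%.3f" % (a_sum, b_sum))
```

Under these assumptions, the log version reaches a correct classifier within a handful of steps. The no-log version's slope barely moves in 50 steps. Its per-point gradient weight $p(1-p)$, where $p$ is the probability assigned to the true label, is tiny for badly misclassified points.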

Could someone give both an intuitive explanation and a mathematical one? I have read various blog posts about "scoring rules" (e.g., https://yaroslavvb.blogspot.com/2007/06/log-loss-or-hinge-loss.html), but I still don't get it. What properties does the log have in this context that make it better behaved? I understand all the connections between log loss, cross-entropy, MLE, etc.; what I don't understand is why the second option (no log) is bad.

In a practical sense, I have a vague intuition that the log stretches the range of each term from $[0,1]$ to $(-\infty, 0]$. I also have some intuition that accuracy (or error) should be thought of on a log scale, because going from 80% to 90% accuracy is the same relative improvement as going from 90% to 95% (both halve the error rate). Anyway, sorry for rambling; I would like to have some stronger intuition and some formal math/stats to back this up.
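A few illustrative numbers make the two intuitions above concrete. The probabilities below are arbitrary examples. The per-example no-log penalty $1-p$ is bounded by 1. In contrast, $-\log p$ grows without bound as the probability $p$ assigned to the true label goes to 0. Both accuracy improvements mentioned halve the error rate, so each is a step of $\log 2$ on a log-error scale:

```python
import math

# Per-example penalty for assigning probability p to the true label (illustrative p values).
penalties = {p: (1 - p, -math.log(p)) for p in [0.9, 0.5, 0.1, 0.01, 0.001]}
for p, (no_log, with_log) in penalties.items():
    print(f"p={p:<6} 1-p={no_log:.3f}  -log(p)={with_log:.3f}")

# 80% -> 90% and 90% -> 95% accuracy both halve the error rate,
# so they are the same size step on a log scale of the error.
step_80_to_90 = math.log(0.20) - math.log(0.10)
step_90_to_95 = math.log(0.10) - math.log(0.05)
print(step_80_to_90, step_90_to_95, math.log(2))
```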

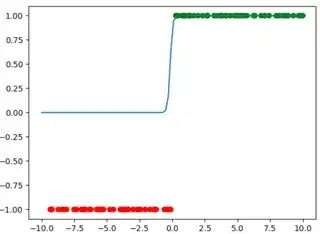

Here is the loss curve for the second (no-log) optimization on some very simple separable data. Notice how long it gets stuck in a local minimum. The other optimization finishes in very few steps. On the right are the data and the fitted logistic model.

EDIT: It seems something similar has been asked before in different words, but that question seems to ask about $p(x_i \mid W)$, i.e., generative modeling rather than classification. The top answer there explains that a sum of probabilities corresponds to "at least one event is true" instead of "all events are true", but that doesn't seem to apply in my case. If nothing else, the global minima of both loss functions (with and without the log) correspond to perfect classification.
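The last point can be checked on hypothetical separable 1-D data. There, neither optimum is attained at a finite $W$. Scaling up a perfectly separating slope $c$ keeps improving both objectives: toward 0 for the log version, and toward $n$ (the number of examples) for the no-log version. The sketch evaluates $\log\sigma(m) = -\log(1+e^{-m})$ with `np.logaddexp`, so the log objective does not round to exactly zero for large margins:

```python
import numpy as np

# Hypothetical separable data; sign(c * x) classifies every point correctly for any c > 0.
x = np.array([-3.0, -2.0, -1.0, 1.0, 2.0, 3.0])
y = np.array([-1.0, -1.0, -1.0, 1.0, 1.0, 1.0])

def objectives(c):
    m = y * (c * x)                                # margins y_i * W^T x_i with W = c
    log_obj = -np.sum(np.logaddexp(0.0, -m))       # sum of log(sigmoid(m)), computed stably
    sum_obj = np.sum(1.0 / (1.0 + np.exp(-m)))     # sum of sigmoid(m)
    return log_obj, sum_obj

for c in [1.0, 10.0, 100.0]:
    print(c, objectives(c))
```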

Also, one of the comments says "I don't like this kind of post, MLE is statistically justified, the sum of likelihoods is not". That's fair, but my goal here is to figure out how to explain things to a beginner. I just told them about linear regression, and I would like to be able to explain to them why one "obvious thing" in classification is bad: simply expressing what you want as a loss (train a model of $p(y=1\mid x)$ to output high probability for positive labels and low probability for negative labels).