The logistic regression tends toward 0 or 1 because the log-odds of the outcome is linear in the predictor, which (for a positive coefficient, like in your data) causes it to grow without bound as the predictor gets larger, and vice versa when it gets smaller. This is analogous to how ordinary linear regression can produce unreasonable predictions when it extrapolates outside of the values commonly seen in the fitting data.

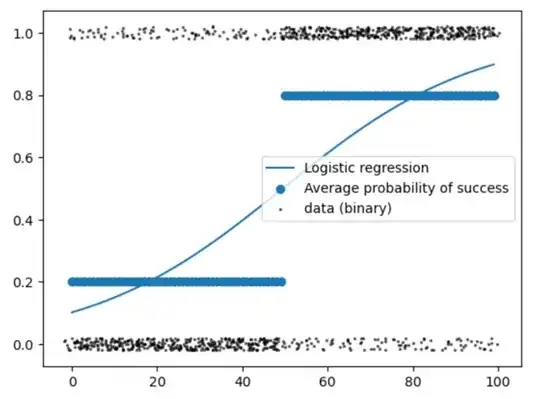

There are a variety of ways to handle this, but I think the best one is to use a generalized additive model (GAM). A GAM uses a set of flexible basis functions to develop an arbitrary smoothed curve for the linear predictor (i.e., the log-odds in a logistic regression). Here's an example that I whipped up from some data I had lying around.

In this case, the predictor has a "normal" range where the risk of the event is lower than average, and the log-odds adjustment (keep in mind that there is still an intercept term that sets the base rate) increases rapidly once you get out of that range. However, the effect saturates so that you don't get the virtual certainty of an event that you are seeing in your model. Interestingly, the risk saturates at a higher level for high values than for low.

The easiest way to fit GAMs is using the mgcv package for R. I will say that there is a bit of a learning curve for mgcv, so you need to be prepared to do some reading, but it's worth it, as in my experience GAMs produce a very reasonable model for a wide range of real-world phenomena.

aandb, @RIchardHardy's link is an answer (tl;dr adjust the link function to enforce the lower and upper bounds). If you want to estimateaandb, I could show how to do it with a general MLE estimation machine ... – Ben Bolker Jan 21 '24 at 00:58