I'm analyzing data from a study comparing 5 treatment arms: women receiving ovarian stimulation for in vitro fertilization, with stimulation started at different phases of the ovarian cycle. The efficacy endpoint was the number of oocytes retrieved.

The hypothesis of the study is that stimulation can be started at any phase of the cycle, i.e. that all treatments (whatever the starting phase) are equal. Can we say that this is an equivalence study?
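For reference, here is a standard way to write such a hypothesis as an equivalence problem. This is an illustrative formulation, not something taken from the study protocol: $\theta_k$ denotes the mean (or median) number of oocytes in arm $k$, and $\delta > 0$ is an equivalence margin such as the 0.5 oocytes mentioned below. Note that equivalence is the *alternative* hypothesis.

$$
\begin{aligned}
&H_0:\ |\theta_i - \theta_j| \ge \delta
\quad\text{vs.}\quad
H_1:\ |\theta_i - \theta_j| < \delta, \\[4pt]
&\text{TOST: reject } H_0 \text{ at level } \alpha \iff
\text{both } H_{01}: \theta_i - \theta_j \le -\delta \text{ and } H_{02}: \theta_i - \theta_j \ge \delta \text{ are rejected at level } \alpha \\
&\phantom{\text{TOST: reject } H_0 \text{ at level } \alpha} \iff
\mathrm{CI}_{1-2\alpha}(\theta_i - \theta_j) \subset (-\delta,\ \delta).
\end{aligned}
$$

With 5 arms there are $\binom{5}{2} = 10$ pairs. Under the intersection-union principle, the global claim "all arms are equivalent" can be made at level $\alpha$ only if every one of the 10 pairwise tests rejects its $H_0$ at level $\alpha$.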

Those who designed the study did not specify an equivalence margin a priori. Even with an a posteriori margin of, say, 0.5 oocytes, we still cannot draw any conclusions from this study. Keeping that margin of 0.5 oocytes, are there any statistical tools that would let us reach a conclusion, either rejecting or accepting the alternative hypothesis, without repeating the study? Are bootstrap or Bayesian methods an option?
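As an illustration of what a Bayesian version could look like, here is a minimal sketch that estimates the posterior probability that *all* arm means lie within 0.5 oocytes of each other. It rests on assumptions that are not from the study and are labeled as hypothetical: counts are Poisson within each arm, and each arm's mean has a conjugate Gamma prior with arbitrary, weakly informative parameters `a0` and `b0`. The function name `prob_all_within_margin` is also hypothetical. The output is a posterior probability of equivalence, not a p-value, and it depends on both the prior and the likelihood chosen.

```python
import numpy as np


def prob_all_within_margin(arms, margin=0.5, a0=1.0, b0=0.01,
                           draws=100_000, seed=0):
    """Monte Carlo estimate of P(max_k lambda_k - min_k lambda_k < margin | data).

    ASSUMPTIONS (hypothetical, for illustration only):
      * counts in arm k are Poisson(lambda_k), independent across women;
      * each lambda_k has a Gamma(shape=a0, rate=b0) prior.
    The Gamma prior is conjugate to the Poisson likelihood, so the posterior
    of lambda_k is Gamma(shape=a0 + sum(counts_k), rate=b0 + n_k).
    All pairwise differences lie within the margin exactly when the range
    (max - min) of the arm means does, so this is the posterior probability
    that every pair of arms is equivalent.
    """
    rng = np.random.default_rng(seed)
    samples = []
    for counts in arms:
        counts = np.asarray(counts)
        shape = a0 + counts.sum()
        scale = 1.0 / (b0 + counts.size)  # numpy parameterizes by scale = 1 / rate
        samples.append(rng.gamma(shape, scale, draws))
    lam = np.vstack(samples)  # shape: (n_arms, draws)
    spread = lam.max(axis=0) - lam.min(axis=0)
    return float(np.mean(spread < margin))


# Usage with simulated, hypothetical data (NOT the study's data):
sim_rng = np.random.default_rng(1)
sim_arms = [sim_rng.poisson(10, 50) for _ in range(5)]
print(prob_all_within_margin(sim_arms, margin=0.5))
```

If the counts are overdispersed (variance larger than the mean), a Poisson likelihood will overstate the certainty about each arm's mean; a negative binomial model would then be a more honest choice, at the cost of losing conjugacy. Re-running with a few different priors is a simple way to check how much the conclusion depends on `a0` and `b0`.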

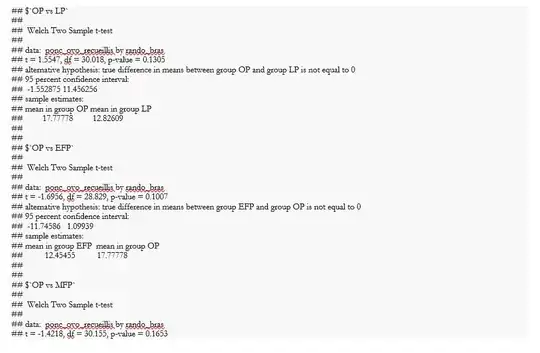

Here are some results illustrating the problem

Because the observations are not normally distributed, the statistical test is based on a comparison of medians. I haven't computed confidence intervals for the differences between medians, but I have added confidence intervals for the difference in means between each pair of treatments. Even if I computed the confidence intervals for the differences between medians, I doubt we would be able to draw any conclusions.
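A minimal sketch of how the missing median-difference intervals could be obtained with a percentile bootstrap and compared with the ±0.5 margin, TOST-style (a 90% interval for a 5% test). The function names and the simulated arms are hypothetical; each pair is checked at its own 5% level, which is what the intersection-union approach requires for a global "all arms equivalent" claim, but not a correction for declaring individual pairs equivalent.

```python
from itertools import combinations

import numpy as np


def bootstrap_median_diff_ci(x, y, alpha=0.05, n_boot=10_000, seed=0):
    """Percentile bootstrap (1 - 2*alpha) CI for median(x) - median(y).

    With alpha = 0.05 this is a 90% interval, the interval used in a
    TOST-style equivalence check at the 5% level.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Resample each arm independently, with replacement, n_boot times
    bx = rng.choice(x, size=(n_boot, x.size), replace=True)
    by = rng.choice(y, size=(n_boot, y.size), replace=True)
    diffs = np.median(bx, axis=1) - np.median(by, axis=1)
    lo, hi = np.quantile(diffs, [alpha, 1 - alpha])
    return float(lo), float(hi)


def within_margin(ci, margin=0.5):
    """True if the whole interval lies strictly inside (-margin, margin)."""
    lo, hi = ci
    return -margin < lo and hi < margin


# Usage with simulated, hypothetical data (NOT the study's data)
sim_rng = np.random.default_rng(42)
sim_arms = {name: sim_rng.poisson(10, 50) for name in "ABCDE"}
for a, b in combinations(sim_arms, 2):
    ci = bootstrap_median_diff_ci(sim_arms[a], sim_arms[b])
    print(a, b, ci, within_margin(ci))
```

One caveat specific to this endpoint: oocyte counts are integers, so every sample median is an integer or a half-integer and the bootstrap distribution of a median difference is concentrated on multiples of 0.5. A ±0.5 margin is therefore very hard to satisfy on the median scale, and the percentile bootstrap can be unreliable for medians of such discrete data.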
