I stumbled upon something interesting today while attempting a log transformation of some data that contain zeros. There must be a good reason for it that I'm just not seeing, and I'm hoping somebody can explain it in an illuminating way. This post has some good answers, but doesn't quite get at this.

I encourage you to read the whole post before giving an answer, but the TL;DR is basically:

If $y_i = \log(x_i+\epsilon)$ and $z_i = \frac{y_i-\bar y}{S_y}$, then the log likelihood (assuming standard normals) $\sum_{i=1}^n\log\phi(z_i)$ seems to be invariant to $\epsilon$, where $\phi$ is the standard normal pdf and $S_y$ is the sample standard deviation of the $y_i$.

The question, of course, is: why does this happen?

Edit

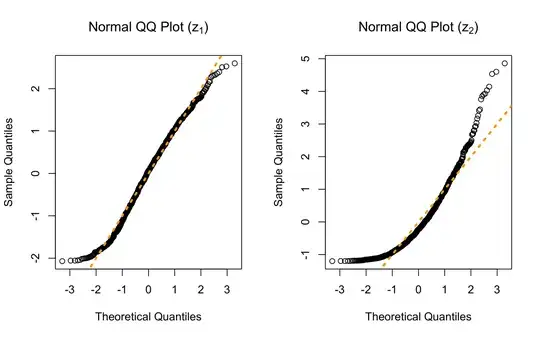

The excellent answer by @MattF does show why this happens. But to me, it's still curious that this occurs for the normal distribution but not for other distributions. Also, it seems obvious (from the plots, for example) that the choice of $\epsilon$ makes a huge difference to the shape of the distribution, and I assumed (incorrectly, apparently) that this would show up in the log likelihood evaluation. I feel like there is a deeper connection to be made, so I will wait a few days before accepting an answer.
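A short sketch of the algebra behind the invariance, given as a reading aid rather than as a restatement of that answer. It assumes $S_y$ is the sample standard deviation with the $n-1$ denominator, which is what R's `sd` computes:

$$
\sum_{i=1}^n \log\phi(z_i) = -\frac{n}{2}\log(2\pi) - \frac{1}{2}\sum_{i=1}^n z_i^2,
\qquad
\sum_{i=1}^n z_i^2 = \frac{\sum_{i=1}^n (y_i-\bar y)^2}{S_y^2} = n-1 .
$$

So the sum equals $-\frac{n}{2}\log(2\pi) - \frac{n-1}{2}$ for any data set of size $n$ and any $\epsilon$; with $n = 1000$ this is about $-1418.44$. The cancellation relies on $\log\phi$ being quadratic in $z$. A reference density whose log is not quadratic would not reduce to a function of $\sum_i z_i^2$ alone, which is consistent with the invariance being specific to the normal case.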

For illustration, let's make some data with a single problematic zero:

set.seed(42)

X <- rgamma(1000, 1.2, 1.2)

m <- min(X)

X <- X - m

hist(X, breaks=30, freq=FALSE, xlim=c(-m, max(X)))

curve(dgamma(x+m, 1.2, 1.2), add=TRUE, lwd=2, col='orange')

Because of this zero, we can't log-transform the data unless we first add a constant that makes the shifted values positive: $$y_i = \log(x_i + \epsilon).$$ How to choose $\epsilon$ becomes the natural question. Clearly, the choice of $\epsilon$ matters a great deal. To show this, let's consider two values, $\epsilon_1=0.2$ and $\epsilon_2 = 10$.

eps1 <- 0.2

y1 <- log(X + eps1)

z1 <- (y1 - mean(y1))/sd(y1)

eps2 <- 10

y2 <- log(X + eps2)

z2 <- (y2 - mean(y2))/sd(y2)

hist(z1, breaks=30, freq=FALSE)

curve(dnorm(x), add=TRUE, lwd=2, col='orange')

hist(z2, breaks=30, freq=FALSE)

curve(dnorm(x), add=TRUE, lwd=2, col='orange')

qqnorm(z1, main=expression(paste("Normal QQ Plot (", z[1], ")")))

abline(0, 1, lwd=3, lty=3, col='orange')

qqnorm(z2, main=expression(paste("Normal QQ Plot (", z[2], ")")))

abline(0, 1, lwd=3, lty=3, col='orange')

Clearly the first value of $\epsilon$ is a better choice. To choose an "optimal value", I thought I would choose the value that maximizes the log likelihood $$\sum_{i=1}^n\log \phi(z_i).$$ To my surprise this sum is equal for all values of $\epsilon$, even though the terms of the sum vary drastically.

# LOG LIKELIHOOD FOR EPS1

> sum(log(dnorm(sort(z1))))

[1] -1418.439

# LOG LIKELIHOOD FOR EPS2

> sum(log(dnorm(sort(z2))))

[1] -1418.439

# THEY ARE EQUAL (UP TO MACHINE PRECISION)

> sum(log(dnorm(sort(z1)))) == sum(log(dnorm(sort(z2))))

[1] TRUE
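A quick numerical check of this equality, as a sketch: because `sd` divides by $n-1$, the squared standardized values always sum to $n-1$, so the log likelihood should equal $-\frac{n}{2}\log(2\pi) - \frac{n-1}{2}$ whatever $\epsilon$ is. The helper names `closed_form_ll`, `std_loglik`, and `xx_demo` are hypothetical, and the data are regenerated the same way as above so the block runs on its own.

```r
# Closed form of the standardized normal log likelihood: it depends only on n.
closed_form_ll <- function(n) -n / 2 * log(2 * pi) - (n - 1) / 2

# Log likelihood of the standardized, shifted-log data for one value of eps.
std_loglik <- function(eps, xx) {
  yy <- log(xx + eps)
  zz <- (yy - mean(yy)) / sd(yy)   # sd() uses the n - 1 denominator
  sum(dnorm(zz, log = TRUE))
}

# Stand-in data with one exact zero, mirroring the setup above.
set.seed(42)
xx_demo <- rgamma(1000, 1.2, 1.2)
xx_demo <- xx_demo - min(xx_demo)

# Evaluate the log likelihood over a wide range of shifts.
eps_grid <- c(0.01, 0.2, 1, 10, 100)
ll_vals <- sapply(eps_grid, std_loglik, xx = xx_demo)

# Compare with a tolerance rather than `==`, which can fail on rounding noise.
print(all.equal(ll_vals, rep(closed_form_ll(length(xx_demo)), length(eps_grid))))
```

Using `all.equal` instead of `==` is a deliberate choice here: the sums agree mathematically, but floating-point rounding need not make them bit-identical for every $\epsilon$.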

Although we are now officially treading into "beyond the scope of the problem" waters, for curious parties, I found the value of $\epsilon$ that led to the best qq plot. This can be done with the following R code

qq_func <- function(eps, xx){

res <- rep(NA, length(eps))

for(i in seq_along(eps)){

yy <- log(xx + eps[i])

zz <- (yy - mean(yy))/sd(yy)

qq <- qqnorm(zz, plot.it=FALSE)

res[i] <- sd(qq$x-qq$y)

}

return(res)

}

eps_hat <- optim(1.5e-5, qq_func,

method="Brent", lower=0.1, upper=0.5,

xx=X)$par

curve(qq_func(x, xx=X), from=0.1, to=0.5, lwd=2,

xlab=expression(epsilon), ylab="|qq error|")

This gives an optimal value of $\epsilon = 0.2027$ which is what I'm using moving forward.


In summary, the main question is: why is the log likelihood invariant to $\epsilon$? As a secondary matter, I am also interested in alternatives to the QQ-plot-based approach, but was unable to find any relevant literature or methodology.
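One possible alternative to the QQ-based criterion, offered as a sketch rather than an established recommendation: treat $\epsilon$ as the shift of a shifted log-normal model and keep the Jacobian term that standardizing discards. If $\log(x+\epsilon) \sim N(\mu, \sigma^2)$, the density of $x$ carries an extra factor $1/(\sigma(x+\epsilon))$, so after plugging in the maximum likelihood estimates of $\mu$ and $\sigma$ the profile log likelihood does depend on $\epsilon$. The names `profile_ll` and `xx_demo` are hypothetical, the data are simulated stand-ins, and the search interval is an assumption. The likelihood of this three-parameter model is generally reported to be unbounded as $\epsilon$ approaches $-\min(x)$, so only an interior maximum should be trusted.

```r
# Profile log likelihood of eps under the model log(x + eps) ~ N(mu, sigma^2),
# with mu and sigma^2 replaced by their maximum likelihood estimates.
profile_ll <- function(eps, xx) {
  yy <- log(xx + eps)
  n <- length(yy)
  sigma2_hat <- mean((yy - mean(yy))^2)   # MLE of sigma^2 (denominator n)
  # The final sum(yy) is the Jacobian term: sum of log(x_i + eps).
  -n / 2 * log(2 * pi * sigma2_hat) - n / 2 - sum(yy)
}

# Stand-in data with one exact zero, generated as in the question.
set.seed(42)
xx_demo <- rgamma(1000, 1.2, 1.2)
xx_demo <- xx_demo - min(xx_demo)

# Search an interval whose lower bound is kept away from zero on purpose
# (assumed bounds; the maximizer may sit at a boundary of this interval).
fit <- optimize(profile_ll, interval = c(0.01, 5), xx = xx_demo, maximum = TRUE)
print(fit$maximum)    # eps maximizing the profile log likelihood on the interval
print(fit$objective)  # profile log likelihood at that eps
```

If the reported maximizer lands on an endpoint of the interval, that suggests the interval, not the data, is determining the answer, and the bounds should be revisited.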