So after thinking about this for a while, a friend suggested that this could be related to the bi-modality in the data (or rather, the fact that there is more than one "peak" in the density plot), because when he log-transformed the data he did not get AUC=0 (though still not close to 0.5).

To test this hypothesis, I synthesized a dataset drawn from a random bi-modal distribution, and I randomly assigned 'A' and 'B' labels such that there is no real group difference:

n <- 100

m <- 0

s <- 1

The degree of bi-modality is determined by delta (distance between peaks):

delta <- 5

d1 <- rnorm(n, m, s)

d2 <- rnorm(n, m+delta, s)

d_all <- c(d1,d2)

df_all <- data.frame(Subject = c(1:length(d_all)), Label = rep(c('A', 'B'), 0.5*length(d_all)), Value = d_all)

ggplot(df_all, aes(x=Value)) + geom_histogram(aes(fill=Label)) + theme_bw()

When I run LOO-CV on this random data, and plot the predicted scores (y-axis) against the original values (x-axis), I get a very weird pattern. While it is clear that there is no separability on the x-axis (original variable), we can draw a line that perfectly separates the two groups on the y-axis (logit scores):

And indeed, AUC=0 (or AUC=1, depending on the hypothesis; since my original one resulted in AUC=0, I will stick to that).

The degree of bi-modality is determined by the delta parameter. Here is the probability of perfect separability (AUC=0) depending on delta:

iterations <- 100

delta_values <- c(0:10)

n <- 100

m <- 0

s <- 1

df_AUC <- data.frame(Delta = rep(delta_values, each=iterations), Iteration = rep(c(1:iterations), length(delta_values)), AUC=NA)

create_dist <- function(d1, d2, delta) {

d_all <- c(d1, d2+delta)

df_all <- data.frame(Subject = c(1:length(d_all)), Label = rep(c('old', 'young'), 0.5*length(d_all)), Value = d_all)

df_all$Label <- as.factor(df_all$Label)

df_all

}

run_LOOCV <- function(df_all) {

df_all$prediction <- NA

for (sub in df_all$Subject) {

train_set <- df_all[df_all$Subject != sub,]

model_train <- glm(data=train_set, Label~Value, family='binomial')

sub_score <- predict.glm(model_train, newdata=df_all[df_all$Subject == sub,], type='link')

df_all$prediction[df_all$Subject==sub] <- sub_score

}

roc_model <- pROC::roc(df_all$Label, df_all$prediction, auc=T, percent=T, ci=F, plot=F, quiet=T, direction='<')

roc_model$auc

}

for (i in c(1:iterations)) {

#print(paste("Iteration:", i))

#start_time <- Sys.time()

d1 <- rnorm(n, m, s)

d2 <- rnorm(n, m, s)

for (d in delta_values) {

df_all <- create_dist(d1,d2,d)

df_AUC$AUC[df_AUC$Iteration == i & df_AUC$Delta == d] <- run_LOOCV(df_all)

}

#end_time <- Sys.time()

#print(paste('Time of iteration:', round(as.numeric(difftime(end_time, start_time, unit='m')),2), 'minutes.'))

}

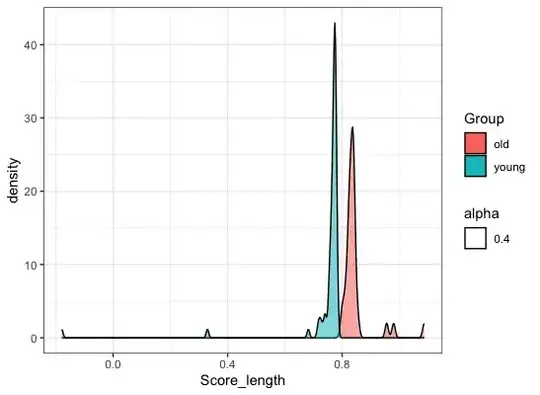

Iterating 100 times on random datasets, I plotted the probability of AUC=0. It does go up with more bimodality! The "danger" is smaller with multiple independent variables, or with a larger n, but it is still there (I tried, though I do not show the results here... Trust me).

What is the explanation of this?

The fitted logistic curve as a seesaw

The red points are "category 1", and the black points are "category 0". The y-axis is the probability of being in "category 1" (red). It is almost flat on 0.5, like a horizontal seesaw lying on a balanced random dataset, as there is really no effect. This is true whether data is binomial or not, and regardless of n size. However, this will change if we remove one point.

What happens in LOO-CV, when we exclude one data point?

If we think of the prediction line (gray) as a "seesaw", we can clearly see that with bi-modal data, each point has a high leverage and strongly affects the angle of the seesaw.

Let's focus on the right side of the seesaw. This side will be pushed up if we have more reds than blacks, and pushed down if we have fewer reds than blacks.

If we remove a red point, in one of the LOO-CV iterations, the seesaw will be pushed down. So when we predict the score of that red point, it will get a somewhat lower value than before, on the whole dataset when data was more balanced and seesaw was flatter. You can see the seesaw tilting down after removing one of these red points on the right side (gray line - balanced seesaw; black line - seesaw after leaving one right red point out):

The same rational only to the other direction works for the left side of the seesaw. Removing a red point will change the red/black balance and push the seesaw down, again resulting in lower scores for the red points.

Here is the code that generated this seesaw toy example:

n <- 10

m <- 0

s <- 1

delta <- 10

d1 <- rnorm(n, m, s)

d2 <- rnorm(n, m+delta, s)

d_all <- c(d1,d2)

df_all <- data.frame(Subject = c(1:length(d_all)), Label = rep(c('a', 'b'), 0.5*length(d_all)), Value = d_all)

df_all$Label <- as.factor(df_all$Label)

model_all <- glm(data=df_all, formula=Label~Value, family='binomial')

df_all$prediction <- predict(object=model_all, newdata=df_all, type='response')

all the red points on the right:

red_subs_right <- df_all$Subject[df_all$Value>5 & df_all$Label=='b']

df_sub <- df_all[df_all$Subject!=red_subs_right[1],]

model_sub <- glm(data=df_sub, formula=Label~Value, family='binomial')

df_sub$prediction <- predict(object=model_sub, newdata=df_sub, type='response')

flat seesaw (all data)

plot(prediction~Value, df_all, col=df_all$Label, ylim=c(0,1))

lines(prediction~Value, df_all, lwd=1, col='gray')

tilted seesaw (subset of the data)

lines(prediction~Value, df_sub, lwd=1, col='black')

Conclusion

Leave-one-out interferes with the balance of the seesaw, changing its tilt for each LOO iteration in a consistent way across data points of the same label, creating this weird result of greater or even perfect separability. But of course using this curve to predict real new data will be a disaster, because the tilt angle will be fixed and completely meaningless on a larger scale for data points that do not take any part in the fitting process.

The danger of bimodality for LOO-CV is mitigated by more variables (that are themselves perfectly normal) or a larger n, but it is still there. We might not get perfect separability but improved separability, and in any event the chances for that increase with the degree of bi-modality regardless of the number of variables in the model or of n. This could happen even if there is no bimodality (delta=0) - try it!

To conclude, when cross validating using leave-one-out, it is important to check the data if it is really normal. If not - chances increase that you get spurious separability on your predicted values!