Let $\mathcal{L}(\theta\mid x_1,\ldots,x_n)$ be the likelihood function of parameters $\theta$ given i.i.d. samples $x_i$ with $i=1,\ldots,n$.

I know that under some regularity conditions the $\theta$ that maximizes $\mathcal{L}$ converges in probability to the true value $\theta_0$ as the sample size becomes infinite (i.e., the MLE is consistent). However, this does not mean that the likelihood for other values of $\theta$ is $0$: it just means that all those other values will be less than the one attained at $\theta=\theta_0$.

My question is whether the likelihood actually becomes $0$ in the limit when $n \to \infty$ for all values $\theta \neq \theta_0$.

I suppose that in order for this to hold (if it does), some regularity conditions would be required (possibly the same ones required to claim that the MLE is consistent and asymptotically efficient). Feel free to consider just the cases in which such conditions are met.

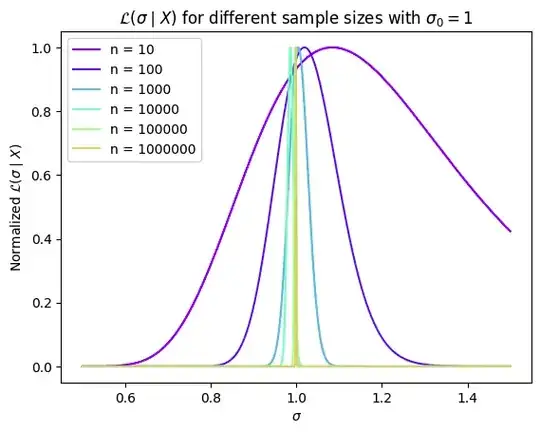

EDIT: Maybe the question is too general to be answered, so I want to specify that I am working with the covariance matrix of a multivariate Gaussian, nothing too fancy. In particular, for a one-dimensional random variable, I see that the behavior I described above is apparently true:

The problem is that I do not know how to describe this behavior mathematically. I mean, considering distributions like the Gaussian that meet the necessary regularity conditions, what does the likelihood function converge to when $n \to \infty$?