I was reading this article on Logistic Regression for Rare Events.

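In it, a modification to the classical log-likelihood ("Firth's Correction") is proposed, in which a penalty term based on the Fisher Information is added. The penalty is half the log-determinant of the Fisher Information matrix. Since the Jeffreys Prior is proportional to the square root of the determinant of the Fisher Information, this penalty is the log of the Jeffreys Prior (up to an additive constant):

$$\mathcal{L}^*(\beta) = \mathcal{L}(\beta) + \frac{1}{2} \log\left|\mathbf{I}(\beta)\right|$$

Here $\mathcal{L}(\beta)$ is the ordinary log-likelihood and $\mathbf{I}(\beta)$ is the Fisher Information matrix evaluated at the coefficients $\beta$.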
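To make the formula concrete, here is a minimal sketch of how I understand the penalized log-likelihood for a logistic regression. It assumes the Fisher Information is $X^\top W X$ with $W = \mathrm{diag}(p_i(1 - p_i))$. The function names and the toy data are my own, made up for illustration, and are not taken from the article.

```python
import numpy as np


def log_likelihood(beta, X, y):
    """Ordinary Bernoulli log-likelihood of a logistic regression."""
    eta = X @ beta
    # log(1 + exp(eta)) computed stably via logaddexp
    return float(np.sum(y * eta - np.logaddexp(0.0, eta)))


def fisher_information(beta, X):
    """Fisher Information X^T W X, with W = diag(p * (1 - p))."""
    p = 1.0 / (1.0 + np.exp(-(X @ beta)))
    w = p * (1.0 - p)
    return X.T @ (X * w[:, None])


def firth_penalized_log_likelihood(beta, X, y):
    """Log-likelihood plus 0.5 * log|I(beta)| (the log Jeffreys Prior)."""
    _, logdet = np.linalg.slogdet(fisher_information(beta, X))
    return log_likelihood(beta, X, y) + 0.5 * logdet


# Hypothetical toy data: intercept plus one covariate, a single event.
X = np.array([[1.0, 0.2], [1.0, -1.1], [1.0, 0.7],
              [1.0, 1.5], [1.0, -0.4], [1.0, 2.3]])
y = np.array([0, 0, 0, 0, 0, 1])

for beta in (np.zeros(2), np.array([-3.0, 1.0]), np.array([-30.0, 10.0])):
    ll = log_likelihood(beta, X, y)
    pll = firth_penalized_log_likelihood(beta, X, y)
    print(beta, "loglik:", ll, "penalty:", pll - ll)
```

In this sketch the penalty is largest at $\beta = 0$ (where every $p_i(1 - p_i)$ attains its maximum of 1/4) and falls as the fitted probabilities approach 0 or 1, so it does pull estimates towards 0 in some sense. What I cannot see is why this specific penalty removes bias.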

I am trying to understand why a penalty term based on the Jeffreys Prior was chosen, and why exactly it is useful for correcting the bias associated with rare events.

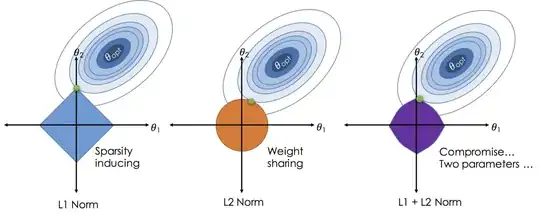

For instance, when it comes to Penalized Regression, I have read about penalty terms based on the L1 and L2 norms (LASSO and Ridge, respectively). Visually, I can understand why such penalty terms might be useful. The following types of illustrations demonstrate how such penalty terms serve to "push" regression coefficient estimates towards 0 and thereby might be able to mitigate problems associated with overfitting:

However, in the case of Firth's Correction, I am not sure how a penalty based on the Jeffreys Prior (that is, on the square root of the determinant of the Fisher Information) is useful here. Mathematically speaking, how exactly does such a penalty reduce the bias associated with rare events? Currently, this choice of penalty seems somewhat arbitrary to me.