I have read that the Vuong test is no longer considered appropriate for testing whether a ZINB fits better than a negative binomial model, because the two models are neither strictly non-nested nor partially non-nested. I'm modeling in R (glmmTMB) and cannot find a Vuong (nested) option. If the NB is nested in the ZINB (when ziformula=~0), is it OK to use the chi-square goodness-of-fit test? My AIC is considerably lower for the ZINB than for the NB, but what is the correct test for comparing the two models? I do have theoretical reasons for why there would be structural zeros.


I hope you get a direct answer from an expert on asymptotic hypothesis tests, but in the meantime I thought I'd mention a bootstrap comparison between the zero-inflated and non-inflated AICs as a possibility. – John Madden Apr 13 '23 at 17:51


Thank you for the suggestion, John. However, from this post, I'm not sure what bootstrapping the AICs would do. https://stats.stackexchange.com/questions/610339/selecting-the-model-by-bootstrapping-aic-vs-log-loss – Claire Richards Apr 13 '23 at 18:14


Could you be a little more precise with your concern? I suppose I'm suggesting what Richard suggested in their answer in the linked question, which is to use "... bootstrapping, [to tell] you something about the variability in AIC.", and then to use this variability estimate to conduct a significance test. – John Madden Apr 13 '23 at 18:19


I guess I wasn't clear that the problem with using the chi-square has to do with variability or distributional assumptions? I am not following. I guess I worded my question as though I'm looking for statistical significance between AICs but is that what I need to prove ZINB is correct? – Claire Richards Apr 13 '23 at 18:38

1 Answer

(Do you really need to do a significance test if the difference in AIC is large and you have a theoretical justification for including zero-inflation? Why?)

tl;dr a parametric bootstrap test is probably the most straightforward solution to your problem; it's moderately computationally intensive (will take something like 2000x the computational effort of fitting your original model, although you could parallelize and might be able to whittle the time down further by using smarter starting parameter values ...)

library(glmmTMB)

m1 <- glmmTMB(count~mined, ziformula =~mined, Salamanders, family="nbinom1")

## fit null/reduced model (no zero-inflation)

m0 <- update(m1, ziformula = ~0)

## parametric bootstrap; simulate data from reduced model, fit with

## both full and reduced model, find diff(logLik)

simfun <- function() {

d <- transform(Salamanders, count = simulate(m0)[[1]])

logLik(update(m1, data = d)) - logLik(update(m0, data = d)))

}

## simulate a null distribution (SLOW)

set.seed(101); r <- replicate(1000, simfun())

## compute diff(logLik) for real data

obs_diff <- logLik(m1) - logLik(m0)

## compare graphically

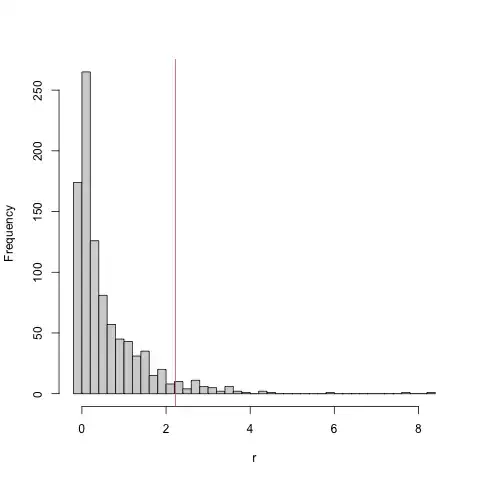

hist(r, breaks = 50); abline(v = obs_diff)

## p-value (proportion that test_stat(null) >= test_stat(observed))

mean(c(na.omit(r), obs_diff) > obs_diff)

[1] 0.05345912

Note there is a minor concern about eliminating NA values (47/1000 in this case): these are probably cases where the estimated level of zero-inflation converged to 0 ($-\infty$ on the logit scale). Dropping them will make the p-value slightly conservative.

Wilson (2015) goes into detail about why the Vuong test is inappropriate for testing the hypothesis of zero-inflation. For the record, they propose some solutions, but none of these are (AFAICS) easily applicable to/available off-the-shelf in your case:

> Note that when the value of [the zero-modification parameter] is allowed to be both positive or negative fitted values of [that parameter] do not “pile up” close to zero, and the distribution of zero-modification parameter is normal and hence a non-zero-inflated model is (strictly) nested in its zero-inflated counterpart, and hence a Vuong test for nested models could be used as a test of zero-inflation/deflation. Dietz and Böhning (2000) proposed a link function that allowed for zero-deflation, and more recently Todem et al. (2012) have proposed a score test that incorporates a link function that allows for both zero-inflation and deflation.

As for a "chi-square goodness of fit test": I'm not sure, but I think you mean a likelihood ratio test (whose test statistic, $2 (\log \mathcal{L}_\textrm{full} - \log \mathcal{L}_\textrm{restricted})$, is $\chi^2$ distributed under the null hypothesis)? This won't work either, because it assumes that the null values of the distinguishing parameters (the zero-inflation probability in this case) are not on the edge of their feasible domain. That is, the derivation depends on a quadratic expansion around the null parameter value, and we can't expand around a value of $p_z = 0$ because that would involve negative probabilities ... this issue is most thoroughly described for testing the hypothesis that the random-effects variance is zero in a mixed model (see refs in this section of the GLMM FAQ), but the same issue applies here.

Roughly speaking, a likelihood ratio test of significance for a single parameter on the boundary will give a p-value that's twice as large as it should be (again, see refs in GLMM FAQ): this appears to be the case here, where the LRT p-value (from anova(m1, m0)) is 0.1084, very close to twice the parametric bootstrap result.
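The "twice as large" rule of thumb can be written out as a short worked calculation. This is only a sketch, assuming a single tested parameter that sits on the boundary under the null; the symbols $\lambda$, $p_\textrm{naive}$ and $p_\textrm{boundary}$ are introduced here for illustration and are not part of the code above.

```latex
\begin{align*}
\lambda &= 2\,(\log \mathcal{L}_\textrm{full} - \log \mathcal{L}_\textrm{restricted}),
  \qquad
  \lambda \;\overset{H_0}{\sim}\; \tfrac{1}{2}\,\chi^2_0 + \tfrac{1}{2}\,\chi^2_1 \\[4pt]
p_\textrm{boundary}
  &= \tfrac{1}{2}\,\Pr\!\left(\chi^2_1 \ge \lambda_\textrm{obs}\right)
   = \tfrac{1}{2}\,p_\textrm{naive}
  \qquad (\lambda_\textrm{obs} > 0) \\[4pt]
\tfrac{1}{2} \times 0.1084 &= 0.0542
  \quad \text{versus a parametric bootstrap p-value of } 0.0535
\end{align*}
```

Here $\chi^2_0$ denotes a point mass at zero: roughly half the time the boundary estimate is exactly at the null value and the statistic is zero. Because `ziformula = ~mined` adds more than one zero-inflation coefficient, the single-parameter mixture is only a rough guide for this particular example, which is consistent with the "roughly speaking" caveat.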

Wilson, Paul. 2015. “The Misuse of the Vuong Test for Non-Nested Models to Test for Zero-Inflation.” Economics Letters 127 (February): 51–53. https://doi.org/10.1016/j.econlet.2014.12.029.



thank you for your thorough response. I guess it is unsurprising that many people are still using both the likelihood ratio and the Vuong test. The reason that I would like to do a statistical test is just because of self-doubt. Zero-deflation in addition to zero-inflation is likely due to the data structure, though the hurdle model does not perform better or differently than the ZINB (nearly identical results). Also, the overall AIC is high, so is a 5000 AIC difference large if the overall AIC is 500,000? – Claire Richards Apr 13 '23 at 20:07


I can answer your last point, but it would make a good follow-up question (if it hasn't already been answered somewhere on this site). (1) only differences in AIC matter, not 'absolute' values ... (2) an AIC difference of 5000 is so large that it makes me think that the data set is so big that it may need a more complex structure to avoid significant biases from model misspecification ... – Ben Bolker Apr 13 '23 at 20:22


PS an AIC difference of 5000 is not even worth testing in any other way. It will correspond to a parametric bootstrap p-value far too low to distinguish from zero in any reasonably sized simulation ensemble. – Ben Bolker Apr 13 '23 at 20:23


Yes, it is longitudinal data over nearly four years. I hope my complex structure is already complex enough. – Claire Richards Apr 13 '23 at 20:47


Am I understanding correctly that basically a likelihood ratio test would be conservative, so it is more about type 1 error? So if you used it and it is significant, then you're ok, right? I understand that it's not necessary in my case but just to confirm my understanding. – Claire Richards Apr 14 '23 at 03:41


Yes, in general we would expect likelihood ratio tests to be conservative. – Ben Bolker Apr 14 '23 at 12:43