I have the following question. At first it seemed very silly, but after thinking about it I found myself struggling.

Given a random variable $X$, I should decide whether each of the following statements is true or false and explain why:

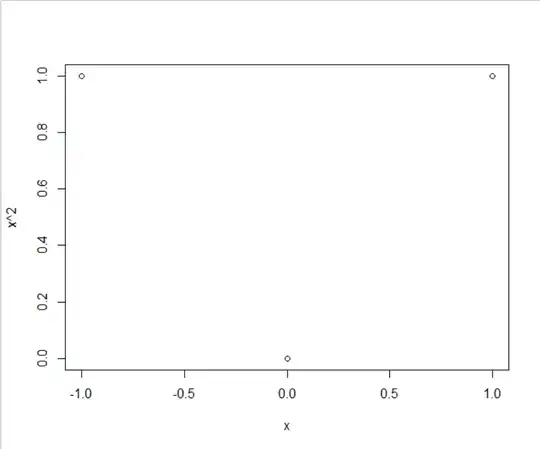

1 - $X$ and $X^2$ are always independent.

2 - $X$ and $X^2$ are never independent.

3 - $X$ and $X^2$ are always correlated.

4 - $X$ and $X^2$ are never correlated.

Here are my answers. I was able (I think) to answer the independence part, but not the correlation part.

1 - False: since $X^2$ can only be obtained from $X$ or $-X$, it is dependent on $X$.

2 - False: as said before, we can get $X^2$ using $-X$. This way $X^2$ and $X$ are independent.

3 & 4: I don't have a clue.

Could you guys help me with it? Did I answer questions 1 and 2 right? Any explanation in words or via a mathematical approach would help.

Thank you!

Please add the self-study tag and read its tag-wiki info: https://stats.stackexchange.com/tags/self-study/info. I couldn't follow your reasoning for 2, nor how it leads to the answer you gave; maybe you should clarify that. For "always/never" type statements, identifying simple counterexamples is often a useful strategy. – Glen_b Feb 12 '23 at 16:07
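
To make the counterexample strategy concrete, here is a minimal Python sketch that checks independence and covariance of $X$ and $X^2$ exactly for a few small discrete distributions. The three distributions (uniform on $\{-1,0,1\}$, on $\{-1,1\}$ and on $\{0,1\}$) and the helper names are illustrative assumptions, not part of the original exercise. It uses $\operatorname{Cov}(X, X^2) = E[X^3] - E[X]\,E[X^2]$ and tests independence by comparing the joint pmf with the product of the marginals.

```python
from fractions import Fraction
from itertools import product


def uniform(values):
    """Hypothetical helper: uniform pmf on a finite list of distinct values."""
    return {v: Fraction(1, len(values)) for v in values}


def expect(pmf, f):
    """E[f(X)] for a discrete pmf given as {value: probability}."""
    return sum(p * f(x) for x, p in pmf.items())


def covariance_x_x2(pmf):
    """Cov(X, X^2) = E[X^3] - E[X] * E[X^2]."""
    ex = expect(pmf, lambda x: x)
    ex2 = expect(pmf, lambda x: x ** 2)
    ex3 = expect(pmf, lambda x: x ** 3)
    return ex3 - ex * ex2


def independent_x_x2(pmf):
    """True if P(X=x, X^2=y) = P(X=x) * P(X^2=y) for every pair (x, y)."""
    # Marginal pmf of Y = X^2.
    pmf_y = {}
    for x, p in pmf.items():
        pmf_y[x ** 2] = pmf_y.get(x ** 2, 0) + p
    for x, y in product(pmf, pmf_y):
        # The joint mass sits only on pairs with y == x^2.
        joint = pmf[x] if x ** 2 == y else 0
        if joint != pmf[x] * pmf_y[y]:
            return False
    return True


# Illustrative distributions (assumptions chosen for this sketch).
sym3 = uniform([-1, 0, 1])  # symmetric around 0
sign = uniform([-1, 1])     # X^2 is constant
bern = uniform([0, 1])      # X^2 equals X

for name, pmf in [("sym3", sym3), ("sign", sign), ("bern", bern)]:
    print(name, independent_x_x2(pmf), covariance_x_x2(pmf))
```

Under these assumptions, the uniform distribution on $\{-1,0,1\}$ gives dependent but uncorrelated $X$ and $X^2$ (against statements 1 and 3), the uniform distribution on $\{-1,1\}$ makes $X^2$ constant and hence independent of $X$ (against statement 2), and the uniform distribution on $\{0,1\}$ gives $X^2 = X$ with nonzero covariance (against statement 4).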