This formulation of the question is presented as a way to expose the relevant concepts of separability and PCA.

Separability

Suppose $S$ and $X$ are sets and that $X = Y\cup Z$ partitions $X;$ that is, $Y\cap Z = \emptyset;$ and let $\mathscr F$ be a set of $X$-valued functions defined on $S.$

Let us say that a pair $\{A,B\}$ where $A\subset S$ and $B\subset S$ is $\mathscr F$-separable when there exists $f\in\mathscr F$ for which either $f(A)\subset Y$ and $f(B)\subset Z$ or $f(A)\subset Z$ and $f(B)\subset Y.$
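To make the definition concrete, here is a minimal sketch for the special case where $\mathscr F$ is a finite family that can be searched exhaustively. The names `separates`, `is_separable`, and `in_Y` are hypothetical and introduced only for this illustration; `in_Y` decides membership in $Y,$ and $Z$ is taken to be the complement of $Y$ in $X.$

```python
def separates(f, A, B, in_Y):
    """True when f sends A into Y and B into Z, or A into Z and B into Y."""
    a_in_Y = [in_Y(f(s)) for s in A]
    b_in_Y = [in_Y(f(s)) for s in B]
    a_to_Y_b_to_Z = all(a_in_Y) and not any(b_in_Y)
    a_to_Z_b_to_Y = not any(a_in_Y) and all(b_in_Y)
    return a_to_Y_b_to_Z or a_to_Z_b_to_Y


def is_separable(family, A, B, in_Y):
    """True when at least one function in the (finite) family separates {A, B}."""
    return any(separates(f, A, B, in_Y) for f in family)


# Illustration: S = X = integers, Y = positive numbers, Z = non-positive numbers.
def in_Y(x):
    return x > 0

family = [lambda s: s, lambda s: s - 3]

print(is_separable(family, {4, 5}, {1, 2}, in_Y))  # s - 3 sends {4, 5} to Y and {1, 2} to Z
print(is_separable(family, {1, 4}, {2, 5}, in_Y))  # neither function works here
```

For an infinite family such as the affine functions considered next, an exhaustive search is not available and a different method (for instance, a linear-programming feasibility check) would be needed.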

(This language assumes $X=Y\cup Z$ is understood in the context. Otherwise, we would use a more specific expression like "$(\mathscr F, Y\cup Z)$-separable.")

Linear separability

Let $S=\mathbb W$ be a real vector space. Take $\mathscr F$ to be the set of translates of linear forms. That is, $f\in\mathscr F$ means $f$ is a real-valued function on $\mathbb W$ and there exist a scalar $b$ and a linear form (aka covector) $\phi$ for which $f(w)=\phi(w)+b$ for all $w\in\mathbb W.$ Thus $X=\mathbb R.$ Linear separability is often defined by taking $Y$ to be the positive numbers and $Z$ to be the non-positive numbers. If your definition differs, you will want to pause here to verify that it's equivalent to this one.
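As one instance of such a check, here is a sketch of why the common variant with strict inequalities on both sides agrees with this definition. It assumes $A$ is finite and non-empty (true for the support of a sample); $m$ and $g$ are names introduced only for this illustration. Suppose $f\in\mathscr F$ satisfies $f(A)\subset Y$ and $f(B)\subset Z.$ Then

$$m = \min_{a\in A} f(a) \gt 0,\qquad g(w) = f(w) - \frac{m}{2} = \phi(w) + \left(b - \frac{m}{2}\right),$$

so $g\in\mathscr F,$ with $g(a)\ge m/2 \gt 0$ for all $a\in A$ and $g(w)\le -m/2 \lt 0$ for all $w\in B.$ Conversely, any $f$ that is strictly positive on one set and strictly negative on the other already meets the definition above.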

PCA

Given a probability distribution $\Pr$ on a vector space $\mathbb W$ (together with other information: namely, an inner product), PCA finds a basis of $\mathbb W$ determined by that distribution. That's all we need to know about PCA for this question.

The question

Given samples $\mathscr A$ and $\mathscr B$ from a real vector space $\mathbb W,$ let $\Pr$ be the empirical distribution of the combined sample. (That is, it assigns equal weight to every vector in the combined sample. Such a distribution always exists provided the combined sample is not empty.) Let $A\subset \mathbb W$ be the support of $\mathscr A$ (the set of distinct values in the sample) and $B\subset \mathbb W$ be the support of $\mathscr B.$
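For readers who prefer code, these objects can be sketched as follows. This is only an illustration: the function name `empirical_distribution` and the tiny samples are made up, and vectors are represented as tuples so they can be counted.

```python
from collections import Counter
from fractions import Fraction


def empirical_distribution(sample_a, sample_b):
    """Give every observation in the combined sample the same weight 1/n.

    A vector that occurs k times therefore ends up with probability k/n.
    """
    combined = list(sample_a) + list(sample_b)
    if not combined:
        raise ValueError("the combined sample must not be empty")
    n = len(combined)
    return {v: Fraction(k, n) for v, k in Counter(combined).items()}


# Made-up samples in the plane; (1, 1) occurs twice in the first one.
sample_a = [(0, 0), (1, 1), (1, 1)]
sample_b = [(1, 0), (0, 1)]

pr = empirical_distribution(sample_a, sample_b)
support_a = set(sample_a)  # the set A of distinct values in the first sample
support_b = set(sample_b)  # the set B of distinct values in the second sample
```

Note that separability is a statement about the supports `support_a` and `support_b`, whereas PCA only sees the distribution `pr`.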

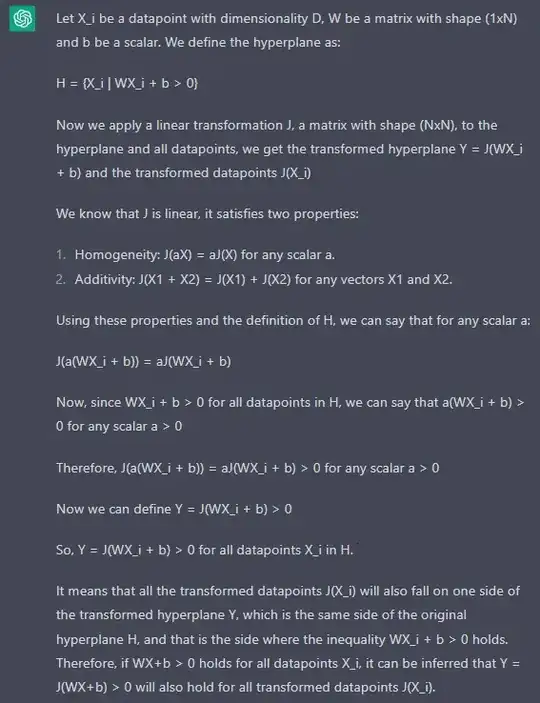

Prove that if $\{A,B\}$ is not linearly separable and you apply PCA to the combined sample $(\mathscr A,\mathscr B),$ then $\{A,B\}$ is still not linearly separable.

There is nothing to show.

The reason is that the linearity of a form $\phi$ does not depend on choosing a basis for a vector space. How is this standard fact proven? By showing that (1) a change of basis effects a linear transformation of coordinates and (2) the composition of such a linear transformation with a linear function is still a linear function. If you feel you need to demonstrate this to satisfy your professor, that would be fine.
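If a numerical check helps, here is a minimal sketch in the plane. It makes two assumptions that are not part of the argument above: PCA is computed from the closed-form angle of the leading eigenvector of a $2\times 2$ covariance matrix, and the data are centered at their mean before rotating (as many implementations do). The helper names and the sample points are hypothetical. The point is that any $f(w)=\phi(w)+b$ can be rewritten in PCA coordinates $z$ as another translate of a linear form with the same values, so its signs on $A$ and $B$ cannot change.

```python
import math


def pca_2d(points):
    """Return the mean and (cos, sin) of the first principal direction."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)  # angle of the leading eigenvector
    return (mx, my), math.cos(theta), math.sin(theta)


def to_pca_coords(w, mean, c, s):
    """Coordinates of w in the centered, rotated (principal) basis."""
    dx, dy = w[0] - mean[0], w[1] - mean[1]
    return (c * dx + s * dy, -s * dx + c * dy)


def in_pca_coords(phi, b, mean, c, s):
    """Rewrite f(w) = phi.w + b as g(z) = psi.z + b2 in PCA coordinates."""
    psi = (c * phi[0] + s * phi[1], -s * phi[0] + c * phi[1])
    b2 = phi[0] * mean[0] + phi[1] * mean[1] + b  # the constant absorbs the centering
    return psi, b2


A = [(0.0, 0.0), (1.0, 1.0)]
B = [(1.0, 0.0), (0.0, 1.5)]
phi, b = (2.0, -1.0), 0.25  # an arbitrary translate of a linear form

mean, c, s = pca_2d(A + B)
psi, b2 = in_pca_coords(phi, b, mean, c, s)

max_gap = 0.0
for w in A + B:
    z = to_pca_coords(w, mean, c, s)
    f_w = phi[0] * w[0] + phi[1] * w[1] + b
    g_z = psi[0] * z[0] + psi[1] * z[1] + b2
    max_gap = max(max_gap, abs(f_w - g_z))

print(max_gap)  # zero up to floating-point rounding
```

Because the correspondence between $f$ and $g$ runs both ways, a separating function exists in one coordinate system exactly when one exists in the other.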

You might be working with a slightly different concept of PCA in which only the first few principal vectors are retained. By using only a few of the principal vectors you are limiting the set of linear forms in $\mathscr F$ you are willing to use after applying PCA (you keep only the forms that vanish on the unused principal vectors and throw away all the others). That only makes it harder to achieve separability after applying PCA.
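A small made-up example shows how much harder it can get. In the sketch below (hypothetical names and data, same closed-form planar PCA with centering as an assumption), the two sets are separated in the plane by $f(w)=y,$ yet after keeping only the first principal vector they project onto exactly the same points and no function of that single coordinate can separate them.

```python
import math


def first_pc_scores(points):
    """Project centered points onto the first principal direction (planar case)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    c, s = math.cos(theta), math.sin(theta)
    return [c * (x - mx) + s * (y - my) for x, y in points]


def separable_on_line(za, zb):
    """On a line, a translate of a linear form splits at a single threshold,
    so two non-empty finite sets are separable only if one lies strictly
    to one side of the other."""
    return max(za) < min(zb) or max(zb) < min(za)


# Two horizontal rows of points: wide spread in x, small offset in y.
A = [(-2.0, 0.5), (0.0, 0.5), (2.0, 0.5)]
B = [(-2.0, -0.5), (0.0, -0.5), (2.0, -0.5)]

# With both coordinates available, f(w) = y sends A to Y and B to Z.
separated_in_plane = all(y > 0 for _, y in A) and all(y <= 0 for _, y in B)

# Keep only the first principal vector (here the x direction, where the variance is).
scores = first_pc_scores(A + B)
za, zb = scores[:len(A)], scores[len(A):]
separated_after_truncation = separable_on_line(za, zb)

print(separated_in_plane, separated_after_truncation)
```

The converse direction is the one that matters for the question: truncation never creates separability, because every form still available after truncation was already available before it.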