I am trying to determine whether the difference between two benchmarks is statistically significant.

The input is the number of requests per second (req/sec) handled by an HTTP server, and I'm using scipy.stats.ttest_ind to calculate the p-value.

    import scipy.stats as st

    # req/sec samples from the first benchmark (30 runs)
    A1 = [
        4670, 4646, 4612, 4618, 4646,
        4609, 4623, 4629, 4566, 4628,
        4582, 4636, 4621, 4574, 4624,
        4563, 4651, 4642, 4586, 4621,
        4606, 4628, 4575, 4631, 4646,
        4600, 4594, 4661, 4568, 4611
    ]

    # req/sec samples from the second benchmark (30 runs)
    B1 = [
        4630, 4655, 4652, 4633, 4637,
        4661, 4625, 4680, 4647, 4639,
        4633, 4661, 4638, 4621, 4630,
        4682, 4703, 4665, 4652, 4648,
        4673, 4651, 4669, 4646, 4612,
        4654, 4651, 4619, 4637, 4620
    ]

    st.ttest_ind(A1, B1)

Output:

    Ttest_indResult(statistic=-4.855056212284194, pvalue=9.47100493260572e-06)

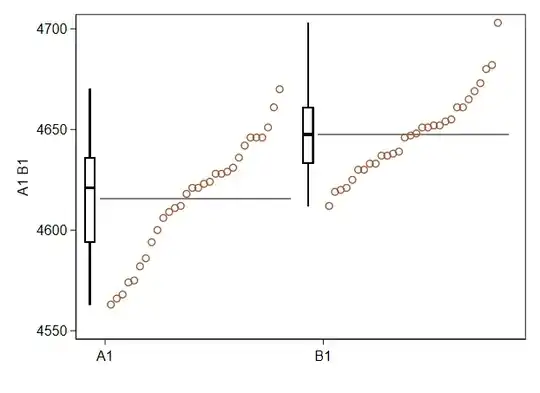

Why is the p-value 9.47100493260572e-06? I was expecting a value greater than 0.05, because the two samples look very similar and their means are relatively close, too: 4615 vs. 4647.
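To put numbers on "relatively close", here is a minimal sketch that recomputes the statistic by hand and compares it with what SciPy returns. It assumes that ttest_ind with default arguments uses the pooled, equal-variance (Student) form of the test. The variable names (pooled_var, std_err, t_manual, p_manual) are my own and not part of SciPy.

```python
import math
import statistics

import scipy.stats as st

A1 = [
    4670, 4646, 4612, 4618, 4646,
    4609, 4623, 4629, 4566, 4628,
    4582, 4636, 4621, 4574, 4624,
    4563, 4651, 4642, 4586, 4621,
    4606, 4628, 4575, 4631, 4646,
    4600, 4594, 4661, 4568, 4611,
]
B1 = [
    4630, 4655, 4652, 4633, 4637,
    4661, 4625, 4680, 4647, 4639,
    4633, 4661, 4638, 4621, 4630,
    4682, 4703, 4665, 4652, 4648,
    4673, 4651, 4669, 4646, 4612,
    4654, 4651, 4619, 4637, 4620,
]

n_a, n_b = len(A1), len(B1)
mean_a, mean_b = statistics.mean(A1), statistics.mean(B1)

# Sample variances (n - 1 in the denominator).
var_a, var_b = statistics.variance(A1), statistics.variance(B1)

# Pooled variance, assuming both groups share one underlying variance.
df = n_a + n_b - 2
pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df

# Standard error of the difference between the two means.
std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))

# The statistic is the mean difference measured in standard errors,
# not in raw req/sec.
t_manual = (mean_a - mean_b) / std_err

# Two-sided p-value from the t distribution with df degrees of freedom.
p_manual = 2 * st.t.sf(abs(t_manual), df)

result = st.ttest_ind(A1, B1)

print("mean difference:", mean_a - mean_b)
print("standard error: ", std_err)
print("manual t, p:    ", t_manual, p_manual)
print("scipy  t, p:    ", result.statistic, result.pvalue)
```

If that assumption holds, the manual and SciPy values should agree, so the test seems to judge the gap between the means relative to the standard error of that gap rather than relative to the means themselves.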

Am I missing something?