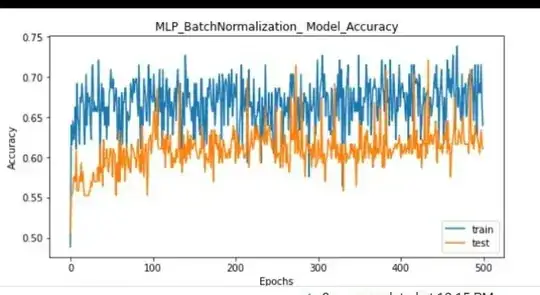

I have a binary classification problem with a dataset of N = 430 samples and 146 predictors. Both the validation and training accuracies, along with the losses, fluctuate. What could be the reason, and what solutions would you suggest?

Asif Munir

- Posting your code as figures is not helpful because people can't copy and paste it to try to reproduce it. If you write the code in a code block, your chances of receiving help will increase greatly. – Jxson99 Aug 19 '22 at 18:39

- Does this answer your question? What should I do when my neural network doesn't learn? – Stephan Kolassa Aug 19 '22 at 20:07

1 Answer

This question can be a little difficult to answer, as there are many reasons you could see this kind of behavior, but I'll note a couple of issues and a couple of possible solutions.

- The model is clearly overfitting. The binary cross-entropy on your training data drops to virtually zero after only a couple of epochs, while your test loss skyrockets. That gap is the classic sign of overfitting. Additionally, your test accuracy barely increases.

- Momentum and other optimization techniques lead to "fluctuation". Because of the way momentum is added to the optimizer, even when the model reaches a local minimum it will keep exploring "uphill" around that minimum to make sure there isn't a better direction to head. This (as well as a few other parameters related to momentum) is probably why you see this kind of fluctuation. In other words, it doesn't mean much: your model just continues to attempt to improve but isn't making any progress.
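To make the momentum point concrete, here is a minimal sketch on a toy problem, not your actual model. It assumes a one-dimensional quadratic loss and classical (heavy-ball) momentum; the function name `sgd_momentum` and the values `lr=0.1` and `beta=0.9` are illustrative choices of mine, not something taken from your code.

```python
def sgd_momentum(grad, x0, lr=0.1, beta=0.9, steps=50):
    """Run gradient descent with classical momentum on a 1-D function.

    Returns the list of visited points, starting with x0.
    """
    x, v = x0, 0.0
    path = [x]
    for _ in range(steps):
        # The velocity keeps a decaying memory of past gradients,
        # so the update can carry x past the minimum.
        v = beta * v - lr * grad(x)
        x = x + v
        path.append(x)
    return path


def loss(x):
    # Toy loss with a single minimum at x = 0 (an assumption for illustration).
    return x * x


def grad(x):
    return 2.0 * x


path = sgd_momentum(grad, x0=1.0)
losses = [loss(x) for x in path]

# Count the steps where the loss went up instead of down.
uphill_steps = sum(1 for a, b in zip(losses, losses[1:]) if b > a)
print("steps where the loss increased:", uphill_steps)
print("final distance from the minimum:", abs(path[-1]))
```

Even on this perfectly smooth loss, some steps move uphill because the accumulated velocity overshoots the minimum. With mini-batch noise on top, the curves from a real network will usually look noisier still.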

Okay, suggestions for improvement.

- Accept the results. Deep learning, despite popular belief, can't solve everything. It may be that this is simply how good the model can be given the problem space.

- Try to regularize the model. As I stated before, you are clearly overfitting. You could try various forms of regularization, such as dropout or L1 or L2 regularization. You might also need to fundamentally change the model structure. Right now you only have one dense layer, which limits the patterns your model can find to linear boundaries; stacking two smaller dense layers may help your model find better solutions.
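As a rough sketch of the regularization suggestion, the snippet below stacks two small dense layers with L2 weight penalties and dropout. I am assuming Keras (via TensorFlow) since your code is only available as an image; the helper name `build_model`, the layer width of 16, the L2 strength, and the dropout rate are all placeholder values you would need to tune, not recommendations derived from your data.

```python
from tensorflow import keras
from tensorflow.keras import layers, regularizers


def build_model(n_features=146, units=16, l2_strength=1e-3, dropout_rate=0.5):
    """Two small, regularized hidden layers followed by a sigmoid output.

    All default values are illustrative assumptions, not tuned settings.
    """
    model = keras.Sequential([
        layers.Input(shape=(n_features,)),
        # L2 penalty discourages large weights in each hidden layer.
        layers.Dense(units, activation="relu",
                     kernel_regularizer=regularizers.l2(l2_strength)),
        # Dropout randomly zeroes activations during training only.
        layers.Dropout(dropout_rate),
        layers.Dense(units, activation="relu",
                     kernel_regularizer=regularizers.l2(l2_strength)),
        layers.Dropout(dropout_rate),
        # Single sigmoid unit for binary classification.
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam",
                  loss="binary_crossentropy",
                  metrics=["accuracy"])
    return model
```

You would then call `build_model().fit(...)` with your own training and validation arrays. With only 430 samples and 146 predictors, keeping the hidden layers small is deliberate: fewer parameters leave less room to memorize the training set.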

Tanner Phillips
