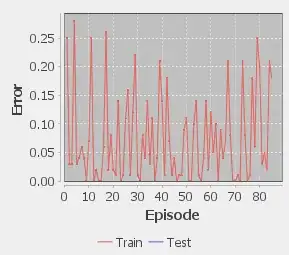

As a project, I've built a graphical neural network sandbox application that can draw a loss graph showing how well the network is learning during training. However, I'm getting some really odd results, shown above, and I can't figure them out.

The loss decreases over time, which tells me it is learning, but eventually the trend reverses and the loss increases as I feed it more samples. All of these are training samples, by the way, so this isn't simply overfitting. The samples are also well shuffled.

Is there a reason a pattern like this can occur? Or could it be caused by something wrong with my software, such as my implementation of backpropagation?
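One standard way to test the backpropagation half of this question is a numerical gradient check: compare the gradients that backprop produces with central finite differences of the loss. Below is a minimal sketch, assuming a hypothetical network with 2 inputs (X and Y), one tanh hidden layer, a linear output, and squared-error loss, since the sandbox's actual architecture isn't given. The names `backprop`, `numerical_grad`, and `max_relative_error` are illustrative only. A relative error far below 1 (for example under 1e-5) usually means the analytic gradients match; a large discrepancy points to a bug in the backward pass.

```python
import math
import random

H = 3     # hidden units (hypothetical size)
N_IN = 2  # inputs: X and Y

def unpack(theta):
    """Split a flat parameter list into w1 (H x N_IN), b1 (H), w2 (H), b2 (scalar)."""
    w1 = [theta[j * N_IN:(j + 1) * N_IN] for j in range(H)]
    off = H * N_IN
    b1 = theta[off:off + H]
    w2 = theta[off + H:off + 2 * H]
    b2 = theta[off + 2 * H]
    return w1, b1, w2, b2

def forward(theta, x):
    """Tanh hidden layer followed by a single linear output unit."""
    w1, b1, w2, b2 = unpack(theta)
    h = [math.tanh(sum(w1[j][i] * x[i] for i in range(N_IN)) + b1[j]) for j in range(H)]
    y = sum(w2[j] * h[j] for j in range(H)) + b2
    return h, y

def loss(theta, x, t):
    _, y = forward(theta, x)
    return 0.5 * (y - t) ** 2

def backprop(theta, x, t):
    """Analytic gradient of the loss, returned in the same flat layout as theta."""
    _, _, w2, _ = unpack(theta)
    h, y = forward(theta, x)
    dy = y - t                                                # dL/dy
    dz = [dy * w2[j] * (1.0 - h[j] ** 2) for j in range(H)]  # dL/d(pre-activation)
    g_w1 = [dz[j] * x[i] for j in range(H) for i in range(N_IN)]
    g_w2 = [dy * h[j] for j in range(H)]
    return g_w1 + dz + g_w2 + [dy]

def numerical_grad(theta, x, t, eps=1e-5):
    """Central finite differences, one parameter at a time."""
    grad = []
    for k in range(len(theta)):
        plus = list(theta)
        plus[k] += eps
        minus = list(theta)
        minus[k] -= eps
        grad.append((loss(plus, x, t) - loss(minus, x, t)) / (2 * eps))
    return grad

def max_relative_error(a, b):
    return max(abs(p - q) / max(1e-8, abs(p) + abs(q)) for p, q in zip(a, b))

random.seed(0)
theta = [random.uniform(-1, 1) for _ in range(H * N_IN + 2 * H + 1)]
x, t = [0.3, -0.7], 0.5
err = max_relative_error(backprop(theta, x, t), numerical_grad(theta, x, t))
print(f"max relative error: {err:.2e}")
```

The same comparison can be run against the sandbox's own gradient code by swapping in its forward and backward functions.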

A learning rate of 0.1 makes it happen later (around the 80-sample mark), and, oddly, a learning rate of 0.01 makes it happen sooner (around the 50-sample mark).

– Lemniscate Jun 06 '22 at 16:01

I'm feeding it a three-dimensional regression problem: X and Y are the inputs, and it learns to predict Z.

– Lemniscate Jun 06 '22 at 16:56
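As a reference curve for the loss graph, here is a minimal sketch of per-sample (online) training on an X, Y → Z regression task, run at the two learning rates mentioned in the question. It assumes a hypothetical noise-free linear target `z = 2x - 3y + 1` and a plain linear model in place of the sandbox's network; `train_online` and `moving_average` are illustrative names. It records each sample's loss before the weight update and smooths the result with a moving average, because raw per-sample losses are noisy.

```python
import random

def target(x, y):
    # Hypothetical ground-truth surface for the X, Y -> Z task.
    return 2.0 * x - 3.0 * y + 1.0

def train_online(lr, n_samples=200, seed=0):
    """Per-sample SGD on a linear model z_hat = a*x + b*y + c.

    Returns the squared-error loss of each sample, measured *before*
    the update on that sample, as a live loss graph would plot it.
    """
    rng = random.Random(seed)
    data = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n_samples)]
    a = b = c = 0.0
    losses = []
    for x, y in data:
        err = (a * x + b * y + c) - target(x, y)
        losses.append(0.5 * err ** 2)
        # Gradient of 0.5*err^2 with respect to a, b, c is err*x, err*y, err.
        a -= lr * err * x
        b -= lr * err * y
        c -= lr * err
    return losses

def moving_average(values, window=20):
    """Trailing moving average; early points average over fewer samples."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1):i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

for lr in (0.1, 0.01):
    smooth = moving_average(train_online(lr))
    print(f"lr={lr}: smoothed loss at sample 20 = {smooth[19]:.4f}, at sample 200 = {smooth[-1]:.4f}")
```

On a target the model can represent exactly, like this one, the smoothed curve should trend downward at both rates (faster at 0.1). Running the sandbox's own training loop on a similarly simple target is one way to check whether the upturn still appears.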