In his celebrated talk on randomness and pseudorandomness

https://youtu.be/Jz1UoAWD80Q?t=366

legendary mathematician Avi Wigderson makes the powerful statement that sampling is perhaps the most important use of randomness.

He skips over this rather elementary slide quickly, but I am trying to understand the numbers behind it using the weak law of large numbers or Chebyshev's inequality, and I am not sure I am getting it.

He suggests that 2000 i.i.d. samples would predict the result of an election in a population of 280 million to within +/-2 percentage points with 99% probability. He relates this to the weak law of large numbers, but I can't see a concrete connection.
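To make the numbers concrete, here is a minimal sketch of what Chebyshev's inequality and a normal (central limit) approximation give, assuming i.i.d. draws from a two-candidate race with true vote share p, taken at the worst case p = 1/2. The function names are my own, chosen for illustration. The population size of 280 million never enters: under i.i.d. sampling only the sample size n and the tolerance eps matter.

```python
import math

def chebyshev_failure_bound(n, eps, p=0.5):
    """Chebyshev upper bound on P(|p_hat - p| >= eps) for n i.i.d. Bernoulli(p) draws."""
    variance_of_mean = p * (1 - p) / n  # Var(p_hat) = p(1-p)/n
    return min(1.0, variance_of_mean / eps**2)

def normal_approx_coverage(n, eps, p=0.5):
    """Normal (CLT) approximation to P(|p_hat - p| < eps)."""
    std_err = math.sqrt(p * (1 - p) / n)
    return math.erf(eps / (std_err * math.sqrt(2)))

n, eps = 2000, 0.02
print(chebyshev_failure_bound(n, eps))  # roughly 0.31
print(normal_approx_coverage(n, eps))   # roughly 0.93
```

Under these assumptions Chebyshev only guarantees a failure probability of at most about 0.31, and the normal approximation puts the coverage near 93% at p = 1/2, so the 99% on the slide looks like a rounded figure rather than something these two estimates deliver directly.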

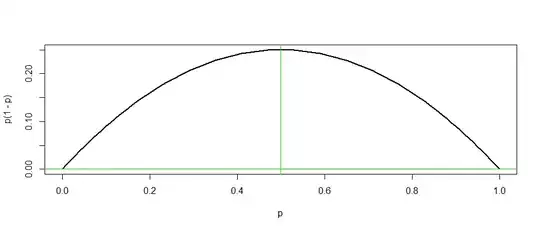

1/Sqrt(2000) is about 2%, which brings up another thing that I have never understood: why does the error in direct sampling go as 1/Sqrt(N)? My feeling is that the error in the standard deviation should go as 1/Sqrt(N), but the error in the mean should scale as 1/N.
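For reference, the scaling of the error in the mean can be checked numerically. The variance of an average of N independent draws, each with variance sigma^2, is sigma^2/N, so its typical error is sigma/Sqrt(N). Below is a small simulation sketch, assuming Bernoulli(1/2) votes; the helper name, trial count, and seed are arbitrary choices for illustration.

```python
import math
import random

def rms_error_of_mean(n, p=0.5, trials=400, seed=0):
    """Root-mean-square error of the sample mean of n Bernoulli(p) draws."""
    rng = random.Random(seed)
    total_sq_err = 0.0
    for _ in range(trials):
        # Count successes in one simulated poll of size n.
        successes = sum(rng.random() < p for _ in range(n))
        total_sq_err += (successes / n - p) ** 2
    return math.sqrt(total_sq_err / trials)

for n in (100, 400, 1600):
    observed = rms_error_of_mean(n)
    predicted = math.sqrt(0.5 * 0.5 / n)  # sigma / sqrt(n) with sigma = 1/2
    print(n, round(observed, 4), round(predicted, 4))
```

Quadrupling N should roughly halve the observed error, which is what 1/Sqrt(N) scaling predicts; 1/N scaling would instead cut it by a factor of four.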

Apologies in advance if these questions are too simple!