I have a synthetic measurement model that looks like this,

$$ x(t) = e^{j u t \frac{4}{\lambda}}, $$ $\lambda$ is a constant.

$$ z(t) = x(t) + n(t) $$

The quantity $j = \sqrt{-1}$, the imaginary unit. The noise $n(t)$ added to this signal has two components as well, one real and one imaginary part.

Where $n$ is a zero mean white Gaussian noise with a variance of $\sigma_n^2$. $$ n = \frac{1}{\sqrt{2}} \left( \mathcal{N}(0, \sigma_n^2) + j \mathcal{N}(0, \sigma_n^2) \right) $$

From this measurement model, I take only some measurements for analysis of the MCMC method.

I want to estimate the value of $u$ from this model. The noise $n$ has both a real and an imaginary part. I assume that the noise variance $\sigma_n$ is known already.

I use a Gaussian prior for $u$. The parameter $u$ is not complex; it is a real quantity.

$$ p(u) = \mathcal{N}(\mu_u, \sigma_u) $$

The likelihood I take as,

$$ \log(p(z|u)) = -\frac{1}{2} (\mathbf{z} - \mathbf{x})^T K^{-1} (\mathbf{z} - \mathbf{x}) $$

Where $\mathbf{z}$ and $\mathbf{x}$ are vectors with the dimension of $t$. The value $K$ is defined as,

$$ K = \sigma^2 \times I, $$ $I$ is the identity matrix. So, $K$ is a real matrix dealing only with the known noise variance.

I separately compute the real and imaginary parts of the likelihood as,

$$ \log(p(z_{Real}|u)) = -\frac{1}{2} (real(\mathbf{z}) - real(\mathbf{x}))^T K^{-1} (real(\mathbf{z}) - real(\mathbf{x})) $$

$$ \log(p(z_{Imag}|u)) = -\frac{1}{2} (imag(\mathbf{z}) - imag(\mathbf{x}))^T K^{-1} (imag(\mathbf{z}) - imag(\mathbf{x})) $$

The problem I face is that the likelihood not only has a real, but also has a imaginary component. In the acceptance criteria of Matropolis Haistings algorithm, I now add the real and imaginary likelihoods in log scale. Code is given later in this post.

If the ground truth has $1000$ samples, only $50$ samples are available as measurements. The samples are placed in a way that there is a definite gap between sets of samples. For example, $5$ samples placed together in time $t$, there is a huge time gap of $95$ sample space and again another $5$ samples are available. The samples in time $t$ available can be represented as this.

$$t = N.k.dT + \sum_{i = 1}^{M}i.dT$$

Here, $N = 100$, $M = 5$ and $k \; \epsilon \; [0, 1, 2, ....]$.

A working example is given below.

%% HD signal generator

close all;

clear;

SNR_db = 20; % Noise is added with a given SNR in dB

SNR = 10^(SNR_db/10); % SNR in linear scale

lambda = 1;

r0 = 0;

u = 1; % Ground truth u

N = 100;

M = 5;

K = linspace(1, 10, 10);

Nt = K(end) * N; % Number of Truth samples (N * M * 20)

dT = 0.1; % t step

r(1) = r0;

Z(1) = exp(1j * 4 * pi/lambda .* r(1));

for i = 2:Nt

r(i) = r(i - 1) + u * dT;

Z(i) = exp(1j * 4 * pi/lambda .* r(i)); % Ground truth samples

end

Noise = sum(abs(Z).^2)./(Nt .* SNR); % Finding Noise power and ...

%noise variance with the data and given SNR

sigma_n = sqrt(Noise);

Z_model = Z + sigma_n .* (randn(1, Nt) + 1j .* randn(1, Nt))./sqrt(2); % Adding complex noise

%% Available samples [Measurement model with only a few samples]

Z_model_re = reshape(Z, [N K(end)]);

Z_avail = Z_model_re(1:M, :);

Z_avail_vec_ = reshape(Z_avail, [M * K(end) 1]); % available samples for measurements

Z_avail_vec = Z_avail_vec_ + sigma_n .* (randn(1, length(Z_avail_vec_)).'+ 1j .* randn(1, length(Z_avail_vec_)).')./sqrt(2);

for k = 1:K(end)

t(:, k) = (k - 1) * N + [1:M]; % This for loop calculates the t instances of the available samples

end

t_avail = reshape(t, [length(Z_avail_vec) 1]) .* dT; % vectorize the available time instances

%% MCMC parameters in a structure var

% E is the structure having options for MCMC

E.n = 1; % Number of variables to be estimated

E.E0 = [1.3]; % Initial value of u

E.sig = [50000]; % Initial value of the Std of the prior of u

%% This section has MCMC algorithm

No_iter = 10000; % Number of iterations

[accepted, rejected, itern, E_new] = MHu(E, No_iter, Z_avail_vec, t_avail, r0, sigma_n);

%% Plot MCMC outputs

for i = 1:E.n

figure(1000+i);plot(rejected(:, i)); hold on; plot(accepted(:, i)); % Accepted and rejected values

burnin = round(0.25 * length(accepted(:, i))); % 25% of the data is taken as burnin

figure(2000+i); histogram(accepted(burnin+1:end, i), 100); % histogram of accepted

burninrej = round(0.25 * length(rejected(:, i))); % Burnin for rejected

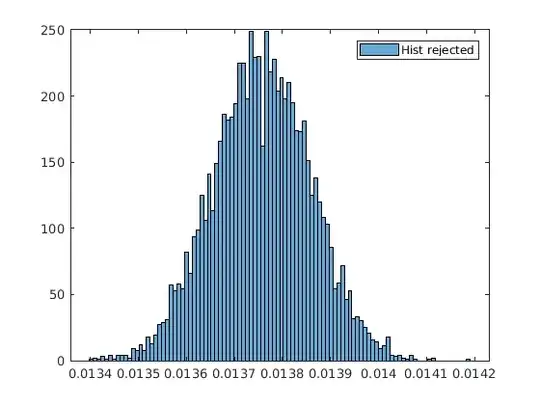

figure(3000+i); histogram(rejected(burninrej+1:end, i), 100); % Burnin for accepted

mu_re = mean(accepted(burnin+1:end, i)); % Mean of accepted

rej_re = mean(rejected(burnin+1:end, i)); % Mean of rejected

end

function [accepted, rejected, itern, E] = MHu(E, iter, data, t_avail, r0, sigma_n)

%% Inputs:

% E - Struct of MCMC options

% iter - number of iterations

% data - data available

% t_avail - t instances of the data

% r0 - start position of the target - assumed to be known

% sigma_n - noise standard deviation - assumed to be known

%% Outputs:

% accepted - accpetd values

% rejected - rejected values

% itern - iterations at which we accept a value

% E - the new Struct of MCMC options after processing - A new E.sig is

% updated

u = E.E0; % save the initial value in u

accepted = zeros(1, E.n);

rejected = zeros(1, E.n);

itern = 0;

for i = 1:iter

disp(i);

[u_new, punew, pu] = TMu(u, E.sig); % u_new is a new sample drawn for u

% prior probability of u_new

% prior probability of u

u_lik = LLu(u, data, t_avail, r0, sigma_n, pu); % Likelihood of u wth data

u_new_lik = LLu(u_new, data, t_avail, r0, sigma_n, punew); % Likelihood of u_new with data

%% Acceptance Logic

if (acceptance((u_lik), (u_new_lik)))

accepted = [accepted; u_new];

itern = [itern i];

u = u_new;

else

rejected = [rejected; u_new];

end

end

end

function [unew, punew, pu] = TMu(u, Esigma)

for i = 1:length(Esigma)

unew(i) = normrnd(u(i), Esigma(i), 1); % Draw a new value

pu(i) = normpdf(u(i), u(i), Esigma(i)); % Find the prior probability of x

punew(i) = normpdf(unew(i), u(i), Esigma(i)); % Find the prior probability of y

end

end

function ret = LLu(u, data, t_avail, r0, sigma_n, pu)

lambda = 0.03;

r(1) = r0; % initial position of the scatterer

Z_model(1) = exp(1j .* 4 .* pi/lambda .* r(1)); % First sample echo model

for l = 2:length(t_avail)

r(l) = r(l - 1) + u .* (t_avail(l) - t_avail(l - 1));

% This for loop calculates the model

% samples from the available t steps $t_avail$

Z_model(l) = exp(1j .* 4 .* pi/lambda .* r(l));

end

Nt = length(data);

K = eye(Nt, Nt) .* sigma_n.^2; % Noise diagnoal matrix with size length(t_avail) x length(t_avail)

%% Real and imaginary part likelihood

ret_re = log(pu) -Nt/2 .* log(2*pi) - 1/2 * (real(data) - real(Z_model).').' * inv(K) * (real(data) - real(Z_model).');

ret_im = log(pu) -Nt/2 .* log(2*pi) - 1/2 * (imag(data) - imag(Z_model).').' * inv(K) * (imag(data) - imag(Z_model).');

ret = ret_re + ret_im; % Total likelihood (multiplication of real and imaginary likelihoods in linear scale)

end

function ret = acceptance(u, u_new)

alpha = exp(u_new - u);

rnd = rand;

if alpha > rnd

ret = 1;

else

ret = 0;

%accept = rand;

%ret = (accept < exp(x_new - x));

% ret = 0;

end

end

Total truth samples: 1000 Total measurement samples: 50 True u : 1 (as per the code above)

I start with a very big proposal standard deviation (E.sig in the code as 50000). The problem is that if I run $10000$ iterations, only a very few samples are accepted like only $10$. It doesn't converge. As the E.sig is super high, does it take a long time to converge? If you see the rejected values, it seems to oscillate at some point with a constant mean and feels like it is stuck at a local minima probably?

The plot of accepted and rejected values are shown below. Here, the accepted values are only 12 so they are not visible. The pattern for the rejected values kind of gives an impression that it is unable to reach a good value for $u$. So, I feel the final value of accepted values could be a local minima and getting out of this point is harder for the algorithm. I have put a histogram of rejected and accepted values as well. The final value of $u$ is $6.26 \times 10^{4} $ and it occurs at iteration number $4878$ which is much before $10000$.

Next I show a case with E.sig = 1. However, in a practical scenario, one would like to start at a higher value. Is there a way to avoid this? Here also the acceptance rate is so low (only 10 out of 10000).

UPDATE: With answer 2

I have tried the values of the second answer.

$\lambda = 1$, $u_{true} = 0.01$, $\sigma_n^2 = 0.01$, $dt = 0.1$. Start value of $u_{start} = 0.014$ and the proposal distribution of $u$ has a standard deviation of $u_{std} = 0.0001$. The results are below.

I have $110$ accepted values out of a $10000$ iterations.

I also plot a likelihood in log for various values of $u$ as in the second answer. It looks similar but not smooth.

randinstead of two. – Sextus Empiricus Apr 20 '22 at 09:45real(p(z, u_2))and that seems like you take the real part of the complex function, but that is different from dealing with the real and imaginary part of $z$ seperately. – Sextus Empiricus Apr 20 '22 at 10:37pknow that you are dealing with the real part or the imaginary part? It is namely not just the vecyor $z$ that you take the real/imaginary part of, but also the vector $x$.... – Sextus Empiricus Apr 20 '22 at 11:49E.sig. Your histogram shows proposals in the order of $10^5$ which is due to that largeE.sigand that, of course, results in a lot of rejections because you are sampling in a region with low probability density. (with a smallerE.sigthe problem will be different). – Sextus Empiricus Apr 22 '22 at 10:08E.sig. I know for a likelihood having a very low periodicity,E.sighas to be really small and the starting point also has to be closer to the actual solution. I want to avoid it. I want to start at a random point and converge to the global maxima. So, that is why I keptE.sigtoo high just to check if it comes out of local maxima points. Sadly, probably it needs like infinite iterations or so. – CfourPiO Apr 22 '22 at 10:18