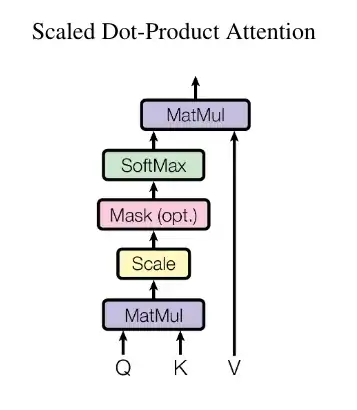

In scaled dot-product attention, they multiply a "softmaxed" matrix (which has shape (sequence_length, sequence_length), I think?) by the V matrix, as shown above.
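A minimal NumPy sketch of the shapes involved, assuming a single head, no batch dimension, no masking, and arbitrary random inputs and sizes. In general the softmaxed matrix has shape (query_length, key_length); it is (sequence_length, sequence_length) in self-attention, where queries and keys come from the same sequence.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability; this does not change the result
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
d_k, d_v = 8, 6  # key and value dimensions (arbitrary)

# Self-attention: queries and keys come from the same length-4 sequence
x_len = 4
Q = rng.standard_normal((x_len, d_k))
K = rng.standard_normal((x_len, d_k))
A_self = softmax(Q @ K.T / np.sqrt(d_k))      # (4, 4): softmax over each row

# Cross-attention (hypothetical sizes): 3 queries attend over 5 keys/values
Q2 = rng.standard_normal((3, d_k))
K2 = rng.standard_normal((5, d_k))
V2 = rng.standard_normal((5, d_v))
A_cross = softmax(Q2 @ K2.T / np.sqrt(d_k))   # (3, 5)
out_cross = A_cross @ V2                       # (3, d_v): one output row per query

print(A_self.shape, A_cross.shape, out_cross.shape)  # (4, 4) (3, 5) (3, 6)
```

Each row of the softmaxed matrix sums to 1 (up to floating-point error), because the softmax is taken over the key axis.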

What does the second purple matmul actually scale, explained algebraically?
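Written out in standard notation (a sketch; $n$ is the sequence length, $d_k$ the key dimension and $d_v$ the value dimension, names assumed here), with $A$ the softmaxed matrix:

```latex
A = \operatorname{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right) \in \mathbb{R}^{n \times n},
\qquad A_{ij} \ge 0, \qquad \sum_{j=1}^{n} A_{ij} = 1

(AV)_{i,f} = \sum_{j=1}^{n} A_{ij}\, V_{j,f}
\quad\Longleftrightarrow\quad
(AV)_{i,:} = \sum_{j=1}^{n} A_{ij}\, V_{j,:}
```

So the second matmul does not rescale individual features: row $i$ of the output is a weighted average of the value vectors $V_{j,:}$ of all positions $j$, with the weights taken from row $i$ of $A$. The $1/\sqrt{d_k}$ factor is the separate "scaled" part of the name and acts on the scores before the softmax.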

Also, say I have a matrix a of shape (home_count, furniture_type_count) that stores the furniture I need for every home, and a matrix b of shape (store_count, furniture_type_count) that stores the price of every furniture type at every store. Then, if I'm not wrong, a*transpose(b) has shape (home_count, store_count) and gives, for each home, the total price I would pay if I bought all of its furniture at each store. But when building layers in deep learning, it gets very hard to understand what such a multiplication actually does. Is there a good way to understand these operations? For example, how would you explain the purple matmul scale mechanism used in the attention mentioned above?
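A small NumPy check of the furniture example, using made-up quantities and prices (2 homes, 3 furniture types, 2 stores; all numbers are hypothetical). It compares `a @ b.T` with an explicit loop, and with `np.einsum`, whose subscript string names each axis and so makes the summed axis visible.

```python
import numpy as np

# Hypothetical data: a[h, f] = how many items of furniture type f home h needs
a = np.array([[1, 2, 0],
              [0, 1, 4]])
# Hypothetical data: b[s, f] = price of furniture type f at store s
b = np.array([[100, 20, 5],
              [ 80, 25, 4]])

totals = a @ b.T  # (home_count, store_count)

# Same result as an explicit sum over the shared furniture axis f
loop = np.zeros((a.shape[0], b.shape[0]), dtype=a.dtype)
for h in range(a.shape[0]):
    for s in range(b.shape[0]):
        loop[h, s] = sum(a[h, f] * b[s, f] for f in range(a.shape[1]))

# einsum spells out the axes: f appears in both inputs but not the output,
# so it is the axis that gets summed away
named = np.einsum("hf,sf->hs", a, b)

print(totals.tolist())  # [[140, 130], [40, 41]]
```

One way to read any matmul: the axis the two inputs share is summed over and disappears. Here that is the furniture type. In `QK^T` it is the feature axis `d_k`, giving one similarity score per (query, key) pair; in the second matmul (softmaxed matrix times `V`) it is the key position, which is why that product mixes positions rather than features.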

EDIT: By 'scale' in the picture I meant 'weight'.

the first multiplies query Q with keys K to produce attention weights

Sorry, I wasn't being very clear: by 'scaling' I actually meant 'weighting'. What I wanted to ask is: along which dimension does this weighting occur? Does it apply different weights along the sequence, or just weight different features for the entire sequence, or both? – IKnowHowBitcoinWorks Apr 04 '22 at 09:30
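One way to check the "which dimension" question numerically, as a sketch assuming a single head, no batch dimension, no masking, and arbitrary random inputs: compare each output row with an explicit weighted sum over positions, and each output column with the same weight matrix applied to one feature at a time.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(1)
n, d_k, d_v = 5, 8, 3  # sequence length, key dim, value dim (arbitrary)
Q = rng.standard_normal((n, d_k))
K = rng.standard_normal((n, d_k))
V = rng.standard_normal((n, d_v))

A = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)  # (n, n): weights over positions
out = A @ V                                    # (n, d_v)

# Row view: output position i is a weighted average of the value vectors V[j]
i = 2
row_i = sum(A[i, j] * V[j] for j in range(n))

# Column view: every feature f is mixed with the SAME matrix A.
# Nothing in A is indexed by f, so features are not re-weighted individually.
col_f = [A @ V[:, f] for f in range(d_v)]
```

Both views reproduce `out`. Within one head, the weights differ per (query position, key position) pair, but the same weight multiplies all `d_v` features of a given value vector. In the standard Transformer formulation, feature-dependent behaviour comes from the learned projections and from multi-head attention, where each head computes its own weight matrix over its own projected features.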