I have fit two multiple linear regression models to the same data. The two models differ only in that a few variables are dropped from the second one.

Model_A includes all four independent variables (A, B, C, D):

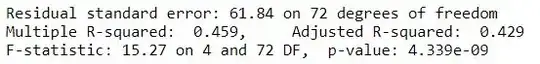

Model_B includes only A and B, since C and D were found to be insignificant:

As you can see, the F-statistic has increased but the adjusted R^2 has decreased. My understanding is that the F-statistic and the adjusted R^2 should increase together. Can anyone explain these results?
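For reference, here is a minimal sketch of the standard textbook formulas for the overall F-statistic and adjusted R^2 of an OLS model with an intercept, `k` predictors and `n` observations. It also includes the partial F-statistic for dropping predictors from a nested model. The numbers below are hypothetical and chosen only for illustration. They are not the results from the models above, and the helper names are my own.

```python
def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R^2 for an OLS model with an intercept and k predictors on n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2: float, n: int, k: int) -> float:
    """Overall F-statistic testing H0: all k slope coefficients are zero."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


def partial_f(r2_full: float, r2_reduced: float, n: int, k_full: int, k_reduced: int) -> float:
    """Partial F-statistic for dropping (k_full - k_reduced) predictors from the full model."""
    q = k_full - k_reduced
    return ((r2_full - r2_reduced) / q) / ((1 - r2_full) / (n - k_full - 1))


# Hypothetical values for illustration only (NOT the actual Model_A / Model_B output).
n = 50
r2_a, k_a = 0.60, 4  # full model: A, B, C, D
r2_b, k_b = 0.57, 2  # reduced model: A, B

for name, r2, k in [("Model_A", r2_a, k_a), ("Model_B", r2_b, k_b)]:
    print(f"{name}: F = {overall_f(r2, n, k):.2f}, adj R^2 = {adjusted_r2(r2, n, k):.4f}")

print(f"partial F for dropping C, D: {partial_f(r2_a, r2_b, n, k_a, k_b):.2f}")
```

With these made-up numbers the overall F rises when C and D are dropped, because the same explained variance is now spread over fewer numerator degrees of freedom. The adjusted R^2 falls. In general, the reduced model has the lower adjusted R^2 exactly when the partial F for the dropped variables exceeds 1. That can happen even when that partial F is too small to be significant.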