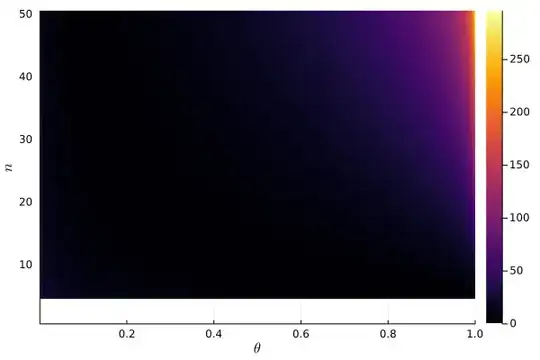

I'm trying to make sense of a plot of the joint negative log-likelihood for the Binomial model $\mathrm{Binomial}(n,p)$ with unknown $n$ and $p$, evaluated at $y=5$. Note that the white region corresponds to a negative log-likelihood of $+\infty$ (i.e. a log-likelihood of $-\infty$), because $n$ must be at least $y=5$. Also, I set an arbitrary cutoff at $n=50$.
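For reference, here are the plotted quantities written out with the support constraint $n \ge y$ that produces the white region (here $y = 5$, $n$ a positive integer, $p \in [0, 1]$):

$$
L(n, p \mid y) =
\begin{cases}
\binom{n}{y}\, p^{y} (1-p)^{n-y}, & n \ge y,\\
0, & n < y,
\end{cases}
\qquad
-\log L(n, p \mid y) =
\begin{cases}
-\log\binom{n}{y} - y \log p - (n-y)\log(1-p), & n \ge y,\\
+\infty, & n < y.
\end{cases}
$$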

The joint likelihood (still evaluated at $y=5$) has a clear maximizer, whereas the negative log-likelihood does not appear to have a clear minimizer.
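One way to check this without relying on the heatmap colors is to locate both optima directly on the same grid. The sketch below is plain Julia with no packages, so it writes the Binomial log-likelihood by hand; `xlogy` and `binom_loglik` are hypothetical helper names introduced only for this illustration, not functions from Distributions.jl.

```julia
# x * log(p), using the convention 0 * log(0) = 0 (hypothetical helper)
xlogy(x, p) = iszero(x) ? 0.0 : x * log(p)

# log L(n, θ | y) = log C(n, y) + y log θ + (n - y) log(1 - θ), and -Inf when n < y
# (hypothetical helper written for this sketch)
function binom_loglik(n::Integer, θ::Real, y::Integer)
    n < y && return -Inf                    # outside the support: zero likelihood
    return log(binomial(n, y)) + xlogy(y, θ) + xlogy(n - y, 1 - θ)
end

y  = 5
ns = 1:50                                   # same arbitrary cutoff at n = 50
θs = range(0.0, stop=1.0, length=1000)

# rows index n, columns index θ (same layout as the matrix A in the question)
L   = [exp(binom_loglik(n, θ, y)) for n in ns, θ in θs]   # likelihood grid
NLL = [-binom_loglik(n, θ, y)     for n in ns, θ in θs]   # negative log-likelihood grid

imax = argmax(L)     # CartesianIndex: (index into ns, index into θs)
imin = argmin(NLL)

println("argmax of likelihood:          n = ", ns[imax[1]], ", θ = ", θs[imax[2]])
println("argmin of neg. log-likelihood: n = ", ns[imin[1]], ", θ = ", θs[imin[2]])
println("largest finite NLL on the grid: ", maximum(filter(isfinite, NLL)))
```

The `xlogy` helper is there so that the corner $n = y$, $p = 1$ evaluates to a log-likelihood of exactly 0 instead of `NaN` from `0 * log(0)`.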

Is it correct to say that this is the case because the joint likelihood is not log-concave?

Julia code to reproduce the plots above.

```julia
using Plots, StatsPlots, Distributions, LaTeXStrings, Random

Random.seed!(1994)

mkpath("plots")  # savefig fails if the output directory does not exist

# joint likelihood of n and θ
begin
    ns = 1:50                                  # arbitrary cutoff at n = 50
    θs = range(0., stop=1., length=1000)
    A = zeros(length(ns), length(θs))          # rows: n, columns: θ
    for (i, n) in enumerate(ns), (j, θ) in enumerate(θs)
        A[i, j] = pdf(Binomial(n, θ), 5)       # zero whenever n < 5
    end
    # passing the grids makes the axes show θ and n directly
    heatmap(θs, ns, A, xlabel=L"\theta", ylabel=L"n")
    savefig("plots/joint_likelihood.png")
end

# joint negative log-likelihood of n and θ
begin
    ns = 1:50
    θs = range(0., stop=1., length=1000)
    A = zeros(length(ns), length(θs))
    for (i, n) in enumerate(ns), (j, θ) in enumerate(θs)
        A[i, j] = -loglikelihood(Binomial(n, θ), 5)   # +Inf whenever n < 5
    end
    heatmap(θs, ns, A, xlabel=L"\theta", ylabel=L"n")
    savefig("plots/joint_neg_loglikelihood.png")
end
```