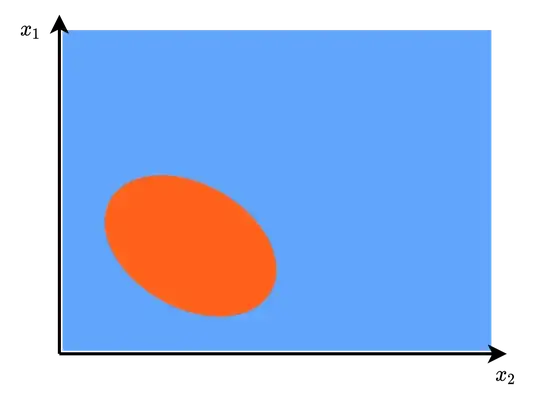

Suppose you are trying to perform two-class classification on faulty and non-faulty machinery data, where each example in the dataset is represented using the feature vector $\mathbf{x} = [x_1 \ x_2]^T$. This could be done as shown below:

Here, the faulty machinery data is represented by the orange area, and the non-faulty machinery data represented by the blue area. Suppose that the machines in question are rarely ever faulty, such that there are so many different examples of non-faulty machinery, but very few examples of faulty machinery. Given that you have trained on the data shown above, it is possible that you observe an example of non-faulty machinery that you mis-classify as faulty:

Again, the reason that this happens is because the set of all possible feature vectors representing non-faulty machinery is just too big. It is not possible to capture all of them and train on them. You could argue that you just need to collect more data and train on that, but what you would end up doing is this:

This, by the way, can be done using a neural network, for example, and the act of drawing these lines is the basic idea behind discriminative modelling.

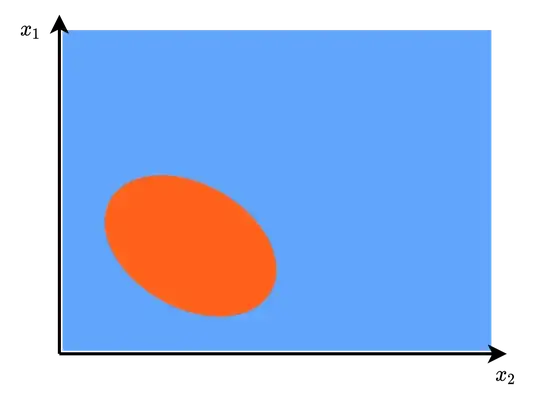

However, why bother collecting so much data and creating a very complex model that can draw all of these lines, when you can just try to draw the shape of the faulty data like this?

In the figure above, the orange area is the faulty machinery data that you collected, while the rest of the feature space is assumed to represent non-faulty machinery. This is the basic idea behind generative modelling, where, instead of trying to draw a line that splits the feature space, you instead try to estimate the distribution of the faulty data to learn what it looks like. Then, given a new test vector $\mathbf{x}$, all you need to do is to measure the distance between the center of the distribution of the faulty data and this new test vector $\mathbf{x}$. If this distance is greater than a specific threshold, then the test vector is classified as non-faulty. Otherwise, it is classified as faulty. This is also one way of performing anomaly detection.