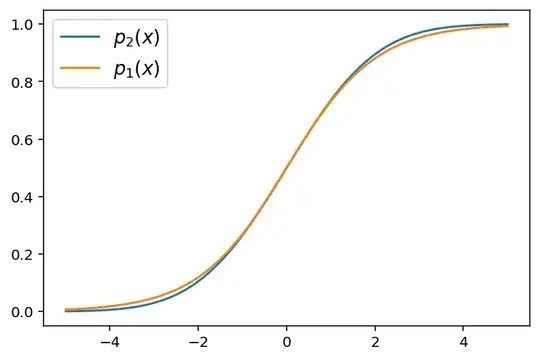

Imagine an experiment, in which an observer has to discriminate between two stimulus categories at different contrast levels $|x|$. As $|x|$ becomes lower, the observer will be more prone to making perceptual mistakes. The stimulus category is coded in the sign of $x$. I'm interested in the relationship between two different ways of modeling the observer's "perceptual noise" based on their choices in a series of stimulus presentations.

The first way would be to fit a logistic function

$ p_1(x) = \frac{1}{1+e^{-\beta\cdot x}} $

where $p_1(x)$ is the probability to choose the stimulus category with positive signs ($S^+$). Here, $\beta$ would reflect the degree of perceptual noise.

A second way would be to assume that the observer has Gaussian Noise $\mathcal{N}(0,\sigma)$ around each observation of $x$ and then compute the probability to choose $S^+$ by means of the cumulative probability density function as follows:

$ p_2(x) = \frac{1}{\sigma\sqrt{2\pi}}\int\limits_{-\infty}^{x}e^{-\frac{z^2}{2\sigma^2}} dz $

In this case, $\sigma$ would be an estimate of the perceptual noise.

I have a hunch that both these approaches are intimately related, but I'm not sure how. Is it an underlying assumption of the logistic function that the noise is normally distributed? Is there a formula that describes the relationship between $\beta$ of $p_1(x)$ and $\sigma$ of $p_2(x)$? Are, in the end, $p_1(x)$ and $p_2(x)$ essentially identical and could $p_1$ be derived from $p_2$?