Preliminary comments

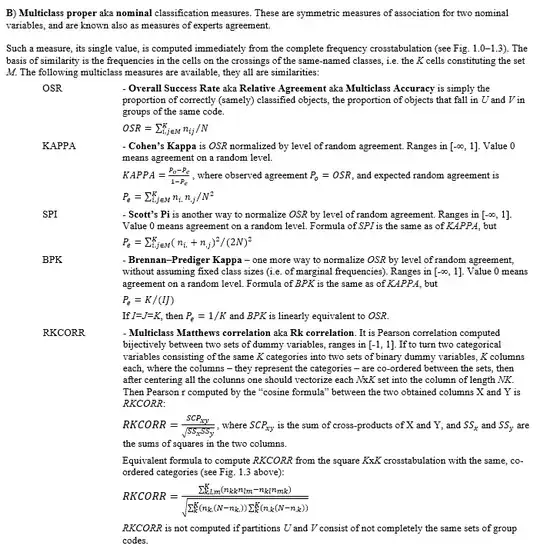

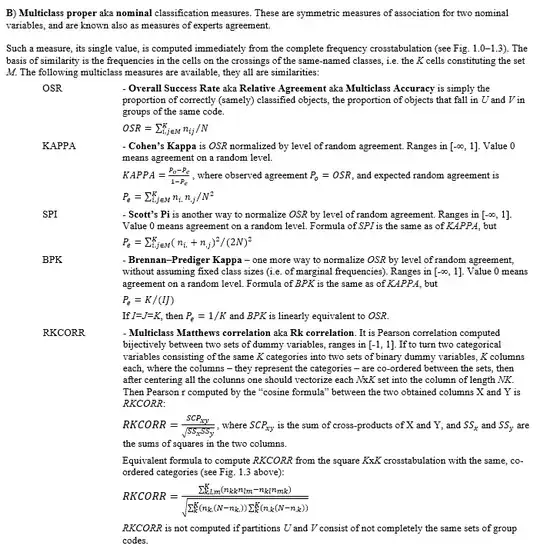

Cohen's Kappa is a multiclass classification agreement measure. It is Multiclass Accuracy measure (aka OSR) "normalized" or "corrected" for the chance agreement baseline. There exist other alternatives how to do such "correction" - for example, Scott's Pi measure. Below is an excerpt from my document describing my !clasagree SPSS macro, a function calculating many different measures to assess/compare classifications (the complete Word document is found in "Compare partitions" collection on my web-page). The excerpt below displays formulae for the currently available multiclass classification measures:

Accuracy as it is is a Binary or Class-specific classification agreement measure. It is computed not for all classes at once but for each class-of-interest. Having been computed for each class k of the K-class classification, it then can - if you wish - be averaged over the K classes to yield a single value. Below is again a portion of my aforementioned document now introducing some of a lot of binary classification measures:

Now to note: When K=2, that is, there are only two classes, then the average binary Accuracy is the same as the Multiclass Accuracy (aka OSR). In general, when K, the number of existing classes, is the same in both classifications being compared, mean binary Accuracy and Multiclass Accuracy (OSR) are linearly equivalent (correlate with r=1).

Answer to the question

The following simulation experiment refutes the notion that Accuracy and Cohen's Kappa are monotonically related. Because your question is narrowed to K=2 class classification, the simulation creates random classifications with only two classes each. I thus simply generated independently 101 2-value variables. One of the variables I arbitrarily appointed to represent "True" classification and the other 100 to be alternative "Predicted" classifications. Because I did not generate the variables as correlated, the 100 classifications can be seen just random, blind "classifiers". I could have made them better than random by generating positively correlated variables - but that wouldn't drastically change the conclusion. I did not fix marginal counts, so classes were let to be moderately unbalanced.

The results of the comparisons of every of the 100 randomly built classifications (clas1 - clas100) with the "True" classification are below:

Binary Accuracy measure, after averaging its two values, is equal to Multiclass Accuracy (OSR), as was remarked earlier. Values of Kappa are generally low - but that is because our "classifiers" were on the average not better than random classifiers. The scatterplot of Kappa vs Accuracy:

As you see, there is no any monotonic functional relation; hardly even any correlation at all. Conclusion: One should, generally, not expect that "the model with higher accuracy will also have a higher Cohen's Kappa, i.e. better agreement with ground truth".

Addition. I also generated 100 classifications that are better than random classifiers w.r.t. the "True" classification. That is, I generated 2-value variables, as before, now positively correlated (with some random $r$ ranging within 0.25-1.00) with the "True" classification variable. The scatterplot of Kappa vs Accuracy from this simulation:

As seen, only at very high levels of agreement between a Predicted and the True classification the relation between Kappa and Agreement approaches monotonicity.