I am training a simple feed-forward neural network in Keras to perform binary classification. The dataset is imbalanced, with 10% of class 0 and 90% of class 1, so I added a class_weight parameter to get a better model.

I split the dataset into train, validation and test subsets to get honest results, and added a callback that calculates AUC on the validation subset, a callback for early stopping and a callback for saving the best model.

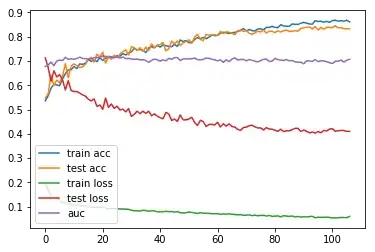

However, I don't think the model is overfitting, because the validation AUC and test AUC are similar, even though the training loss and the validation loss (labelled "test loss" in the plot) are not.

When I train without class_weight, the results are slightly worse, but the train loss and test loss are similar.

I know that when the classes are balanced the baseline loss is about 0.69, and that in my case, without class weights, it would be about 0.33, based on the question "What's considered a good log loss?".
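For reference, these baseline figures can be reproduced as the cross-entropy of a model that always predicts the class prior. This is a minimal sketch using the rounded 10% / 90% split from the question; the function name `baseline_log_loss` is only illustrative.

```python
import math

def baseline_log_loss(p_positive: float) -> float:
    """Log loss of always predicting the prior probability of class 1."""
    p = p_positive
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))

balanced = baseline_log_loss(0.5)    # equals ln(2)
imbalanced = baseline_log_loss(0.9)  # 90% of class 1, as in this dataset

print(f"balanced: {balanced:.3f}, imbalanced: {imbalanced:.3f}")
# prints "balanced: 0.693, imbalanced: 0.325"
```

A loss noticeably below these values suggests the model has learned more than the class frequencies, provided the loss is computed without class weights.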

I am posting my code below.

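    import numpy as np
    from sklearn.model_selection import train_test_split

    # Hold out 20% for testing, then 20% of the remainder for validation
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0, stratify=y)
    X_train, X_val, y_train, y_val = train_test_split(X_train, y_train, test_size=0.2, random_state=0, stratify=y_train)
    # X_train : 10000, X_val: 2500, X_test: 3000

    # Weight each class by the frequency of the other class
    class_weight = {0: np.mean(y),
                    1: 1 - np.mean(y)}
    # {0: 0.89728767, 1: 0.10271233320236206}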

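    # Import paths assumed; adjust if using standalone Keras
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Dropout

    model = Sequential()
    # Dropout on the inputs; this layer also defines the input shape
    model.add(Dropout(0.5, input_shape=(X_train.shape[1],)))
    model.add(Dense(96, activation='relu'))
    model.add(Dropout(rate=0.5))
    model.add(Dense(96, activation='relu'))
    model.add(Dropout(rate=0.5))
    model.add(Dense(1, activation='sigmoid'))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy', tf.keras.metrics.binary_crossentropy])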

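    # callbacks: the AUC, early stopping and checkpoint callbacks described above (definitions not shown)
    history = model.fit(X_train,
                        y_train,
                        validation_data=(X_val, y_val),
                        epochs=120,
                        batch_size=120,
                        verbose=1,
                        callbacks=callbacks,
                        class_weight=class_weight
                        )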

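    from sklearn.metrics import roc_auc_score

    # With a sigmoid output, predict() returns the class-1 probabilities
    # (predict_proba has been removed from recent Keras versions)
    print(f'Val auc: {roc_auc_score(y_val, model.predict(X_val).ravel())}')
    print(f'Test auc: {roc_auc_score(y_test, model.predict(X_test).ravel())}')
    # Val auc: 0.7056949806949808
    # Test auc: 0.7185675253451476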

    import matplotlib.pyplot as plt

    # Note: the curves labelled 'test' are computed on the validation split (val_acc / val_loss)
    plt.plot(history.history['acc'], label='train acc')
    plt.plot(history.history['val_acc'], label='test acc')
    plt.plot(history.history['loss'], label='train loss')
    plt.plot(history.history['val_loss'], label='test loss')
    plt.plot(aucCallback.val_aucs, label='auc')
    plt.legend()
    plt.show()

Is it normal to see such a difference when applying class weights?
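One way to check whether the gap is only a matter of scale is to compute the loss with and without class weights on the same predictions. The sketch below is a hypothetical illustration, not taken from the training run: it assumes the weighted loss multiplies each sample's cross-entropy by its class weight and divides by the number of samples (not by the sum of the weights), and it uses made-up predictions of 0.5 for every sample with rounded weights of 0.9 and 0.1.

```python
import math

def bce(y_true: int, p: float) -> float:
    """Binary cross-entropy for a single sample."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

def mean_loss(labels, probs, class_weight=None):
    """Mean per-sample loss, optionally scaled by a per-class weight.

    Assumption: the weighted sum is divided by the sample count,
    not by the sum of the weights.
    """
    total = 0.0
    for y, p in zip(labels, probs):
        w = 1.0 if class_weight is None else class_weight[y]
        total += w * bce(y, p)
    return total / len(labels)

labels = [0] * 10 + [1] * 90      # hypothetical 10% / 90% class split
probs = [0.5] * len(labels)       # hypothetical predictions, same for every sample
class_weight = {0: 0.9, 1: 0.1}   # rounded version of the weights in the question

unweighted = mean_loss(labels, probs)
weighted = mean_loss(labels, probs, class_weight)

print(f"unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")
```

Under these assumptions the weighted value comes out at 0.18 times the unweighted one for identical predictions, so a weighted training loss and an unweighted validation loss would not be directly comparable.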