Cross Validated!

I have a program that detects events in a large volume of measurement data and writes a timestamp for each event it detects. I now have thousands of event timestamps, and I would like to determine whether they contain any seasonality. I am not well versed in the terminology, but I think seasonality is the right word for what I want to find.

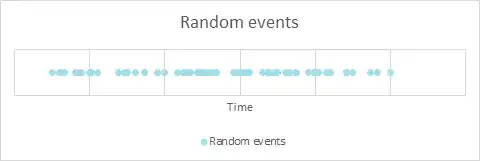

A picture may help my explanation. Suppose I have a set of events on a timeline, as in the figure: the events all appear to be random, but there may be a seasonal component hidden among them.

What I wish to do is detect whether any of the seemingly random events follow a strict repeating interval. An illustration is given below, where some of the data points among the seemingly random events above repeat at a fixed interval.

I am not certain what kind of method to apply. I have looked into power spectral density, the Fourier transform, and ARIMA, but I am still in the idea phase.

Ideally, the method would provide:

- A quantitative measure of how strict or fixed the intervals are, or how certain we can be that we have detected a fixed cycle

- The ability to detect seasonality on different timescales (e.g. events occurring multiple times within the same hour or multiple times during a week with a fixed pattern)
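
To make these two requirements concrete, here is a minimal sketch of the kind of output I am after. It uses a Rayleigh-style phase-folding scan, which is only my guess at a candidate and not something I have validated. The names `rayleigh_scan`, `events` and `periods` are illustrative, the data are synthetic, and the time units are arbitrary. For each candidate period, every timestamp is mapped to a phase on a circle, and the mean resultant length R (between 0 and 1) measures how tightly the phases cluster.

```python
import numpy as np


def rayleigh_scan(timestamps, periods):
    """Mean resultant length R (0..1) of the event phases for each candidate period."""
    t = np.asarray(timestamps, dtype=float)
    p = np.asarray(periods, dtype=float)
    # Phase of every event within each candidate period: shape (n_periods, n_events).
    phases = 2.0 * np.pi * t[None, :] / p[:, None]
    # R is close to 1 when events share a phase and close to 0 when phases are spread out.
    return np.abs(np.exp(1j * phases).mean(axis=1))


# Synthetic data (an assumption for illustration): unstructured events
# plus a subset that repeats every 60 time units.
rng = np.random.default_rng(0)
background = rng.uniform(0.0, 10_000.0, size=300)
periodic = 17.0 + 60.0 * np.arange(150)
events = np.sort(np.concatenate([background, periodic]))

# Candidate periods to scan; a different range covers a different timescale.
periods = np.arange(40.0, 200.0, 0.5)
r = rayleigh_scan(events, periods)
best = periods[np.argmax(r)]

# Rough single-period p-value from the Rayleigh test: under no periodicity,
# n * R**2 is approximately exponentially distributed with mean 1.
p_value = np.exp(-events.size * r.max() ** 2)
print(f"best period: {best}, R = {r.max():.3f}, approx. p = {p_value:.2e}")
```

Two caveats I am aware of: the p-value above is for a single period and would need a multiple-testing correction when many periods are scanned, and integer fractions of a true period (half, a third, and so on) score just as high as the period itself, so the scan range matters.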

What kind of method is applicable here?