In general, an MLP should be capable of handling your task, but when using neural nets there are several things to bear in mind:

- There are no strict rules for choosing the hyperparameters or the overall network structure (depth and width), because they are strongly problem-dependent.
- Therefore, fiddling with the hyperparameters is important (you could use a search algorithm instead of guessing).
- Due to the random initialization of the network's weights, it is worth starting the training multiple times to avoid unfavorable initializations.
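A minimal sketch of the last two points, assuming scikit-learn's `MLPRegressor` and a synthetic noisy sine dataset (the data, the search space and the number of restarts below are illustrative, not taken from the original notebook). `RandomizedSearchCV` samples hyperparameter combinations instead of guessing them by hand, and the best configuration is then retrained from several random seeds, keeping the run with the lowest validation error:

```python
import warnings

import numpy as np
from sklearn.base import clone
from sklearn.exceptions import ConvergenceWarning
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import RandomizedSearchCV, train_test_split
from sklearn.neural_network import MLPRegressor

# Synthetic, illustrative data: a noisy sine curve (not the asker's data)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(2 * X).ravel() + rng.normal(scale=0.1, size=300)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)

# Hypothetical search space; sensible ranges depend on the problem
param_distributions = {
    "hidden_layer_sizes": [(20,), (50,), (20, 20), (50, 50)],
    "alpha": [1e-5, 1e-4, 1e-3],
    "learning_rate_init": [1e-3, 3e-3, 1e-2],
}

base = MLPRegressor(max_iter=500, random_state=0)

with warnings.catch_warnings():
    # Runs that hit max_iter emit a ConvergenceWarning; silenced to keep output short
    warnings.simplefilter("ignore", ConvergenceWarning)

    # 1) Hyperparameter search instead of manual guessing
    search = RandomizedSearchCV(
        base, param_distributions, n_iter=8, cv=3,
        scoring="neg_mean_squared_error", random_state=0,
    )
    search.fit(X_train, y_train)

    # 2) Restarts: retrain the best configuration from several weight initializations
    best_model, best_mse = None, np.inf
    for seed in range(5):
        model = clone(search.best_estimator_).set_params(random_state=seed)
        model.fit(X_train, y_train)
        mse = mean_squared_error(y_val, model.predict(X_val))
        if mse < best_mse:
            best_model, best_mse = model, mse

print("best hyperparameters:", search.best_params_)
print("best validation MSE over restarts:", best_mse)
```

Choosing the best restart on a held-out split, rather than on the training loss, avoids simply picking the run that overfits the most.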

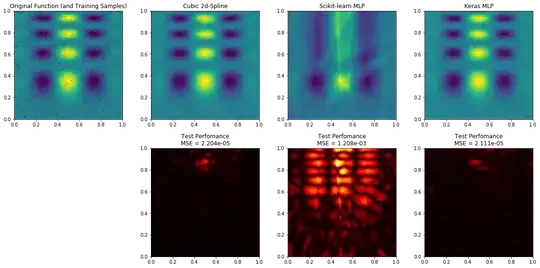

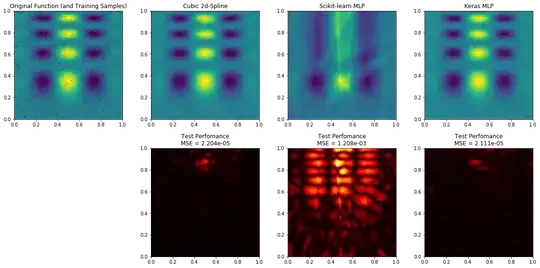

I was able to achieve much better results with only a few manual attempts using the deep learning library Keras:

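The main problem with scikit-learn's MLP implementation is the tol parameter: with the sgd and adam solvers, training stops once the loss has not improved by at least tol for n_iter_no_change consecutive epochs, which prevents them from training further. Similarly, the lbfgs solver stops quite early.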
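A minimal sketch of loosening these stopping criteria, again on synthetic data and with illustrative rather than tuned values. For `sgd`/`adam`, `tol=0.0` combined with a large `n_iter_no_change` keeps the optimizer going through a plateau; for `lbfgs`, scikit-learn passes `tol` to SciPy's L-BFGS-B as the gradient tolerance, while `max_iter` and `max_fun` cap the number of iterations and loss evaluations:

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPRegressor

# Synthetic, illustrative data (not the asker's data)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(500, 1))
y = np.sin(2 * X).ravel() + rng.normal(scale=0.1, size=500)

# Defaults: adam stops once the training loss has not improved by at least
# tol=1e-4 for n_iter_no_change=10 consecutive epochs (or after max_iter=200)
default = MLPRegressor(hidden_layer_sizes=(50, 50), random_state=0)

# Relaxed stopping for adam: any new best loss resets the counter,
# and up to 200 epochs without improvement are tolerated
patient = MLPRegressor(
    hidden_layer_sizes=(50, 50), solver="adam", tol=0.0,
    n_iter_no_change=200, max_iter=2000, random_state=0,
)

# lbfgs: tol is the gradient tolerance; max_iter/max_fun cap iterations and loss evaluations
lbfgs_model = MLPRegressor(
    hidden_layer_sizes=(50, 50), solver="lbfgs", tol=1e-8,
    max_iter=5000, max_fun=50000, random_state=0,
)

with warnings.catch_warnings():
    # Hitting max_iter raises a ConvergenceWarning; silenced to keep the output short
    warnings.simplefilter("ignore", ConvergenceWarning)
    for name, model in [("default adam", default), ("patient adam", patient), ("lbfgs", lbfgs_model)]:
        model.fit(X, y)
        print(f"{name}: {model.n_iter_} iterations, training R^2 = {model.score(X, y):.3f}")
```

The exact iteration counts and scores depend on the data and the scikit-learn version; the point is only that the defaults can end training long before the loss has settled.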

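As one can see from the Keras loss plot, there is a plateau before the 'main learning' begins; a tolerance-based stopping criterion like scikit-learn's can easily mistake exactly this kind of plateau for convergence.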

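Furthermore, you should be aware of the difference between interpolation and regression, because they address different problems: an interpolant passes exactly through the given data points, whereas a regression model only approximates them. Thus SciPy's griddata, which interpolates, is not a good choice for noisy data (see "How is interpolation related to the concept of regression?").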
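A small sketch of the difference, assuming 2-D scattered data with synthetic Gaussian noise (the surface, noise level and model below are illustrative). `griddata` with `method="linear"` reproduces every noisy sample exactly, whereas a regression model smooths over the noise; a polynomial ridge regression is used here only as a simple stand-in for any regressor, the MLP included:

```python
import numpy as np
from scipy.interpolate import griddata
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures


def true_surface(p):
    # Illustrative noise-free ground truth, not the asker's function
    return np.sin(np.pi * p[:, 0]) * np.cos(np.pi * p[:, 1])


rng = np.random.default_rng(0)
points = rng.uniform(-1, 1, size=(300, 2))
values = true_surface(points) + rng.normal(scale=0.2, size=len(points))

# Interpolation: passes through every (noisy) sample
interp_at_samples = griddata(points, values, points, method="linear")

# Regression: fits a smooth model that does not have to hit the samples
reg = make_pipeline(PolynomialFeatures(degree=7), Ridge(alpha=1e-3))
reg.fit(points, values)
reg_at_samples = reg.predict(points)

# Compare both against the noise-free truth on new points inside the data range
g = np.linspace(-0.8, 0.8, 41)
grid = np.column_stack([a.ravel() for a in np.meshgrid(g, g)])
truth = true_surface(grid)
interp_grid = griddata(points, values, grid, method="linear")
inside = np.isfinite(interp_grid)  # linear griddata returns NaN outside the convex hull


def rmse(pred):
    return np.sqrt(np.mean((pred[inside] - truth[inside]) ** 2))


print("max |interpolant - noisy sample|:", np.nanmax(np.abs(interp_at_samples - values)))
print("RMSE vs truth, interpolation:", rmse(interp_grid))
print("RMSE vs truth, regression:   ", rmse(reg.predict(grid)))
```

The interpolant's error at the samples is essentially zero, which means it has fitted the noise as well as the signal.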

My modified version of the notebook.