I can not put my company data online, but I can provide a reproducible example here.

We're modelling Insurance's frequency using Poisson distribution with exposure as offset.

Here in this example, we want to model the number of claim Claims ($y_i$) with exposure Holders ($e_i$)

In the traditional GLM model, we can dirrectly model $y_i$ and put $e_i$ in the offset term. This option is not available in xgboost. So the alternative is to model the rate $\frac{y_i}{e_i}$, and put $e_i$ as a the weight term (reference)

When I do that with a lot of iteractions, the results are coherent ($\sum y_i = \sum \hat{y_i}$). But it is not the case when nrounds = 5. I think that the equation $\sum y_i = \sum \hat{y_i}$ must be satisfied after the very first iteration.

The following code is an extreme example for the sake of reproducibility. In my real case, I performed a CV on the training set (optimizing MAE), I obtained nrounds = 1200, training MAE = testing MAE. Then I re-run a xgboost on the whole data set with 1200 iteration, I see that $\sum y_i \ne \sum \hat{y_i}$ by a large distance, this doesn't make sense, or am I missing something?

So my questions are:

- Am I correctly specify parameters for Poisson regression with offset in

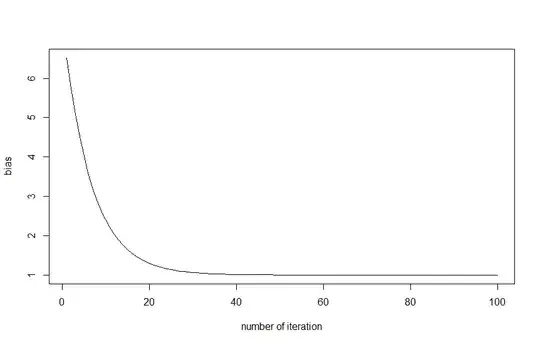

xgboost? - Why such a high bias at the first iterations?

- Why after tuning

nroundsusing xgb.cv, I still have high bias?

Here is the graphics plotting the ratio $\frac{\sum \hat{y_i}}{\sum y_i}$ by nrounds

Code edited after the comment of @JonnyLomond

library(MASS)

library(caret)

library(xgboost)

library(dplyr)

#-------- load data --------#

data(Insurance)

#-------- data preparation --------#

#small adjustments

Insurance$rate = with(Insurance, Claims/Holders)

temp<-dplyr::select(Insurance,District, Group, Age, rate)

temp2= dummyVars(rate ~ ., data = temp, fullRank = TRUE) %>% predict(temp)

#create xgb matrix

xgbMatrix <- xgb.DMatrix(as.matrix(temp2),

label = Insurance$Claims)

setinfo(xgbMatrix, "base_margin",log(Insurance$Holders))

#-------------------------------------------#

# First model with small nround

#-------------------------------------------#

bst.1 = xgboost(data = xgbMatrix,

objective ='count:poisson',

nrounds = 5)

pred.1 = predict(bst.1, xgbMatrix)

sum(Insurance$Claims) #3151

sum(pred.1) #12650.8 fails

#-------------------------------------------#

# Second model with more iteractions

#-------------------------------------------#

bst.2 = xgboost(data = xgbMatrix,

objective = 'count:poisson',

nrounds = 100)

pred.2 = predict(bst.2, xgbMatrix)

sum(Insurance$Claims) #3151

sum(pred.2) #same

nrounds = 1to be equivalent to fitting a tree on the data, so sum of observations should be equal to sum of predictions. Am I missing something? – Metariat Jan 25 '18 at 11:10nroundsequal to ~ 1200 (training and testing mae inside the training set are almost equal). But when I fitxgboostwith 1200 iterations and check out the sum of the predictions, it is not equal to the sum of observations. And the MAE in the real test set is actually very high compare to the MAE in the CV test set. – Metariat Jan 26 '18 at 09:04nrounds = 1. Might want to reword the question and title with that in mind, since convergence has little to do with it. – jbowman Jan 26 '18 at 17:04