When is first differencing appropriate for removing a trend from a time series?

If you are dealing with cumulative sums of a stationary series, differencing is a natural transformation to perform. Cumulative sums are nonstationary, their variance grows without bound over time, and so they generally misbehave in linear regression, correlation analysis and similar methods (for example, producing spurious correlations).
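
A small simulation can illustrate both points, under assumed settings (i.i.d. N(0, 1) shocks, a fixed seed, and plain Python rather than a statistics package). Differencing the cumulative sum gives back the original stationary shocks, and across many simulated paths the variance of the cumulative sum at time t grows roughly like t.

```python
import random
from itertools import accumulate
from statistics import pvariance


def random_walk(n, rng):
    """Return (shocks, walk): i.i.d. N(0, 1) shocks and their cumulative sum."""
    shocks = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return shocks, list(accumulate(shocks))


def first_difference(y):
    """Return [y[1] - y[0], y[2] - y[1], ...]."""
    return [b - a for a, b in zip(y, y[1:])]


rng = random.Random(42)  # fixed seed so the run is reproducible

# Differencing the cumulative sum gives back the stationary shocks (up to rounding).
shocks, walk = random_walk(500, rng)
recovered = first_difference(walk)
max_err = max(abs(r - s) for r, s in zip(recovered, shocks[1:]))

# Across many simulated paths, Var(y_t) grows roughly like t (shock variance is 1).
paths = [random_walk(400, rng)[1] for _ in range(2000)]
var_t100 = pvariance([p[99] for p in paths])
var_t400 = pvariance([p[399] for p in paths])

print(f"max |diff(walk) - shocks| = {max_err:.1e}")
print(f"Var(y_100) ~ {var_t100:.0f}, Var(y_400) ~ {var_t400:.0f}")
```

The variance at t = 400 should come out at roughly four times the variance at t = 100, which is the "grows without bound" behaviour in numbers.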

One exception, where differencing removes valuable information, is cointegrated time series. When several integrated series share one or more common stochastic trends, differencing each series separately removes this commonality, and linear models that ignore the cointegration suffer from omitted-variable bias because the error-correction term is left out.
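
A hypothetical simulation can show what differencing throws away. It assumes x is a random walk and y is tied to x one-for-one in the long run, adjusting only through an error-correction term with an assumed speed alpha = -0.3. By construction, a regression of dy on dx alone finds essentially no relationship, while the lagged gap y[t-1] - x[t-1] explains dy with a slope close to alpha. This is an illustrative setup in plain Python, not a full error-correction model estimation.

```python
import random
from statistics import fmean


def ols_slope(x, y):
    """Slope of an OLS regression of y on x (with an intercept)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


rng = random.Random(0)
n = 5000
alpha = -0.3  # hypothetical speed of adjustment toward the long-run relation y = x

# x is a random walk; y follows it only through the error-correction term.
x, y = [0.0], [0.0]
for _ in range(n):
    gap = y[-1] - x[-1]  # deviation from the long-run relation
    x.append(x[-1] + rng.gauss(0.0, 1.0))
    y.append(y[-1] + alpha * gap + rng.gauss(0.0, 1.0))

dx = [b - a for a, b in zip(x, x[1:])]
dy = [b - a for a, b in zip(y, y[1:])]
gap_lag = [yi - xi for xi, yi in zip(x[:-1], y[:-1])]  # y[t-1] - x[t-1]

diff_slope = ols_slope(dx, dy)     # model in differences only
ec_slope = ols_slope(gap_lag, dy)  # uses the error-correction term

print(f"dy on dx:         slope = {diff_slope:.3f}")
print(f"dy on lagged gap: slope = {ec_slope:.3f}")
```

Even though x and y move together in levels, the differences-only regression sees almost nothing; the information lives in the lagged gap.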

Meanwhile, taking the first difference of a stationary series (or of a stationary series plus a deterministic time trend) is a rather redundant transformation, often called over-differencing. It introduces a non-invertible moving-average component (a unit root in the MA polynomial) into the transformed series, which increases the variance in linear models compared with linear models for the original series in levels (adjusted for a deterministic time trend, if any).
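
For the simplest case, assume x[t] = e[t] is white noise with variance sigma^2. Then the difference is e[t] - e[t-1], an MA(1) process with coefficient -1, so its variance is 2 sigma^2 and its lag-1 autocorrelation is -0.5. The sketch below checks this numerically on simulated data (assumed N(0, 1) noise and a fixed seed).

```python
import random
from statistics import fmean, pvariance


def lag1_autocorr(z):
    """Sample lag-1 autocorrelation of a sequence."""
    m = fmean(z)
    num = sum((a - m) * (b - m) for a, b in zip(z, z[1:]))
    den = sum((a - m) ** 2 for a in z)
    return num / den


rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(20000)]  # already stationary
dx = [b - a for a, b in zip(x, x[1:])]           # over-differenced: e[t] - e[t-1]

var_ratio = pvariance(dx) / pvariance(x)
acf1 = lag1_autocorr(dx)
print(f"Var(dx)/Var(x) = {var_ratio:.2f} (theory: 2)")
print(f"lag-1 autocorrelation of dx = {acf1:.2f} (theory: -0.5)")
```

The doubled variance and the artificial negative autocorrelation are both side effects of differencing a series that did not need it.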

Would it be equally appropriate for me to take first differences of the original time series (as given above)?

The paragraph above should answer this.

Now, since the two plots appear to be autocorrelated, first differencing does not appear suitable (at least I do not think it is <...>).

Autocorrelation and first differencing are only loosely related. The exception is the presence of a unit root: there the autocorrelation is extremely high and differencing is a natural transformation. In general, though, you may have something like an ARIMA(p,1,q) model, which requires differencing but whose differenced series still exhibits autocorrelation due to the AR and MA terms.
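
As an assumed special case, take p = 1 and q = 0 with a hypothetical AR coefficient of 0.6. The sketch below builds an ARIMA(1,1,0) series by integrating a stationary AR(1) once: the levels have lag-1 autocorrelation close to 1, and after differencing the lag-1 autocorrelation is still about 0.6, coming from the AR term.

```python
import random
from itertools import accumulate
from statistics import fmean


def lag1_autocorr(z):
    """Sample lag-1 autocorrelation of a sequence."""
    m = fmean(z)
    num = sum((a - m) * (b - m) for a, b in zip(z, z[1:]))
    den = sum((a - m) ** 2 for a in z)
    return num / den


rng = random.Random(2)
phi, n = 0.6, 20000  # hypothetical AR(1) coefficient and sample size

# w is a stationary AR(1); integrating it once gives an ARIMA(1,1,0) series y.
w = [0.0]
for _ in range(n):
    w.append(phi * w[-1] + rng.gauss(0.0, 1.0))
y = list(accumulate(w))
dy = [b - a for a, b in zip(y, y[1:])]  # equals w[1:] up to rounding

acf_levels = lag1_autocorr(y)
acf_diff = lag1_autocorr(dy)
print(f"lag-1 autocorrelation, levels:      {acf_levels:.3f}")
print(f"lag-1 autocorrelation, differences: {acf_diff:.3f} (AR coefficient {phi})")
```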

Edit (responding to a comment)

But what if I don't know whether my original time series are stationary?

You can test for various forms of nonstationarity. For example, you can test for a unit root (one form of nonstationarity) with the augmented Dickey-Fuller test. You can also test for structural change, etc. Sometimes you will also notice visually that the series behaves differently in different periods, which is an informal indication of nonstationarity.
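
To show the mechanics, here is a minimal sketch of the plain (non-augmented) Dickey-Fuller regression with a constant: regress dy[t] on y[t-1] and compare the t-statistic on y[t-1] with the asymptotic 5% critical value of about -2.86. It assumes no lagged differences are needed and uses simulated data. In practice, `statsmodels.tsa.stattools.adfuller` implements the augmented version, including lag selection and p-values.

```python
import math
import random
from statistics import fmean


def dickey_fuller_t(y):
    """t-statistic on gamma in dy[t] = c + gamma * y[t-1] + e[t] (no augmentation lags)."""
    ylag = y[:-1]
    dy = [b - a for a, b in zip(y, y[1:])]
    n = len(dy)
    mx, my = fmean(ylag), fmean(dy)
    sxx = sum((a - mx) ** 2 for a in ylag)
    gamma = sum((a - mx) * (b - my) for a, b in zip(ylag, dy)) / sxx
    c = my - gamma * mx
    rss = sum((b - c - gamma * a) ** 2 for a, b in zip(ylag, dy))
    se = math.sqrt(rss / (n - 2) / sxx)  # OLS standard error of gamma
    return gamma / se


CRIT_5PCT = -2.86  # asymptotic 5% Dickey-Fuller critical value, constant, no trend

rng = random.Random(3)
walk, ar = [0.0], [0.0]
for _ in range(500):
    walk.append(walk[-1] + rng.gauss(0.0, 1.0))  # unit root
    ar.append(0.5 * ar[-1] + rng.gauss(0.0, 1.0))  # stationary AR(1)

walk_t = dickey_fuller_t(walk)
ar_t = dickey_fuller_t(ar)
for name, t in [("random walk", walk_t), ("AR(1), phi = 0.5", ar_t)]:
    verdict = "reject unit root" if t < CRIT_5PCT else "cannot reject unit root"
    print(f"{name:17s} DF t = {t:6.2f} -> {verdict}")
```

Note that the t-statistic does not follow the usual t distribution under the unit-root null, which is why the Dickey-Fuller critical value is used instead of -1.96.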

Is there an easy way to check whether two time series are cointegrated? The tests I've seen so far seem to require checking for statistical significance etc., so I was hoping for a slightly easier and more efficient way.

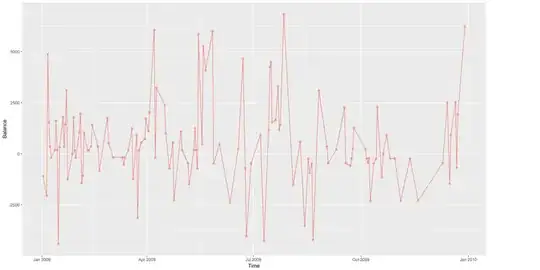

Perhaps the best you can do without formal testing is to run a regression of one series on the other (or others) and visually inspect the residuals. If the residuals look stationary, that suggests the series are cointegrated. That said, formal testing is not that difficult either, now that statistical packages provide functions for it.
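
As a rough numeric stand-in for eyeballing the residuals (not a formal test), the sketch below regresses y on x and reports the lag-1 autocorrelation of the residuals for two hypothetical pairs: one where y = 2x + noise shares x's stochastic trend, and one where y is an unrelated random walk. Stationary residuals show low autocorrelation; residuals from a spurious regression stay close to 1. A formal residual-based (Engle-Granger) test needs its own critical values, and `statsmodels.tsa.stattools.coint` provides one.

```python
import random
from statistics import fmean


def ols_fit(x, y):
    """Intercept and slope of an OLS regression of y on x."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return my - slope * mx, slope


def lag1_autocorr(z):
    """Sample lag-1 autocorrelation of a sequence."""
    m = fmean(z)
    num = sum((a - m) * (b - m) for a, b in zip(z, z[1:]))
    return num / sum((a - m) ** 2 for a in z)


rng = random.Random(4)
n = 1000
x, z = [0.0], [0.0]
for _ in range(n - 1):
    x.append(x[-1] + rng.gauss(0.0, 1.0))  # common stochastic trend
    z.append(z[-1] + rng.gauss(0.0, 1.0))  # unrelated random walk
y_coint = [2.0 * a + rng.gauss(0.0, 1.0) for a in x]  # shares x's trend

acf_resid = {}
for name, y in [("cointegrated", y_coint), ("independent", z)]:
    a, b = ols_fit(x, y)
    resid = [yi - a - b * xi for xi, yi in zip(x, y)]
    acf_resid[name] = lag1_autocorr(resid)
    print(f"{name:12s} slope = {b:6.2f}, residual lag-1 autocorrelation = {acf_resid[name]:.2f}")
```

Plotting `resid` for each pair gives the visual version of the same check.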