I'm still a bit confused about this question: is the decision boundary of a logistic classifier linear? I followed Andrew Ng's machine learning course on Coursera, and he mentioned the following:

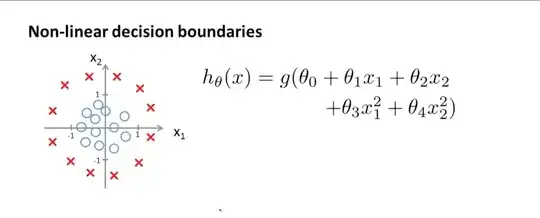

It seems to me there is no single answer: whether the decision boundary is linear or non-linear depends on the hypothesis function $H_\theta(X)$, where $X$ is the input and $\theta$ are the parameters of our problem.
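To make my understanding concrete, here is a minimal Python sketch. It is not from the course: the parameter values and the helper names `linear_features` and `quadratic_features` are my own assumptions for illustration. With $H_\theta(X) = g(\theta^T X)$ and $g$ the sigmoid, the predicted class flips where $\theta^T X = 0$, so the shape of the boundary in the raw inputs depends on which features go into $X$.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(theta, features):
    """Return 1 when sigmoid(theta . features) >= 0.5, i.e. when theta . features >= 0."""
    z = sum(t * f for t, f in zip(theta, features))
    return 1 if sigmoid(z) >= 0.5 else 0

def linear_features(x1, x2):
    # Hypothetical feature map: bias term plus the raw inputs.
    return [1.0, x1, x2]

def quadratic_features(x1, x2):
    # Hypothetical feature map: bias, raw inputs, and their squares.
    return [1.0, x1, x2, x1 ** 2, x2 ** 2]

# Assumed parameter values, chosen only to illustrate the two cases.
theta_linear = [-3.0, 1.0, 1.0]            # boundary: x1 + x2 = 3 (a straight line)
theta_circle = [-1.0, 0.0, 0.0, 1.0, 1.0]  # boundary: x1^2 + x2^2 = 1 (a circle)

print(predict(theta_linear, linear_features(1.0, 1.0)))     # z = -1, prints 0
print(predict(theta_linear, linear_features(2.0, 2.0)))     # z = 1, prints 1
print(predict(theta_circle, quadratic_features(0.0, 0.0)))  # z = -1, prints 0
print(predict(theta_circle, quadratic_features(2.0, 0.0)))  # z = 3, prints 1
```

If this sketch is right, the boundary is always linear in the features that make up $X$, but it can be non-linear (here, a circle) in the raw inputs $x_1, x_2$.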

Could you please help me clear up this confusion?