I am setting up a Cox model to model the probability that new customers leave the bank.

I had a nice setup, with plenty of significant explanatory variables, until the result for one categorical variable troubled me. I have called this categorical variable Depth, with categories Only Bank, Bank+Pension and Bank+Pension+Insurance. If the new customer only opened an account, he falls under the first category. If he also brought his pension along, he falls under the second, and if he additionally bought some insurance at the bank, he falls under the third.

The common belief in the bank is that the "deeper" the customers are, the more likely they are to stay. This is also intuitive: a customer who has both insurance and pension on top of the normal bank account would not bother with the hassle of changing company.

Looking at all the customers, here is an overview of how the customers in each Depth category "die" (Failed means that they left, and Censored means that they had not left after a year):

We see from the data that slightly more Only Bank customers are "censored" (meaning they are staying) than Bank+Pension customers. This is not what I expected; I expected more Bank+Pension customers to be staying. Anyway, I suspect that this difference is not significant, and that there is actually no difference in the hazard rate between the first two categories.

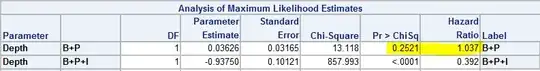
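One rough way to check whether the difference in the censored shares is significant is a two-proportion z-test. This is only a sketch: it ignores when customers left (a log-rank test or the Cox model itself uses that information), the function name `two_proportion_z` is my own, and the counts in the usage line are made-up placeholders, not the figures from the overview above.

```python
import math


def two_proportion_z(stay_a, n_a, stay_b, n_b):
    """Two-sided z-test for equal staying (censored) proportions in two groups."""
    p_a = stay_a / n_a
    p_b = stay_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (stay_a + stay_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical placeholder counts: (censored, total) for Only Bank, then Bank+Pension.
z, p = two_proportion_z(8000, 10000, 390, 500)
print("z =", round(z, 2), "p =", round(p, 3))
```

With very unequal group sizes, the standard error is dominated by the smaller group, so a visible gap in percentages can still be well within noise.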

My question/problem is that if I do a Cox regression with Depth as the only explanatory variable, there is no significant difference between the first two categories (p-value 25%).
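Written out, and assuming Only Bank is the reference category (the coefficient names $\beta_1$ and $\beta_2$ are just labels for this sketch, not output from my software), the Depth-only model is

$$h(t \mid \text{Depth}) = h_0(t)\,\exp\bigl(\beta_1\,\mathbf{1}[\text{Bank+Pension}] + \beta_2\,\mathbf{1}[\text{Bank+Pension+Insurance}]\bigr)$$

so the 25% p-value belongs to the test of $\beta_1 = 0$, that is, a hazard ratio $e^{\beta_1} = 1$ between Bank+Pension and Only Bank.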

But when I add new explanatory variables (even just one), the difference suddenly becomes significant (really small p-values), and my regression results tell me that Bank+Pension customers have a higher hazard rate than Only Bank customers. Here is an example, where I added a binary variable X (not correlated with Depth):

Now my result tells me that Bank+Pension customers have a higher hazard rate! This is bad!

I have no idea what is going on here. What is going wrong in the estimation process? Any explanation is received with great gratitude. I suspect it has something to do with the great difference in the number of observations between the categories, but I am a newbie here...

EDIT: From link #4 that @Scortchi provided below, I copy-paste the following:

> Misspecified models: The underlying theory for t-statistics/p-values requires that you estimate a correctly specified model. Now, if you only regress on one predictor, chances are quite high that that univariate model suffers from omitted variable bias. Hence, all bets are off as to how p-values behave. Basically, you must be careful to trust them when your model is not correct.

I see that I have obviously sinned by testing my predictor of annoyance in a regression on that predictor alone.
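A minimal simulated sketch of the omitted-variable point, not based on the bank's data. It assumes two independent binary predictors (a stand-in for Depth and a strong predictor X), exponential event times, and censoring at one year; all parameter values and the function names `simulate`, `cox_fit` and `solve` are hypothetical choices for illustration. The fit is a hand-rolled Newton-Raphson on the Cox partial likelihood (Breslow-style risk sets), not a library routine.

```python
import math
import random


def solve(A, b):
    """Solve the small linear system A x = b by Gauss-Jordan elimination."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def cox_fit(times, events, X, iters=25):
    """Maximise the Cox partial likelihood with Newton-Raphson steps."""
    n, p = len(times), len(X[0])
    order = sorted(range(n), key=lambda i: -times[i])  # latest times first
    beta = [0.0] * p
    for _ in range(iters):
        s0, s1 = 0.0, [0.0] * p
        s2 = [[0.0] * p for _ in range(p)]
        grad = [0.0] * p
        info = [[0.0] * p for _ in range(p)]
        k = 0
        while k < n:
            t, tied_events = times[order[k]], []
            # Add everyone with this exact time to the risk set first.
            while k < n and times[order[k]] == t:
                i = order[k]
                k += 1
                w = math.exp(sum(bj * xj for bj, xj in zip(beta, X[i])))
                s0 += w
                for a in range(p):
                    s1[a] += w * X[i][a]
                    for c in range(p):
                        s2[a][c] += w * X[i][a] * X[i][c]
                if events[i]:
                    tied_events.append(i)
            # Score and information contributions of the events at time t.
            for i in tied_events:
                for a in range(p):
                    grad[a] += X[i][a] - s1[a] / s0
                    for c in range(p):
                        info[a][c] += s2[a][c] / s0 - s1[a] * s1[c] / s0 ** 2
        step = solve(info, grad)
        beta = [bj + sj for bj, sj in zip(beta, step)]
        if max(abs(sj) for sj in step) < 1e-8:
            break
    return beta


def simulate(n=4000, b_depth=0.5, b_x=2.0, base=0.3, seed=1):
    """Hypothetical data: independent binary depth and x, censoring at t = 1."""
    rng = random.Random(seed)
    times, events, rows = [], [], []
    for _ in range(n):
        depth = 1.0 if rng.random() < 0.5 else 0.0
        x = 1.0 if rng.random() < 0.5 else 0.0
        t = rng.expovariate(base * math.exp(b_depth * depth + b_x * x))
        events.append(t < 1.0)
        times.append(min(t, 1.0))
        rows.append([depth, x])
    return times, events, rows


times, events, rows = simulate()
uni = cox_fit(times, events, [[r[0]] for r in rows])  # depth only
full = cox_fit(times, events, rows)                   # depth and x
print("depth coefficient, depth only:", round(uni[0], 3))
print("depth coefficient, with x:    ", round(full[0], 3))
```

In runs of this kind the depth-only coefficient tends to come out closer to zero than the one from the model that includes x, even though x is independent of depth. Whether that is what happens in my data cannot be concluded from a simulation alone.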