Let $Y_1, Y_2, \dots, Y_n$ be iid random variables and $B_1, B_2, \dots, B_n$ be Borel sets. It follows that

$$P\left(\bigcap_{i=1}^{n} \{Y_i \in B_i\}\right) = \prod_{i=1}^{n} P(Y_i \in B_i)$$

... I think?

If so, does the converse hold? My Stochastic Calculus professor says it does (or maybe I misinterpreted him somehow?), but I was under the impression that independence of the $n$ random variables is equivalent to saying that for any indices $1 \le i_1 < i_2 < \dots < i_k \le n$ and any Borel sets $B_{i_1}, \dots, B_{i_k}$, $P\left(\bigcap_{j=1}^{k} \{Y_{i_j} \in B_{i_j}\}\right) = \prod_{j=1}^{k} P(Y_{i_j} \in B_{i_j})$.

So, if the RVs are independent, then we can choose $i_j = j$ and $k = n$ to get $P\left(\bigcap_{i=1}^{n} \{Y_i \in B_i\}\right) = \prod_{i=1}^{n} P(Y_i \in B_i)$. But given only $P\left(\bigcap_{i=1}^{n} \{Y_i \in B_i\}\right) = \prod_{i=1}^{n} P(Y_i \in B_i)$, I don't know how to conclude that for any indices $i_1, i_2, \dots, i_k$, $P\left(\bigcap_{j=1}^{k} \{Y_{i_j} \in B_{i_j}\}\right) = \prod_{j=1}^{k} P(Y_{i_j} \in B_{i_j})$, if that's even the right definition.
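To see concretely why quantifying over *all* Borel sets matters, here is a small Python sketch under the assumption that each $Y_i$ takes finitely many values, so that every subset of the support can stand in for a Borel set. The helper names (`subsets`, `prob`, `factorizes`, `factorizes_for_all_sets`) are hypothetical, made up for this illustration. Because the check runs over all tuples of sets, it includes the choice $B_j$ = the whole support, for which $\{Y_j \in B_j\} = \Omega$ and $P(Y_j \in B_j) = 1$; that choice drops $Y_j$ from both sides, which is how a formula for a sub-collection of indices falls out of the $n$-fold one.

```python
from fractions import Fraction
from itertools import chain, combinations, product


def subsets(values):
    """All subsets of a finite support (a stand-in for Borel sets in this discrete sketch)."""
    vals = sorted(values)
    return [set(c) for c in chain.from_iterable(combinations(vals, r) for r in range(len(vals) + 1))]


def prob(joint, event):
    """P(event) for a joint pmf given as {outcome tuple: probability}."""
    return sum((p for outcome, p in joint.items() if event(outcome)), Fraction(0))


def factorizes(joint, sets):
    """Check P(Y_1 in B_1, ..., Y_n in B_n) == prod_i P(Y_i in B_i) for one tuple of sets."""
    lhs = prob(joint, lambda y: all(y[i] in b for i, b in enumerate(sets)))
    rhs = Fraction(1)
    for i, b in enumerate(sets):
        rhs *= prob(joint, lambda y, i=i, b=b: y[i] in b)
    return lhs == rhs


def factorizes_for_all_sets(joint, supports):
    """Check the product formula for every tuple (B_1, ..., B_n) of subsets of the supports."""
    return all(factorizes(joint, sets) for sets in product(*(subsets(s) for s in supports)))


# Three iid fair coin flips: the joint pmf is uniform on {0, 1}^3.
supports = [{0, 1}] * 3
iid = {y: Fraction(1, 8) for y in product([0, 1], repeat=3)}

# Y_3 = Y_1 XOR Y_2: every pair is independent, but the triple is not.
xor = {(a, b, a ^ b): Fraction(1, 4) for a, b in product([0, 1], repeat=2)}

print(factorizes_for_all_sets(iid, supports))  # True
print(factorizes_for_all_sets(xor, supports))  # False

# Taking B_3 = the whole support makes {Y_3 in B_3} the sure event Omega,
# so the three-set formula reduces to the two-set formula for (Y_1, Y_2).
print(factorizes(xor, [{1}, {1}, {0, 1}]))  # True
```

The brute-force loop over all subsets is only feasible for tiny supports; it is meant to make the quantifier "for all $B_1, \dots, B_n$" visible, not to be efficient.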

p. 17 here seems to suggest otherwise. I'm not sure.

Help please?

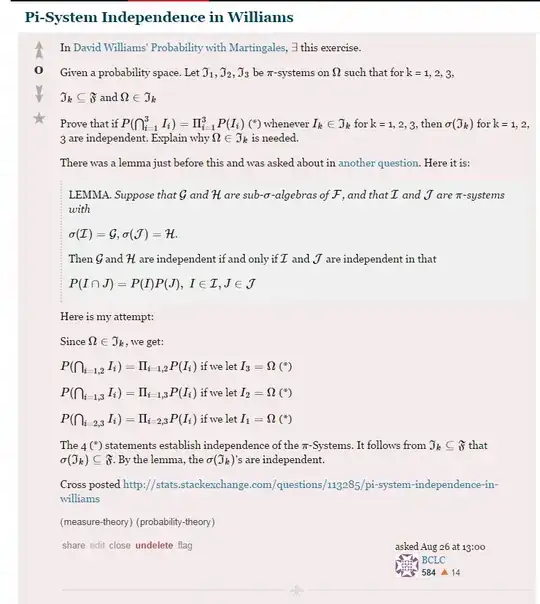

Also this:

or

So, this answer uses the $\Omega$ part to establish pairwise independence and ultimately conclude independence; without that assumption, we cannot conclude independence. Is that right? Why does that not contradict the definition of independence, $P\left(\bigcap_{i=1}^{n} \{Y_i \in B_i\}\right) = \prod_{i=1}^{n} P(Y_i \in B_i)$?
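For contrast, here is a sketch with fixed *events* rather than random variables, using a made-up uniform 8-point sample space (the sets `A`, `B`, `C` and the helper `P` are hypothetical, chosen only for illustration). The single three-fold product formula holds, yet $B$ and $C$ are not independent. Viewing $A, B, C$ through their indicator variables $1_A, 1_B, 1_C$, the failing case corresponds to taking the first Borel set to be $\mathbb{R}$, i.e. replacing $A$ by $\Omega$.

```python
from fractions import Fraction

# Hypothetical example: uniform probability on an 8-point sample space.
omega = set(range(1, 9))


def P(event):
    """Uniform probability of a subset of omega."""
    return Fraction(len(event & omega), len(omega))


A = {1, 2, 3, 4}
B = {1, 2, 5, 6}
C = {1, 3, 7, 8}

# The three-fold product formula holds for these particular events (1/8 == 1/8)...
print(P(A & B & C) == P(A) * P(B) * P(C))  # True

# ...but B and C are not independent (1/8 != 1/4), so the events are not mutually independent.
print(P(B & C) == P(B) * P(C))  # False

# Replacing A by omega (the analogue of choosing B_1 = R for the indicator 1_A)
# is exactly where the formula breaks, so a "for all Borel sets" condition excludes this example.
print(P(omega & B & C) == P(omega) * P(B) * P(C))  # False
```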