This question is a follow-up to another question, where the accepted answer states that each Update Records action in a process consumes the following limits:

As you can therefore conclude, it is 1 query and 1 DML statement per chunked transaction.

I was running some benchmark tests with process builder in a brand new dev org that has no other automations running. The process itself is super simple, firing on every account insert/update and updating 1 field:

I was then inserting a list of 200 accounts:

    List<Account> accounts = new List<Account>();
    for (Integer i = 0; i < 200; i++) {
        Account a = new Account(Name = 'Account ' + i);
        accounts.add(a);
    }
    insert accounts;
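For anyone who wants to reproduce the numbers without digging through the debug log, here is a minimal sketch that snapshots the limit counters around the same insert. It assumes it is run as anonymous Apex and that the process fires synchronously in the same transaction as the insert; the variable names are only illustrative.

```apex
// Snapshot the governor limit counters before the insert.
Integer dmlBefore = Limits.getDmlStatements();
Integer rowsBefore = Limits.getDmlRows();
Integer queriesBefore = Limits.getQueries();
Integer queryRowsBefore = Limits.getQueryRows();

// Same test data as above: 200 accounts in a single insert.
List<Account> accounts = new List<Account>();
for (Integer i = 0; i < 200; i++) {
    accounts.add(new Account(Name = 'Account ' + i));
}
insert accounts;

// The deltas include the insert itself plus anything the process consumed
// (assumption: the process runs in this same transaction).
System.debug('DML statements: ' + (Limits.getDmlStatements() - dmlBefore));
System.debug('DML rows: ' + (Limits.getDmlRows() - rowsBefore));
System.debug('SOQL queries: ' + (Limits.getQueries() - queriesBefore));
System.debug('SOQL rows: ' + (Limits.getQueryRows() - queryRowsBefore));
```

Changing the loop bound to 199 or 201 makes it easy to compare the counts on either side of the 200-record boundary.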

The logs show the following:

1 DML statement and 200 DML rows are used by the initial insert; the rest is caused by the process. 1 SOQL query and 200 SOQL rows are in line with what's expected. However, that leaves 2 DML statements and 200 DML rows consumed by the process.

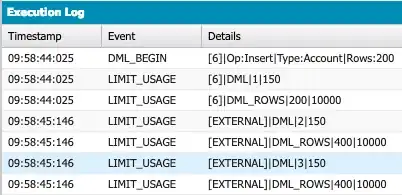

The execution log is showing the following:

At the very end, a DML statement is used with no additional rows consumed.

I then ran the same test, but inserting 199 accounts. Now the process consumes 1 DML statement and 199 DML rows. Testing with 201 accounts, I get 3 DML statements and 201 DML rows. I get similar results around the 400, 600, ... thresholds.

To me, it looks like when arriving at the 200 records mark, Salesforce is already enqueuing the next chunk of records, which consumes a DML, without checking if there really are more records to process? Then if there are any, that's one more DML. Who can shed some light on this?
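To make that guess concrete, here is a small sketch of the arithmetic it implies: one DML statement per chunk that actually contains records, plus one extra for every chunk that reaches the 200-record mark. This only models my hypothesis; it is not documented platform behavior, and the chunk size of 200 and the variable names are assumptions taken from the tests above.

```apex
// Hypothetical model of the observed pattern, not documented platform behavior.
Integer chunkSize = 200; // assumed chunk size, based on the tests above

for (Integer n : new List<Integer>{199, 200, 201}) {
    // Chunks that contain at least one record (ceiling division).
    Integer chunks = (n + chunkSize - 1) / chunkSize;
    // Chunks that are completely full and would trigger the "extra" DML.
    Integer fullChunks = n / chunkSize;
    Integer processDml = chunks + fullChunks;
    System.debug(n + ' records: ' + processDml + ' DML statements from the process');
}
```

For 199, 200 and 201 records this yields 1, 2 and 3 DML statements, which matches the three measurements above. Whether it also holds exactly at 400 and 600 is something I have not written down precisely.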

`insert accounts;` line of code, and then when it goes into the Process Builder, another 200 DML actions happen because technically 200 records are being updated at the PB level. Anytime a database record is inserted, updated, or deleted, a DML Row is counted – Brian Miller Feb 26 '19 at 14:18