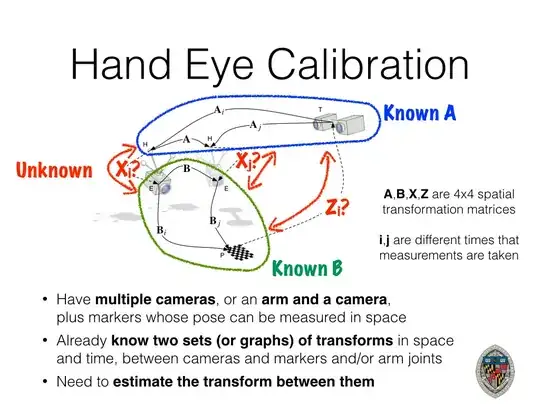

I'm trying to use a dual quaternion hand-eye calibration algorithm (Header and Implementation), and I'm getting values that are way off. I'm using a robot arm and an optical tracker (i.e., a camera), plus a fiducial attached to the end effector. In my case the camera is not on the hand; it sits off to the side, looking at the arm.

The transforms I have are:

- Robot Base -> End Effector

- Optical Tracker Base -> Fiducial

The transform I need is:

- Fiducial -> End Effector
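
To make the relationship between these transforms explicit, here is how I understand the constraint, written as a sketch under my own assumptions. The symbols are mine and not from the library: $E_i$ is the end effector pose in the robot base frame at sample $i$, $F_i$ is the fiducial pose in the tracker frame, $X$ is the fixed fiducial pose in the end effector frame, and $Z$ is the fixed tracker pose in the robot base frame.

$$
E_i \, X = Z \, F_i
$$

Eliminating the unknown $Z$ between two samples $i$ and $j$ gives the usual relative-motion form:

$$
\left(E_i^{-1} E_j\right) X = X \left(F_i^{-1} F_j\right)
$$

I have not confirmed whether the calibration code expects the transforms in this direction or their inverses.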

I'm moving the arm to a series of 36 points on a path (blue line), and near each point I record the position (xyz) and orientation (an angle-axis vector whose magnitude is the rotation angle theta) of both Camera->Fiducial and Base->EndEffector, then put them in the vectors required by the HandEyeCalibration algorithm. I also make sure to vary the orientation by roughly ±30 degrees in roll, pitch, and yaw.
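
For reference, this is a minimal sketch of how I interpret each sample, assuming the angle-axis vector is a rotation vector (unit axis scaled by theta, in radians). The function names `rotvec_to_matrix` and `pose_to_matrix` are hypothetical helpers for illustration and are not part of the HandEyeCalibration code.

```python
import math

def rotvec_to_matrix(rx, ry, rz):
    """Rodrigues formula: rotation vector (axis * theta) -> 3x3 rotation matrix."""
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        # Negligible rotation: return the identity.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta  # unit axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v,      kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v,      ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def pose_to_matrix(rotvec, xyz):
    """Build a 4x4 homogeneous transform from a rotation vector and a translation."""
    R = rotvec_to_matrix(*rotvec)
    return [
        R[0] + [xyz[0]],
        R[1] + [xyz[1]],
        R[2] + [xyz[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

# Example: 90 degrees about z, translated to (1, 2, 3).
T = pose_to_matrix((0.0, 0.0, math.pi / 2), (1.0, 2.0, 3.0))
```

If the tracker or robot reports the angle in degrees, or reports a quaternion instead, this conversion would need to change accordingly.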

I then run estimateHandEyeScrew and get the following results. As you can see, the estimated position is off by roughly an order of magnitude:

- Real: [-0.0583, 0.0387, -0.0373]

- Estimated with HandEyeCalib: [-0.185, -0.404, -0.59]
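
To put a number on "an order of magnitude", here is a small sketch that compares the lengths of the two translation vectors quoted above. The variable names are mine, chosen for illustration.

```python
import math

real = [-0.0583, 0.0387, -0.0373]    # real translation
estimated = [-0.185, -0.404, -0.59]  # translation estimated by HandEyeCalib

def norm(v):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(x * x for x in v))

norm_real = norm(real)
norm_estimated = norm(estimated)
ratio = norm_estimated / norm_real  # how many times longer the estimate is

print(norm_real, norm_estimated, ratio)
```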

Here are the full transforms and debug output:

    # INFO: Before refinement: H_12 =
    -0.443021 -0.223478 -0.86821 0.321341
    0.856051 -0.393099 -0.335633 0.470857
    -0.266286 -0.891925 0.36546 2.07762
    0 0 0 1

    Ceres Solver Report: Iterations: 140, Initial cost: 2.128370e+03, Final cost: 6.715033e+00, Termination: FUNCTION_TOLERANCE.

    # INFO: After refinement: H_12 =
    0.896005 0.154992 -0.416117 -0.185496
    -0.436281 0.13281 -0.889955 -0.404254
    -0.0826716 0.978948 0.186618 -0.590227
    0 0 0 1

    expected RobotTipToFiducial (simulation only): 0.168 -0.861 0.481 -0.0583
    expected RobotTipToFiducial (simulation only): 0.461 -0.362 -0.81 0.0387
    expected RobotTipToFiducial (simulation only): 0.871 0.358 0.336 -0.0373
    expected RobotTipToFiducial (simulation only): 0 0 0 1

    estimated RobotTipToFiducial: 0.896 0.155 -0.416 -0.185
    estimated RobotTipToFiducial: -0.436 0.133 -0.89 -0.404
    estimated RobotTipToFiducial: -0.0827 0.979 0.187 -0.59
    estimated RobotTipToFiducial: 0 0 0 1
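
The translation is not the only thing to compare. Here is a minimal sketch that measures the angle between the rotation parts of the expected and estimated RobotTipToFiducial matrices above, using the trace of the relative rotation. The helper name `rotation_angle_deg` is hypothetical, and the matrices are copied from the rounded debug output, so the result is only approximate.

```python
import math

# Rotation parts (upper-left 3x3) copied from the debug output.
R_expected = [
    [0.168, -0.861, 0.481],
    [0.461, -0.362, -0.81],
    [0.871, 0.358, 0.336],
]
R_estimated = [
    [0.896, 0.155, -0.416],
    [-0.436, 0.133, -0.89],
    [-0.0827, 0.979, 0.187],
]

def rotation_angle_deg(Ra, Rb):
    """Angle in degrees of the relative rotation Ra^T * Rb."""
    # trace(Ra^T Rb) equals the sum of elementwise products of Ra and Rb.
    trace = sum(Ra[k][i] * Rb[k][i] for k in range(3) for i in range(3))
    # Clamp to [-1, 1] so rounding in the printed values cannot break acos.
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))

angle_error = rotation_angle_deg(R_expected, R_estimated)
print(angle_error)
```

A small angle would suggest only the translation is wrong, while a large one would suggest the rotation estimate, or the direction of one of my input transforms, is off as well.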

Am I perhaps using it in the wrong way? Is there any advice you can give?