Well, a good data point here may be the Zuse Z3 and Z4 computers, not least because their workings are closely related to today's computers: they are clock-driven and use binary floating-point arithmetic.

The 1941 Z3 operated at a clock frequency of 5.3 Hz, needing 0 to 20 clocks per instruction (9 to 41 for FP/decimal conversion):

| Operation | Clocks | Instr./sec | Instr./h |
|---|---|---|---|
| Load/Store | 1 | 5.3 | 19,080 |
| Addition | 3 | 1.8 | 6,360 |
| Subtraction | 4–5 | 1.1–1.3 | 3,960–4,680 |
| Multiplication | 16 | 0.33 | 1,192 |
| Division | 18 | 0.3 | 1,060 |
| Square root | 20 | 0.27 | 954 |

All of that with 22-bit binary floating point.
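As a rough cross-check, the throughput columns follow from dividing the 5.3 Hz clock by the clocks per instruction. A minimal Python sketch (the names `throughput` and `OPERATIONS` are just illustrative):

```python
# Sketch: derive Z3 instruction throughput from the clock rate and the
# clocks-per-instruction figures listed in the table.
Z3_CLOCK_HZ = 5.3

# (operation, min clocks, max clocks) as listed in the table
OPERATIONS = [
    ("Load/Store", 1, 1),
    ("Addition", 3, 3),
    ("Subtraction", 4, 5),
    ("Multiplication", 16, 16),
    ("Division", 18, 18),
    ("Square root", 20, 20),
]


def throughput(clock_hz, clocks):
    """Return (instructions per second, instructions per hour)."""
    per_sec = clock_hz / clocks
    return per_sec, per_sec * 3600


for name, lo, hi in OPERATIONS:
    fast = throughput(Z3_CLOCK_HZ, lo)  # fewest clocks -> highest rate
    slow = throughput(Z3_CLOCK_HZ, hi)  # most clocks -> lowest rate
    if lo == hi:
        print(f"{name:15} {fast[0]:5.2f}/s {fast[1]:8,.0f}/h")
    else:
        print(f"{name:15} {slow[0]:.2f}-{fast[0]:.2f}/s "
              f"{slow[1]:,.0f}-{fast[1]:,.0f}/h")
```

Computed directly from the clock counts, subtraction comes out at about 3,816 to 4,770 per hour. The table's 3,960–4,680 appear to be derived from the rounded per-second values instead.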

The 1948 Z4 follows the same basic structure but adds many more instructions, which still run at roughly the same speed.

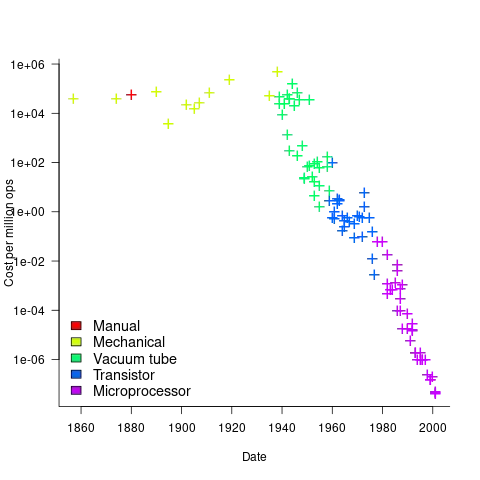

The Z4 might also give a practical data point for the 'Cost Per Million Operations' scale above, as it was the first commercially used computer, rented out to paying customers at 0.01 Swiss francs (CHF) per operation. That's about 2,336 USD (of 1948) per million operations, a figure that lands somewhere in the middle of all those vacuum tube machines, assuming the graph uses roughly contemporary USD (*1).
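The conversion is simple arithmetic. A sketch, assuming the 0.01 CHF price is per operation and an exchange rate of about 4.28 CHF per USD for 1948 (an assumed figure, chosen because it reproduces the number above; `usd_per_million_ops` is a hypothetical helper):

```python
# Sketch: convert the Z4 rental price into a cost per million operations.
PRICE_CHF_PER_OP = 0.01   # ASSUMPTION: price is quoted per operation
CHF_PER_USD_1948 = 4.28   # ASSUMPTION: approximate 1948 exchange rate


def usd_per_million_ops(price_chf_per_op, chf_per_usd):
    """Cost of one million operations, converted from CHF to USD."""
    chf_per_million = price_chf_per_op * 1_000_000
    return chf_per_million / chf_per_usd


cost = usd_per_million_ops(PRICE_CHF_PER_OP, CHF_PER_USD_1948)
print(f"{cost:,.0f} USD per million operations")
```

With these inputs it yields roughly 2,336 USD. A different exchange-rate assumption shifts the result proportionally.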

The 1953 Z5 follows the same pattern, but it also cranks up (*2) the clock frequency to 40 Hz. It delivered about 6 to 7 times the performance of a Z3/Z4 (*3), all while extending the FP format to 36 bits for extended precision.

*1 - Well, besides not mentioning which currency is used, the graph also doesn't note whether those numbers are inflation-adjusted. Nor does it show a distinct 'relay' category, so one may assume relay machines are counted among the 'tube' data points.

*2 - Quite literally, as the clock generator was a motor driving a disk whose contacts switched the instruction phases.

*3 - The speed increase is entirely due to faster relay-based memory. While the Zuse machines may have been the first example of memory being the main speed limit, the phenomenon has recurred many times in later generations :))