We solve large-scale optimization problems, with tens of thousands of variables and constraints, using CVXPY with commercial solvers (e.g., Gurobi, MOSEK).

The coefficient range easily exceeds the recommended range of [1e-3, 1e+6], which eventually leads to numerical instability. This is our main pain point.

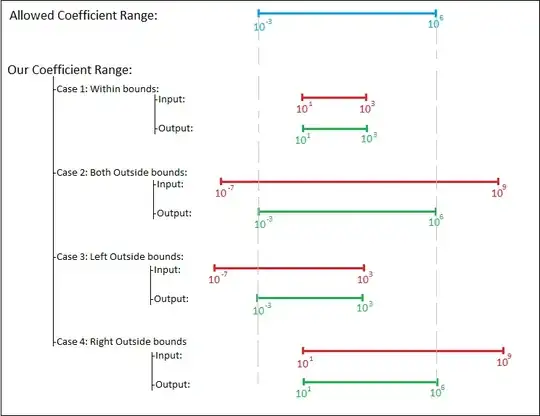

To resolve this, we want to scale the coefficients of the optimization problem, specifically the linear constraints $Ax \le b$ (`A @ x <= b` in CVXPY), where $A\in\Bbb R^{m\times n}$, $x\in\Bbb R^{n}$ and $b\in\Bbb R^{m}$, so that all scaled coefficients lie within [1e-3, 1e+6].
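To make the target concrete, here is a minimal check, assuming the bounds apply to the absolute value of every nonzero entry of $A$ (zeros do not count as coefficients, and $b$ is not checked). The helper names and the list-of-rows layout are hypothetical and not part of CVXPY.

```python
def coefficient_range(A):
    """Smallest and largest nonzero |a_ij| of A, given as a list of rows.

    Raises ValueError if A has no nonzero entries (min/max of an empty list).
    """
    mags = [abs(a) for row in A for a in row if a != 0]
    return min(mags), max(mags)


def is_well_scaled(A, lo=1e-3, hi=1e6):
    """True if every nonzero |a_ij| of A lies within [lo, hi]."""
    return all(lo <= abs(a) <= hi for row in A for a in row if a != 0)


print(coefficient_range([[1e5, 1e7]]))  # (100000.0, 10000000.0)
print(is_well_scaled([[1e5, 1e7]]))     # False: 1e7 exceeds 1e6
```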

For example:

- Raw constraint: $10^5x_1 + 10^7 x_2 \le 10^9$

- Choose the scaling factor $\alpha = 10^5$ and divide both sides by it.

- Scaled constraint: $x_1 + 10^2 x_2 \le 10^4$
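The arithmetic in this example can be reproduced with a small helper. `scale_row` is a hypothetical name; the check at the end only illustrates, on one sample point, that dividing by a positive $\alpha$ leaves the feasible set unchanged.

```python
def scale_row(coeffs, rhs, alpha):
    """Divide one constraint row a^T x <= rhs by alpha; alpha > 0 keeps the <= direction."""
    if alpha <= 0:
        raise ValueError("alpha must be positive, otherwise the inequality flips")
    return [a / alpha for a in coeffs], rhs / alpha


# Raw constraint: 1e5*x1 + 1e7*x2 <= 1e9, scaled by alpha = 1e5
coeffs, rhs = scale_row([1e5, 1e7], 1e9, alpha=1e5)
print(coeffs, rhs)  # [1.0, 100.0] 10000.0

# Mathematically, a point satisfies the raw row exactly when it satisfies the scaled row.
x = (2.0, 50.0)
raw_ok = 1e5 * x[0] + 1e7 * x[1] <= 1e9
scaled_ok = coeffs[0] * x[0] + coeffs[1] * x[1] <= rhs
print(raw_ok, scaled_ok)  # True True
```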

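In general, the scaling factor $\alpha_i$ of row $i$ should be positive (so that the inequality keeps its direction) and should map every nonzero coefficient of that row into [1e-3, 1e+6].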
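Writing this out for row $i$, where $a_{ij}$ is the $j$-th entry of $A_i$ and only nonzero entries count, and solving for $\alpha_i > 0$ gives an explicit interval of admissible factors:

$$
10^{-3} \le \frac{|a_{ij}|}{\alpha_i} \le 10^{6}\ \text{ for all } j \text{ with } a_{ij} \ne 0
\quad\Longleftrightarrow\quad
10^{-6}\max_{j}|a_{ij}| \;\le\; \alpha_i \;\le\; 10^{3}\min_{j:\,a_{ij}\ne 0}|a_{ij}|
$$

The interval is nonempty exactly when $\max_j |a_{ij}| / \min_{j:\,a_{ij}\ne 0} |a_{ij}| \le 10^{9}$; otherwise no choice of $\alpha_i$ alone can bring row $i$ into range. For the example row, this gives $10 \le \alpha \le 10^{8}$, which contains the chosen $\alpha = 10^5$.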

Our question is therefore:

- How should we choose the row-wise scaling factor vector $\alpha = [\alpha_1, \alpha_2, \dots]$ so that the scaled constraint $(A/\alpha)\,x \le b/\alpha$ (row $i$ of $A$ and entry $i$ of $b$ divided by $\alpha_i$) has all coefficients within [1e-3, 1e+6]?

Note: here $\alpha_i$ is the scaling factor for the $i$-th row, i.e., for the constraint $A_i x \le b_i$.
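One possible heuristic, offered as a sketch rather than a standard rule: for each row, any $\alpha_i$ between $\max_j|a_{ij}|/10^{6}$ and $\min_j|a_{ij}|/10^{-3}$ (nonzero entries only) places the whole row inside the target range, so the code below picks the geometric midpoint of that interval. The helper names `row_scaling_factors` and `scale_constraints`, the list-of-rows representation of $A$, and the second data row are hypothetical; with NumPy arrays the same division would be `A / alpha[:, None]` and `b / alpha`.

```python
import math

# Target range for |coefficient|, taken from the recommended bounds above.
LO, HI = 1e-3, 1e6


def row_scaling_factors(A, lo=LO, hi=HI):
    """Return one positive scaling factor alpha_i per row of A (a list of rows).

    Any alpha_i in [max|a_ij| / hi, min|a_ij| / lo] (nonzero entries only) maps
    every |a_ij| / alpha_i into [lo, hi]. This sketch picks the geometric
    midpoint of that interval.
    """
    alphas = []
    for i, row in enumerate(A):
        mags = [abs(a) for a in row if a != 0]
        if not mags:
            alphas.append(1.0)  # all-zero row: nothing to scale
            continue
        lower = max(mags) / hi  # smallest alpha that keeps the largest entry <= hi
        upper = min(mags) / lo  # largest alpha that keeps the smallest entry >= lo
        if lower > upper:
            raise ValueError(
                f"row {i}: max/min = {max(mags) / min(mags):.1e} exceeds "
                f"{hi / lo:.1e}; row scaling alone cannot fix it"
            )
        alphas.append(math.sqrt(lower * upper))
    return alphas


def scale_constraints(A, b, alphas):
    """Divide row i of A and entry i of b by alpha_i (alpha_i > 0 keeps <= intact)."""
    A_scaled = [[a / s for a in row] for row, s in zip(A, alphas)]
    b_scaled = [bi / s for bi, s in zip(b, alphas)]
    return A_scaled, b_scaled


# Hypothetical data: the example row plus a second row with a tiny coefficient.
A = [[1e5, 1e7], [2e-5, 3e2]]
b = [1e9, 1.0]
alphas = row_scaling_factors(A)
A_s, b_s = scale_constraints(A, b, alphas)
for row in A_s:
    print(["%.3g" % a for a in row])
```

The geometric midpoint maps the geometric mean of each row's extreme magnitudes to $\sqrt{10^{-3}\cdot 10^{6}} \approx 31.6$, which leaves the same margin, in orders of magnitude, to both bounds. A simpler common choice, dividing each row by its largest magnitude, satisfies the lower bound only when the row spans at most three orders of magnitude. Rows whose max/min ratio exceeds $10^9$ cannot be fixed by row scaling alone and would also need column scaling (rescaling the variables).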