The main difference is probably the fairly large overhead you pay when solving the AP as a linear program: you have to build an LP model and ship it to a solver. In addition, an LP solver is a generalist. It has to solve all LP problems, so development focuses on being fast on average across all LPs and reasonably fast even in the pathological cases.
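For reference, the model you would have to build is the standard LP formulation of the AP, sketched here with $c_{ij}$ as the cost coefficients and $x_{ij}$ as the assignment variables (the symbol $x_{ij}$ is my notation, not something fixed by the question):

$$
\begin{aligned}
\min\ & \sum_{i=1}^{n}\sum_{j=1}^{n} c_{ij}x_{ij}\\
\text{s.t.}\ & \sum_{j=1}^{n} x_{ij} = 1, && i=1,\dots,n,\\
& \sum_{i=1}^{n} x_{ij} = 1, && j=1,\dots,n,\\
& x_{ij}\ge 0, && i,j=1,\dots,n.
\end{aligned}
$$

That is $n^2$ variables and $2n$ constraints that must be generated and passed to the solver before any solving starts.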

When using the Hungarian method, you do not build a model; you just pass the cost matrix to an algorithm developed for that specific problem. Being a specialist, it will most likely solve the problem faster.
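As a minimal sketch of what "just pass the cost matrix" looks like, here is a call to SciPy's `linear_sum_assignment` on a small made-up 3x3 matrix (the numbers are purely illustrative). As far as I know, recent SciPy versions document this routine as a modified Jonker-Volgenant algorithm rather than the classical Hungarian method, but either way it is a solver tailored to the AP.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

# Illustrative cost matrix: cost[i, j] is the cost of assigning row i to column j.
cost = np.array([[4, 1, 3],
                 [2, 0, 5],
                 [3, 2, 2]])

# No model object is built; the matrix goes straight to the tailored solver.
row_ind, col_ind = linear_sum_assignment(cost)

# Total cost of the optimal assignment.
total_cost = int(cost[row_ind, col_ind].sum())
print(list(zip(row_ind.tolist(), col_ind.tolist())), total_cost)
# prints [(0, 1), (1, 0), (2, 2)] 5
```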

So if you want to solve an AP, you should probably use the tailored algorithm. If you plan on extending your model to handle other, more general constraints as well, you might need the LP after all.

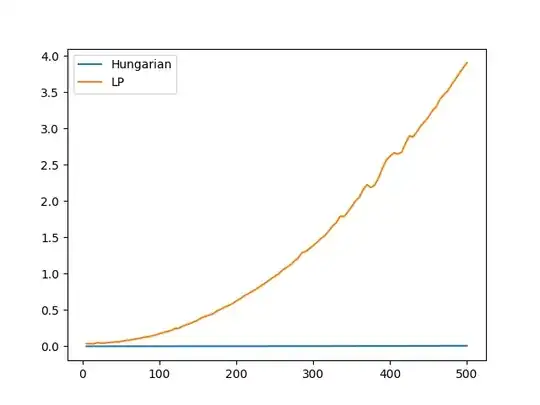

Edit: A simple test in Python confirms my assumption for this specific setup (which, I believe, is to the advantage of the Hungarian method). The setup is as follows:

- A size $n\in \{5,10,\dots,500\}$ is chosen.

- A cost matrix is generated. Each coefficient $c_{ij}$ is generated as a uniformly distributed integer in the range $[250,999]$.

- The instance is solved both with `linear_sum_assignment` and as a linear program. The solution time is measured as wall-clock time, and only the time spent in `linear_sum_assignment` and in the LP solve function is timed (not building the LP and not generating the instance).

For each size, I generated and solved ten instances, and I report only the average time.
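The setup can be sketched roughly as follows. This is not my original script: to keep it self-contained, I use SciPy's `linprog` with the HiGHS backend as a stand-in for the LP solver, and the helper names `make_instance`, `build_lp` and `time_both` are made up for this illustration. The sizes are also kept small so that it runs quickly.

```python
import time
import numpy as np
from scipy.optimize import linear_sum_assignment, linprog


def make_instance(n, rng):
    # Integer costs drawn uniformly from [250, 999] (NumPy's upper bound is exclusive).
    return rng.integers(250, 1000, size=(n, n))


def build_lp(cost):
    # Flatten x_ij row by row; every row and every column of x must sum to 1.
    n = cost.shape[0]
    row_sums = np.kron(np.eye(n), np.ones((1, n)))
    col_sums = np.kron(np.ones((1, n)), np.eye(n))
    return cost.ravel(), np.vstack([row_sums, col_sums]), np.ones(2 * n)


def time_both(n, rng):
    cost = make_instance(n, rng)  # instance generation is not timed

    start = time.perf_counter()
    rows, cols = linear_sum_assignment(cost)
    t_lsa = time.perf_counter() - start

    c, A_eq, b_eq = build_lp(cost)  # building the LP is not timed
    start = time.perf_counter()
    res = linprog(c, A_eq=A_eq, b_eq=b_eq, bounds=(0, 1), method="highs")
    t_lp = time.perf_counter() - start

    # Return both timings and both objective values for a sanity check.
    return t_lsa, t_lp, cost[rows, cols].sum(), res.fun


rng = np.random.default_rng(0)
for n in (5, 10, 20):  # small stand-in sizes for this sketch
    runs = [time_both(n, rng) for _ in range(10)]
    # Average wall-clock time over ten instances for each method.
    print(n, np.mean([r[0] for r in runs]), np.mean([r[1] for r in runs]))
```

Both methods should report the same optimal objective value, since the assignment LP has integral optimal vertices. That makes comparing the two returned objectives a cheap sanity check on the LP construction.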

And then there is of course the "but". I am not a ninja in Python, and I have used pyomo for modelling the LPs. I believe that pyomo is known to be slow-ish when building models, hence I have only timed the `solver.solve(model)` part of the code, not building the model. There is, however, possibly a huge overhead coming from pyomo translating the model to "gurobian" (I use gurobi as the solver).

The plot of the computation time (in seconds) as a function of the instance size makes it pretty clear that the Hungarian algorithm implemented in `linear_sum_assignment` is a lot faster than the pyomo+gurobi approach.