Historically, there were several competing technologies using ring structures like Token Ring or FDDI, but due to higher cost, lower performance, or simply slower development they've all vanished.

Modern, ubiquitous Ethernet uses switches to bridge all network ports together, so any ring or other looped topology creates a bridge loop, bringing down the network unless it is explicitly dealt with. Redundant links require a means to mitigate the bridge loops they form, most prominently by blocking redundant ports through a spanning tree protocol (MSTP, RSTP, the obsolete STP, or Cisco's proprietary Rapid PVST+) or by using routing algorithms with switching (Shortest Path Bridging or TRILL).
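To illustrate what "blocking redundant ports" amounts to, here is a minimal sketch that builds a loop-free tree over a six-switch ring and reports the link left out of it. It is not an implementation of STP: there are no BPDUs, bridge priorities or port costs, and the function name `blocked_links` and the choice of root are made up for the example. It only shows that a ring needs exactly one blocked link to become loop-free.

```python
from collections import deque

def blocked_links(links, root):
    """Return the links that are NOT part of a breadth-first tree rooted at `root`.

    Assumes a simple graph (no parallel links between the same two switches).
    """
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    visited = {root}
    tree = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for peer in neighbors[node]:
            if peer not in visited:
                visited.add(peer)
                tree.add(frozenset((node, peer)))  # this link stays forwarding
                queue.append(peer)

    # Every link outside the tree would close a loop, so it gets blocked.
    return [link for link in links if frozenset(link) not in tree]

# Six switches, numbered 0..5, cabled as a ring.
ring = [(i, (i + 1) % 6) for i in range(6)]
print(blocked_links(ring, root=0))  # [(3, 4)]
```

With switch 0 as the root, the blocked link ends up on the far side of the ring, which is also roughly where a real spanning tree would put it when all link costs are equal.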

Accordingly, the 'native' topology for Ethernet is a tree (sometimes called a 'multi-star'). Advantages of tree vs ring include: smaller network diameter, higher efficiency, lower latency.

A fat tree, with bandwidth increasing towards the root, also lets that topology scale extremely well. (Just imagine 100 switches in a three-tier tree, or in a ring...)

A ring network with one of the links blocked by xSTP:

If one of the switches fails, the blocked link changes to forwarding and the remaining five switches stay connected. If a second switch dies, the network splits in two (unless the two failed switches happen to be adjacent).
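The failure cases can be checked with a small sketch. It assumes a ring of six switches numbered 0 to 5 and that the spanning tree has already reconverged, so every surviving physical link is usable. The function name `groups_after_failure` is hypothetical.

```python
def groups_after_failure(n, failed):
    """Return the groups of switches that can still reach each other
    in a ring of n switches after the switches in `failed` have died."""
    seen = set()
    groups = []
    for start in range(n):
        if start in failed or start in seen:
            continue
        seen.add(start)
        stack = [start]
        group = []
        while stack:
            node = stack.pop()
            group.append(node)
            # In a ring, each switch has exactly two neighbors.
            for peer in ((node - 1) % n, (node + 1) % n):
                if peer not in failed and peer not in seen:
                    seen.add(peer)
                    stack.append(peer)
        groups.append(sorted(group))
    return groups

print(groups_after_failure(6, {0}))     # [[1, 2, 3, 4, 5]]
print(groups_after_failure(6, {0, 3}))  # [[1, 2], [4, 5]]
print(groups_after_failure(6, {0, 1}))  # [[2, 3, 4, 5]]
```

One failure leaves a single chain of five. A second failure splits the ring unless the two dead switches sit next to each other.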

The diameter of that network is five hops, with latency to match. Traffic between non-adjacent switches has to cross every intermediate switch and link, so unless there is ample bandwidth, congestion is more likely than in a tree. Adding more switches makes the problem worse. Also, a ring of more than seven switches can exceed STP's design limit (a recommended maximum diameter of seven bridges with default timers) and might not (re)converge.
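As a rough check on how the diameter grows, the sketch below models a ring with one blocked link as a simple chain and measures the longest shortest path by breadth-first search. The helper names `diameter` and `blocked_ring` are made up for the example, and the graph is assumed to be connected.

```python
from collections import deque

def diameter(links):
    """Longest shortest path, in hops, of a connected graph given as link pairs."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    def farthest(start):
        # Plain BFS: hop count from `start` to the most distant switch.
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for peer in neighbors[node]:
                if peer not in dist:
                    dist[peer] = dist[node] + 1
                    queue.append(peer)
        return max(dist.values())

    return max(farthest(node) for node in neighbors)

def blocked_ring(n):
    """Active links of an n-switch ring with one link blocked: a chain."""
    return [(i, i + 1) for i in range(n - 1)]

for n in (6, 12, 24):
    print(n, diameter(blocked_ring(n)))
# 6 5
# 12 11
# 24 23
```

Whichever link ends up blocked, the active topology is a chain of n switches, so the diameter is always n - 1 hops and grows linearly with the ring.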

A tree network (collapsed core):

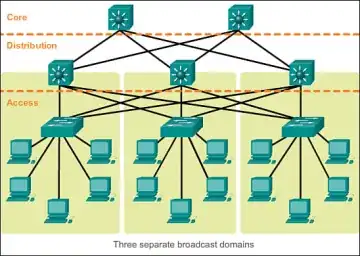

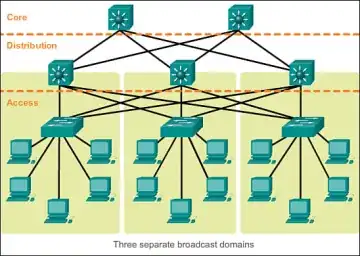

If one of the access switches fails, nothing else happens. If the single core switch dies, everything is offline. If there's a redundant core switch then that network is hard to bring down.

Note the diameter of just two hops. That diameter doesn't even change when you add some more switches. If you need to add more switches than the core can connect, then you add a distribution tier. That way, the tree can easily grow to more than 1500 switches (of 40+ ports) with a diameter of just four.
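The "more than 1500 switches" figure can be reproduced with simple arithmetic. The sketch below assumes a single core switch, one uplink per switch, no redundant links, and every switch having the same port count. The function name `three_tier_capacity` is hypothetical, and real designs with redundant cores and dual uplinks come out smaller.

```python
def three_tier_capacity(ports):
    """Switch counts for a core/distribution/access tree built from
    identical switches with `ports` ports each (single uplinks, one core)."""
    distribution = ports                 # every core port feeds one distribution switch
    access = distribution * (ports - 1)  # each distribution switch spends one port on its uplink
    return {
        "core": 1,
        "distribution": distribution,
        "access": access,
        "total": 1 + distribution + access,
    }

print(three_tier_capacity(40))
# {'core': 1, 'distribution': 40, 'access': 1560, 'total': 1601}
```

The longest path runs access, distribution, core, distribution, access, which is four hops no matter how many of the 1560 access switches are actually installed.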

A three-tier hierarchical network from Cisco Networking Academy Connecting Networks Companion Guide: Hierarchical Network Design

PS: The way that question was asked made me think of the data link layer only. On the network layer, routers can make much better use of meshed networks than bridges/switches.

While you are much freer at the network layer to use whatever topology makes sense for you, the diameter, efficiency and latency arguments from above still apply. However, there may be more important aspects when designing your network. A ring of (few) core routers can make a lot of sense for a large network.