Given matrices

$$A_i= \biggl(\begin{matrix} 0 & B_i \\ B_i^T & 0 \end{matrix} \biggr)$$

where the $B_i$ are real matrices and $i=1,2,\ldots,N$, how can one prove the following?

$$\det \big( I + e^{A_1}e^{A_2}\ldots e^{A_N} \big) \ge 0$$

This seems to be true numerically.
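A minimal sketch of such a numerical check is given here. It is not the original script: it assumes NumPy and SciPy are available and takes the $B_i$ to be square $n\times n$ Gaussian random matrices. The helper names `random_A` and `det_one_plus_product` and the `scale` parameter are hypothetical, chosen only for illustration.

```python
import numpy as np
from scipy.linalg import expm


def random_A(n, rng, scale=0.5):
    """Build A = [[0, B], [B^T, 0]] from a random real n x n block B (assumed Gaussian)."""
    B = scale * rng.standard_normal((n, n))
    Z = np.zeros((n, n))
    return np.block([[Z, B], [B.T, Z]])


def det_one_plus_product(n, N, rng, scale=0.5):
    """Return det(I + e^{A_1} e^{A_2} ... e^{A_N}) for N random matrices A_i."""
    P = np.eye(2 * n)
    for _ in range(N):
        P = P @ expm(random_A(n, rng, scale))
    return np.linalg.det(np.eye(2 * n) + P)


rng = np.random.default_rng(0)  # fixed seed so the run is reproducible
dets = [det_one_plus_product(n=2, N=3, rng=rng) for _ in range(200)]
print("smallest determinant over 200 samples:", min(dets))
```

Keeping `scale` moderate keeps the product reasonably well conditioned, so the sign of the computed determinant is not dominated by rounding error.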

Update1: As shown in the answers below, the above inequality is related to another conjecture: $\det(1+e^M)\ge 0$ for any $2n\times 2n$ real matrix $M$ that satisfies $\eta M \eta =-M^T$, where $\eta=\operatorname{diag}(1_n, -1_n)$. The answers of Christian and Will, although inspiring, did not actually disprove this conjecture, as far as I understand.
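A brief sketch of why each $A_i$ meets this condition, assuming square $n\times n$ blocks $B_i$ so that $\eta$ and $A_i$ have the same size. Conjugating by $\eta$ flips the sign of the off-diagonal blocks, and $A_i$ is symmetric, so

$$\eta A_i \eta = \biggl(\begin{matrix} 0 & -B_i \\ -B_i^T & 0 \end{matrix} \biggr) = -A_i = -A_i^T .$$

Exponentiating gives $\eta\, e^{A_i} \eta = e^{-A_i}$, and since $e^{A_i}$ is symmetric,

$$\big(e^{A_i}\big)^T \eta\, e^{A_i} = e^{A_i}\, \eta\, e^{A_i} = \eta\, e^{-A_i} e^{A_i} = \eta .$$

Applying this factor by factor, the product $P = e^{A_1}e^{A_2}\ldots e^{A_N}$ satisfies $P^T \eta P = \eta$, even though $P$ itself is in general not symmetric.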

Update2: Thank you all for the fruitful discussion. I have attached my Python script below. If you run it several times, you will observe the following.

$\det(1+e^{A_1}\ldots e^{A_N})$ always seems to be larger than zero (the conjecture).

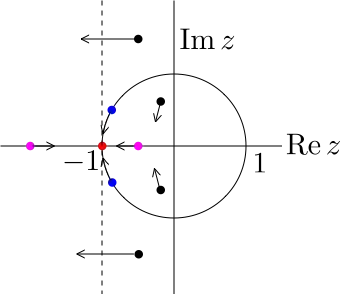

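$M = \log(e^{A_1}\ldots e^{A_N})$ is sometimes indeed purely real, and it then fulfills the condition mentioned in Update1. In this case the eigenvalues $\lambda_l$ of $M$ are either real or come in complex-conjugate pairs. Thus it is easy to show $\det(1+e^M)=\prod_l(1+e^{\lambda_l})\ge 0$: each real eigenvalue contributes a factor $1+e^{\lambda_l}>0$, and each conjugate pair contributes $|1+e^{\lambda_l}|^2\ge 0$.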

However, sometimes the matrix $M = \log(e^{A_1}\ldots e^{A_N})$ is complex, and it is then indeed of the form written down by Suvrit. In this case, it seems that the eigenvalues of $M$ contain two sets of complex values: $\pm a+i\pi$ and $\pm b + i\pi$. Since $e^{\pm a+i\pi}=-e^{\pm a}$, each set contributes $(1-e^a)(1-e^{-a})=2-2\cosh a\le 0$, and the product of two such nonpositive factors is nonnegative. Therefore, $\det(1+e^{M})\ge 0$ still holds because $(1-e^a)(1-e^{-a})(1-e^{b})(1-e^{-b})\ge 0$.
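The two cases can be inspected without taking a matrix logarithm, because the eigenvalues $\mu_l$ of $P=e^{A_1}\ldots e^{A_N}$ are $\mu_l=e^{\lambda_l}$: a negative real $\mu_l$ corresponds to $\lambda_l$ with imaginary part $\pi$. The following is a rough sketch under the same assumptions as before (NumPy and SciPy, square Gaussian $B_i$); the variable names and the tolerance used to classify eigenvalues are illustrative choices, not part of the original script.

```python
import numpy as np
from scipy.linalg import expm

rng = np.random.default_rng(1)
n, N = 2, 3  # block size and number of factors (illustrative values)

# Build P = e^{A_1} ... e^{A_N} from random square blocks B_i.
P = np.eye(2 * n)
for _ in range(N):
    B = 0.5 * rng.standard_normal((n, n))
    Z = np.zeros((n, n))
    P = P @ expm(np.block([[Z, B], [B.T, Z]]))

mu = np.linalg.eigvals(P)  # eigenvalues of P, i.e. e^{lambda_l}

# det(1 + P) computed directly and as the product of (1 + mu_l).
det_direct = np.linalg.det(np.eye(2 * n) + P)
det_from_eigs = np.prod(1 + mu)


def is_real(z, tol=1e-9):
    """Treat z as real if its imaginary part is negligible (heuristic tolerance)."""
    return abs(z.imag) < tol * max(1.0, abs(z))


positive_real = [z.real for z in mu if is_real(z) and z.real > 0]
negative_real = [z.real for z in mu if is_real(z) and z.real < 0]
complex_pairs = [z for z in mu if not is_real(z)]

print("positive real eigenvalues:", positive_real)
print("negative real eigenvalues:", negative_real)
print("non-real eigenvalues:", complex_pairs)
print("det(1 + P):", det_direct)
```

Changing the seed and rerunning shows which of the two cases a given sample falls into: negative real eigenvalues of $P$ signal a complex $M$.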

Update3: Thank you GH from MO, Terry, and all others. I am glad this was finally solved. One more question: how should I cite this result in a future academic publication?

Update4: Please see the publication that grew out of this question: arXiv:1506.05349.