There are two agents $i=1,2$. Each agent chooses a control $\tau_i\in[0,1]$, and the state $k$ evolves according to \begin{align} \dot{k} = f(k,\tau_1,\tau_2). \end{align}

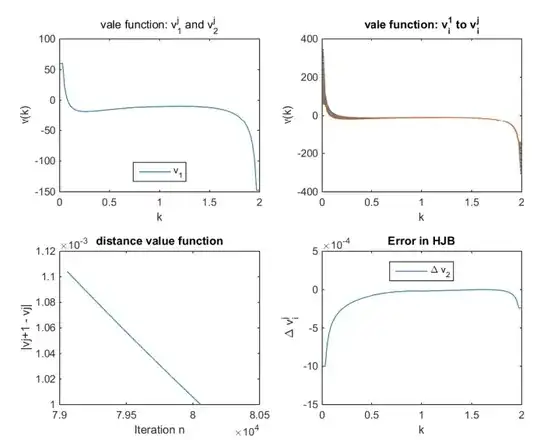

Define the value function of player $i$ by \begin{align} v_i(k) := \sup_{\tau_i}\int_0^\infty e^{-\rho t}F(k,\tau_i,\sigma_{-i})\,dt, \end{align} where $\sigma_{-i}(k)$ is the policy function (the Markovian strategy) of player $-i$ and $\rho>0$ is the rate of time preference.

The Hamilton-Jacobi-Bellman equation reads \begin{align} \rho v_i(k) = \sup_{\tau_i}\{F(k,\tau_i,\sigma_{-i}) + v_i'(k)f(k,\tau_i,\sigma_{-i})\}. \end{align}
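For any candidate function, both sides of this equation can be evaluated on a grid, which gives a pointwise HJB residual. The sketch below is only an illustration under assumed primitives: the payoff `F`, the dynamics `f`, the parameters `RHO` and `DELTA`, and the constant opponent policy `sigma` are hypothetical and were chosen so that a linear function solves the equation in closed form. The derivative is approximated by a central difference and the supremum by a maximum over a grid of controls. A residual near zero is a necessary diagnostic, not by itself a proof that the candidate is the value function.

```python
import numpy as np

RHO, DELTA = 0.5, 1.0  # assumed discount rate and decay rate (hypothetical)

def F(k, tau_i, tau_j):
    # Hypothetical payoff: linear benefit from the state, quadratic control cost.
    return k - 0.5 * tau_i**2

def f(k, tau_i, tau_j):
    # Hypothetical dynamics: both controls raise the state, which decays at DELTA.
    return tau_i + tau_j - DELTA * k

def hjb_residual(v_hat, sigma_j, k_grid, n_tau=201, h=1e-5):
    """Return rho*v_hat(k) - max_tau {F + v_hat'(k) f} at each grid point."""
    taus = np.linspace(0.0, 1.0, n_tau)                       # control grid on [0, 1]
    dv = (v_hat(k_grid + h) - v_hat(k_grid - h)) / (2.0 * h)  # central difference
    K, T = np.meshgrid(k_grid, taus, indexing="ij")
    S = sigma_j(K)                                            # opponent's Markov policy
    rhs = F(K, T, S) + dv[:, None] * f(K, T, S)
    return RHO * v_hat(k_grid) - rhs.max(axis=1)

# Closed-form linear solution a*k + b for these assumed primitives.
A = 1.0 / (RHO + DELTA)
B = (0.5 * A**2 + A * A) / RHO

def v_lin(k):
    return A * k + B

def sigma(k):
    # Opponent plays the constant control A at every state.
    return np.full_like(k, A)

k_grid = np.linspace(0.1, 5.0, 50)
res = hjb_residual(v_lin, sigma, k_grid)
print(np.max(np.abs(res)))
```

Applying `hjb_residual` to $\hat{v}_i$ (for example through an interpolant of the grid values) shows where on the state space the approximation violates the equation. The control grid and the finite-difference step both add discretization error, so the residual should be judged relative to those tolerances.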

Now suppose I have computed an approximation $\hat{v}_i$ by value function iteration. How can I verify that it is the true value function?